Computer Vision for Augmented Reality in Embedded Designs

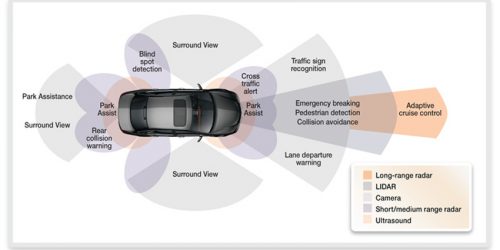

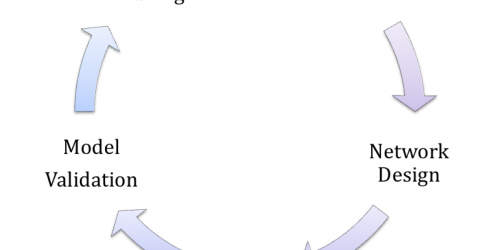

Augmented reality (AR) and related technologies and products are becoming increasingly prevalent, led by their adoption in smartphones, tablets, and other mobile computing and communications devices. While developers of more deeply embedded platforms are also motivated to incorporate AR capabilities in their products, the comparative scarcity of processing, memory, storage, and networking resources […]
