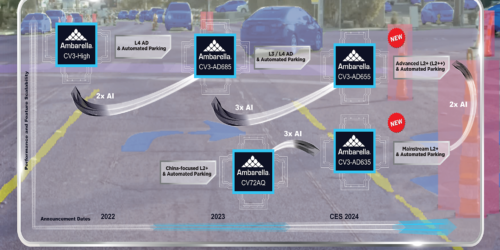

IDTechEx Company Profile: Ambarella

This market research report was originally published on Ambarella’s website and is reprinted here with the permission of Ambarella. Note: This article was originally published on the IDTechEx subscription platform and is reprinted here with the permission of IDTechEx; the full profile, including the SWOT analysis and the IDTechEx index, is available as part of […]
