Industrial Applications for Embedded Vision

Products based on computer vision processing have established themselves in a number of industrial applications, the most prominent being factory automation, where the application is commonly referred to as machine vision. The primary factory automation sectors include:

- Automotive — motor vehicle and related component manufacturing

- Chemical & Pharmaceutical — chemical and pharmaceutical manufacturing plants and related industries

- Packaging — packaging machinery, packaging manufacturers and dedicated packaging companies not aligned to any one industry

- Robotics — guidance of robots and robotic machines

- Semiconductors & Electronics — semiconductor machinery makers, semiconductor device manufacturers, electronic equipment manufacturing and assembly facilities

What are the primary embedded vision products used in factory automation applications?

The primary embedded vision products used in factory automation applications are:

- Smart Sensors — A single unit designed to perform a single machine vision task. Smart sensors require little or no configuration and have limited on-board processing. A lens and lighting are frequently incorporated into the unit as well.

- Smart Cameras — A single unit that incorporates a machine vision camera, a processor and I/O in a compact enclosure. Smart cameras are configurable, so they can be used for a number of different applications. Most allow the lens to be changed and are also available with built-in LED lighting.

- Compact Vision Systems — A complete machine vision system that is not PC-based, consisting of one or more cameras and a processor module. Some products incorporate an LCD screen into the processor module, which removes the need to connect the devices to a monitor for set-up. The principal feature that distinguishes compact vision systems (CVS) from smart cameras is their ability to take information from multiple cameras, which can be more cost-effective when an application requires multiple images.

- Machine Vision Cameras (MV Cameras) — Devices that convert an optical image into an analogue or digital signal. The signal may be stored in random access memory within the device, but it is not processed there.

- Frame Grabbers — A device (usually a PCB card) for interfacing the video output from a camera with a PC or other control device. Frame grabbers are sometimes called video-capture boards or cards. They range from simple interfaces to more complex devices that can handle many functions, including triggering, exposure rates, shutter speeds and complex signal processing.

- Machine Vision Lighting — This refers to any device that is used to light a scene being viewed by a machine-vision camera or sensor. This report considers only those devices that are designed and marketed for use in machine-vision applications in an industrial automation environment.

- Machine Vision Lenses — This category includes all lenses used in a machine-vision application, whether sold with a camera or as a spare or additional part.

- Machine Vision Software — This category includes all software that is sold as a product in its own right and designed specifically for machine vision applications. It is split into:

  - Library Software — Allows users to develop their own MV system architecture. There are many different types, some offering great flexibility. Library packages are often called SDKs (Software Development Kits).

  - System Software — Designed for a particular application. Some packages are very comprehensive and require little or no set-up.

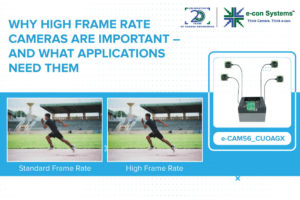

Why High Frame Rate Cameras are Important, and What Applications Need Them

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. As embedded vision systems keep taking quantum leaps to become more innovative and intuitive, it’s becoming more complex to address the specifications for resolution and frame rate. As you may know, conventionally, applications used in
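
The trade-off between resolution and frame rate largely comes down to bandwidth: the raw, uncompressed pixel data rate is width × height × bits per pixel × frames per second. The minimal Python sketch below illustrates that arithmetic; the function names, the example resolutions, the bit depths and the 5 Gbit/s link figure are hypothetical illustrations rather than e-con Systems specifications, and real interfaces add protocol overhead that is ignored here.

```python
def raw_data_rate_bps(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed pixel data rate in bits per second (protocol overhead ignored)."""
    return width * height * bits_per_pixel * fps


def max_fps(width: int, height: int, bits_per_pixel: int, link_bps: float) -> float:
    """Highest frame rate a link of link_bps bits/s could carry at this resolution."""
    return link_bps / (width * height * bits_per_pixel)


if __name__ == "__main__":
    # Hypothetical example: 1920x1080, 8-bit monochrome, 60 fps.
    rate = raw_data_rate_bps(1920, 1080, 8, 60)
    print(f"1080p60, 8-bit: {rate / 1e9:.3f} Gbit/s")
    # Hypothetical 5 Gbit/s link budget for a 4096x3000, 12-bit sensor.
    print(f"Max fps at 4096x3000, 12-bit: {max_fps(4096, 3000, 12, 5e9):.1f}")
```

Doubling either the pixel count or the frame rate doubles the data rate, which is why high-resolution, high-frame-rate cameras push system designers toward faster interfaces or on-camera processing.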

Axelera AI Demonstration of Fast and Efficient Workplace Safety with the Metis AIPU

Bram Verhoef, Co-founder of Axelera AI, demonstrates the company’s latest edge AI and vision technologies and products at the 2024 Embedded Vision Summit. Specifically, Verhoef demonstrates how his company’s Metis AIPU can accelerate computer vision applications. Axelera AI, together with its partner FogSphere, has developed a computer vision system that detects if people are wearing

Basler Presents New CXP-12 Line Scan Cameras with 8k and 16k Resolution

With the new racer 2 L 8k and 16k line scan cameras, Basler is launching the first models in its new line scan camera series. They are equipped with the latest Gpixel sensors and CXP-12 interface. In addition to the cameras, Basler offers all other important components for a line scan vision system, including lighting

“Intel’s Approach to Operationalizing AI in the Manufacturing Sector,” a Presentation from Intel

Tara Thimmanaik, AI Systems and Solutions Architect at Intel, presents the “Intel’s Approach to Operationalizing AI in the Manufacturing Sector,” tutorial at the May 2024 Embedded Vision Summit. AI at the edge is powering a revolution in industrial IoT, from real-time processing and analytics that drive greater efficiency and learning…

ace 2 V: Basler Presents CoaXPress 2.0 Camera in a Small Design

With the new ace 2 V models, Basler AG presents compact cameras with the CoaXPress 2.0 interface. The international manufacturer of high-quality machine vision hardware and software also offers all the components necessary for a complete, cost-effective CXP vision system. Ahrensburg, June 25, 2024 – The Basler ace 2 V expands the portfolio of the

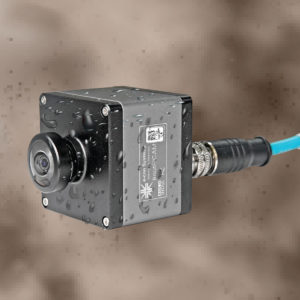

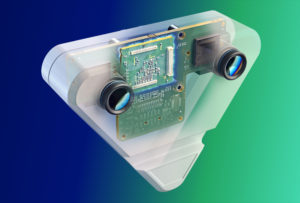

e-con Systems Unveils New Robust All-weather Global Shutter Ethernet Camera for Outdoor Applications

Latest powerful addition to its RouteCAM series. California & Chennai (June 12, 2024): e-con Systems, a global leader in embedded vision solutions, introduces a new outdoor-ready global shutter GigE camera, RouteCAM_CU25, a powerful addition to its high-performance Ethernet camera series, RouteCAM. This Full HD Power over Ethernet (PoE) camera excels in delivering accurate

Basler AG Acquires Stake in Roboception GmbH

Computer vision expert Basler and 3D vision specialist Roboception strengthen their existing cooperation: Basler has acquired a 25.1% stake, and the two companies plan to expand the 3D solutions business for factory automation, robotics and logistics. Ahrensburg, June 12, 2024 – Basler AG, a leading supplier of image processing components for computer vision applications, has acquired 25.1% of the

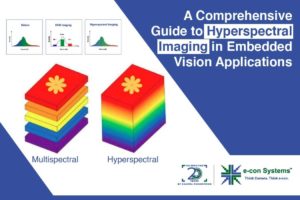

A Comprehensive Guide to Hyperspectral Imaging in Embedded Vision Applications

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Hyperspectral imaging lets cameras see beyond human vision. It offers a high spectral resolution by capturing data in large numbers of spectral bands. Explore the technical aspects of hyperspectral imaging and discover its applications in

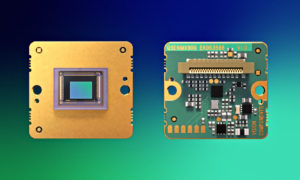

New Vision Components MIPI Cameras with GMSL2 and Miniature Vision Systems for Smart and Autonomous Devices

Vision Components presents at CVPR 2024. Ettlingen, May 14, 2024 – Vision Components presents its MIPI CSI-2 camera lineup with an optional GMSL2 interface for data transmission over cables up to 10 meters long at the Computer Vision and Pattern Recognition (CVPR) Conference, 17–21 June in Seattle. Further highlights are the world’s first MIPI Camera

Vision Components’ MIPI Cameras with GMSL2 for Cable Lengths of Up to 10 Meters

Vision Components extends its MIPI portfolio. Ettlingen, May 14, 2024 – Vision Components now offers its VC MIPI Cameras with an optional GMSL2 interface for data transmission over cables up to 10 meters long. To this end, the company has developed an adapter board that is fully integrated into the design of the modules. The

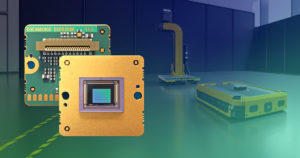

Why e-con Systems’ Latest 5MP Global Shutter Camera is Ideal for Factory Automation

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Embedded vision has transformed factory automation – thanks to advances in sensor technology, processing power, and AI. Discover the key features of e-CAM56_CUOAGX, e-con Systems’ latest camera and see why it’s perfect for factory automation.

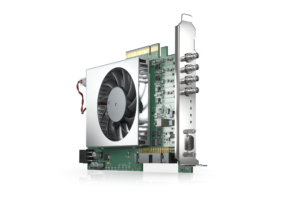

Basler Presents a New, Programmable CXP-12 Frame Grabber

With the imaFlex CXP-12 Quad, Basler AG is expanding its CXP-12 vision portfolio with a powerful, individually programmable frame grabber. Using the graphical FPGA development environment VisualApplets, application-specific image pre-processing and processing for high-end applications can be implemented on the frame grabber. Basler’s boost cameras, trigger boards, and cables combined with the card form a

The Role of Embedded Vision in Ensuring Industrial Safety

This blog post was originally published at TechNexion’s website. It is reprinted here with the permission of TechNexion. Despite numerous rules and regulations in place, ensuring complete workplace safety still seems challenging in the industrial and commercial sectors. According to the US Department of Labor: “4,764 workers died on the job in 2020 (3.4 per

Navigating the Future: How Avnet is Addressing Challenges in AMR Design

This blog post was originally published at Avnet’s website. It is reprinted here with the permission of Avnet. Autonomous mobile robots (AMRs) are revolutionizing industries such as manufacturing, logistics, agriculture, and healthcare by performing tasks that are too dangerous, tedious, or costly for humans. AMRs can navigate complex and dynamic environments, communicate with other devices

Achieving a Zero-incident Vision In Your Warehouse with Dragonfly

This blog post was originally published by Onit. It is reprinted here with the permission of Onit. At Onit, we’re revolutionizing the efficiency and safety standards in warehouse environments through edge AI and computer vision. Leveraging our state-of-the-art Dragonfly and RTLS (real-time locating system) applications, we address the complex challenges inherent in chaotic and labor-intensive

FRAMOS to Showcase Automation Solutions at the Largest Robotics and Automation Show in North America

15th of April 2024 – FRAMOS, a leading global expert in vision systems dedicated to innovation and excellence in enabling devices to see and think, is pleased to announce its presence at the Automate Show 2024 in Chicago, Illinois, USA. FRAMOS will be joining more than 800 game-changing exhibitors at the Automate Show from May

Qualcomm Announces Breakthrough Wi-Fi Technology and Introduces New AI-ready IoT and Industrial Platforms at Embedded World 2024

Highlights: Qualcomm Technologies’ leadership in connectivity, high-performance, low-power processing, and on-device AI positions it at the center of the digital transformation of many industries. The company is introducing new industrial and embedded AI platforms, as well as a micro-power Wi-Fi SoC, helping to enable intelligent computing everywhere. The company is looking to expand its portfolio to address the

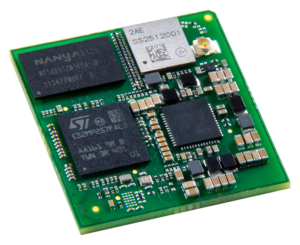

Digi International to Unveil the New Digi ConnectCore MP25 System-on-module for Next-gen Computer Vision Applications at Embedded World 2024

Versatile, wireless and secure system-on-module based on the STMicroelectronics STM32MP25 processor improves efficiency, reduces costs and enables edge processing for innovative new devices. MINNEAPOLIS, April 4, 2024 – Digi International (NASDAQ: DGII, www.digi.com), a leading global provider of Internet of Things (IoT) connectivity products and services, is poised to introduce the wireless and highly power-efficient

Enhancing Precision Agriculture with 20MP High-resolution Cameras for Weed and Bug Detection

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Precision agriculture techniques have grown exponentially in the last decade thanks to ever-advancing camera technologies. In this blog, we explore how a 20MP high-resolution AR2020 sensor-powered camera and its functionality help enhance precision agriculture

Embedded Vision from the Camera to Image Processing with FPGAs

Vision Components at embedded world exhibition. Ettlingen, March 19, 2024 – Vision Components will showcase innovations for fast and easy embedded vision integration at embedded world, from the first MIPI camera with IMX900 image sensor for industrial applications to an update of the VC Power SoM FPGA accelerator. The tiny board enables efficient real-time image

Detecting Real-time Waste Contamination Using Edge Computing and Video Analytics

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. The past few decades have witnessed a surge in rates of waste generation, closely linked to economic development and urbanization. This escalation in waste production poses substantial challenges for governments worldwide in terms of efficient processing and

Vision Components: New Cameras and Vision Systems for Intralogistics

Ettlingen, February 28, 2024 – At LogiMAT trade fair (Messe Stuttgart, March 19 – 21, 2024), Vision Components will present the world’s first industrial-grade MIPI camera module with the new IMX900 image sensor from Sony. The VC MIPI IMX900 global shutter camera offers 3.2 megapixel resolution and high light sensitivity up to the infrared range.

Fixed-focus vs. Autofocus Lenses: How to Choose the Best Lens for Your Application

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. In this blog, you’ll learn about these two lens types with key insights into their attributes and best-use scenarios in the world of embedded vision. Embedded vision systems rely heavily on the capabilities of their

Adapting Strategies: Industrial Machine Vision’s Response to Short-term Challenges

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. In 2023, the total machine vision market generated $6.9 billion in revenue. When will growth return? Outline: industrial machine vision is expected to be a $7.8 billion market in 2029, compared to $6.9 billion in 2023.
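
The two revenue figures quoted above imply a modest compound annual growth rate (CAGR) over the six years from 2023 to 2029. The worked calculation below is simple arithmetic on those two figures, offered as an illustration; it is not a growth rate quoted from the Yole report itself.

$$
\text{CAGR} = \left(\frac{\$7.8\text{B}}{\$6.9\text{B}}\right)^{1/6} - 1 \approx 1.1304^{1/6} - 1 \approx 0.0206 \approx 2.1\%\ \text{per year}
$$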

How IP69K Rating Impacts Camera Performance in Autonomous Mobility Systems

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. In this blog, you’ll discover what capabilities are required to achieve the IP69K rating and its benefits for camera solutions that power autonomous mobility systems. The Ingress Protection (IP) rating system is a recognized standard

Improving Productivity with AI

This blog post was originally published at Digica’s website. It is reprinted here with the permission of Digica. Application of Computer Vision in the Industrial Sector: Inventory management is a key process for all industrial companies, but it is both time-consuming and error-prone. Mistakes can be very costly, and it is highly undesirable

First MIPI Camera with Sony IMX900 Image Sensor

Vision Components announces at Photonics West. Ettlingen, January 22, 2024 – Vision Components introduces the new VC MIPI IMX900 camera module at the SPIE Photonics West trade fair, 27 January – 1 February, San Francisco. It integrates the high-resolution 3.2 megapixel Sony IMX900 global shutter image sensor into an ultra-compact, industrial-grade and long-term available

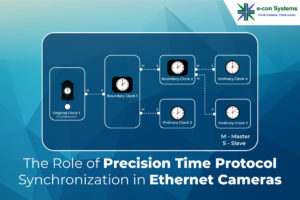

The Role of Precision Time Protocol Synchronization in Ethernet Cameras

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Ethernet cameras rely on precise timing and synchronization for accurate data transmission. The Precision Time Protocol (PTP) ensures sub-microsecond accuracy, synchronizing time clocks among IP-connected devices. Gain expert insights into how precise timing and synchronization
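
The excerpt does not show how PTP arrives at its timing corrections. As a hedged illustration, the sketch below implements the standard IEEE 1588 delay request-response arithmetic: from the four timestamps of one Sync/Delay_Req exchange, a follower clock estimates its offset from the master and the mean path delay, assuming the network path is symmetric. The class and function names are hypothetical; real PTP stacks such as linuxptp add hardware timestamping, filtering and a clock servo on top of this calculation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PtpExchange:
    """Timestamps in nanoseconds from one delay request-response exchange."""
    t1: int  # master sends Sync (master clock)
    t2: int  # follower receives Sync (follower clock)
    t3: int  # follower sends Delay_Req (follower clock)
    t4: int  # master receives Delay_Req (master clock)


def mean_path_delay_ns(ex: PtpExchange) -> float:
    # Average of the two one-way trips; valid only if the path is symmetric.
    return ((ex.t2 - ex.t1) + (ex.t4 - ex.t3)) / 2


def offset_from_master_ns(ex: PtpExchange) -> float:
    # Positive result means the follower clock is ahead of the master.
    return ((ex.t2 - ex.t1) - (ex.t4 - ex.t3)) / 2


if __name__ == "__main__":
    # Hypothetical exchange: follower is 1500 ns ahead, one-way delay is 500 ns.
    ex = PtpExchange(t1=0, t2=2000, t3=3000, t4=2000)
    print("offset:", offset_from_master_ns(ex), "ns")
    print("delay:", mean_path_delay_ns(ex), "ns")
```

The follower then subtracts the estimated offset from its clock; repeating the exchange continuously is what keeps networked cameras aligned closely enough to trigger and timestamp frames together.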

What are the Most Effective Methods to Achieve High Dynamic Range Imaging?

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. The importance of High Dynamic Range (HDR) in new-age embedded vision applications has been steadily growing. HDR cameras can capture fine details from scenes despite changing light conditions. Get expert insights to better understand the six

D3 Announces Production-intent mmWave Radar Kit Based on Texas Instruments Radar Sensor

Based on Texas Instruments mmWave radar technology, the DesignCore® RS-2944A mmWave Radar Sensor features a single board, small form factor, and an easy-to-use USB-serial interface. Rochester, NY – December 19, 2023 – D3 announced its DesignCore® RS-2944A mmWave Radar Sensor Evaluation Kit based on TI’s AWR2944 radar sensor. The DesignCore Evaluation Kit enables the implementation

Silo AI Supports Körber in Redefining Visual Quality Control

This blog post was originally published at Silo AI’s website. It is reprinted here with the permission of Silo AI. The international technology group Körber focuses on the development of AI-driven visual quality control for the pharmaceutical industry. To this end, Körber has teamed up with Silo AI, one of the largest private AI labs in Europe,

A Brief Introduction to Millimeter Wave Radar Sensing

This blog post was originally published by D3. It is reprinted here with the permission of D3. We’ve found that a lot of innovators are considering including millimeter wave radar in their solutions, but some are not familiar with the sensing capabilities of radar when compared with vision, lidar, or ultrasonics. I want to review some

What are Vision Systems? Everything You Need to Know in 2023

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. The ongoing embedded vision evolution carries game-changing implications for various industries. It has the potential to redefine the way machines perceive and engage with their surroundings. Discover the popular types of cameras leading this charge,

The Cloneable Platform: Making Any Device an Intelligent Extension of Your Internal Experts

This blog post was originally published at Cloneable’s website. It is reprinted here with the permission of Cloneable. No-code Edge AI Application Builder. Despite the incredible potential of deep tech like AI and IoT, it’s still a struggle to make it truly impactful for businesses. Today, millions of field devices are collecting data on assets

Case History: Florim

This blog post was originally published by Onit. It is reprinted here with the permission of Onit. 80 Forklifts for Indoor and Outdoor Logistics. Florim is a multinational company recognized for its production of ceramic surfaces. With an innate passion for beauty and design, Florim has been producing ceramic surfaces for every building, architecture and

Schneider Electric Integrates Hailo Technologies Processors for Greater AI Capabilities

Low-power processors enable real-time, high-accuracy data analysis at the edge, increasing the intelligence and efficiency of industrial automation solutions. Rueil-Malmaison, France – November 16, 2023 – Schneider Electric, the leader in the digital transformation of energy management and automation, today announced a technology collaboration with Hailo Technologies, a leading Artificial Intelligence (AI) chipmaker, to

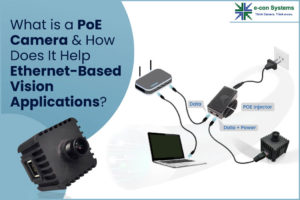

What is a PoE Camera and How Does It Help Ethernet-based Vision Applications?

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. PoE cameras combine power source and data connection capabilities through a single Ethernet cable. They can be integrated into intelligent embedded vision systems across different environments. Find out the real impact of PoE cameras on
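
Because a PoE camera draws its power over the same cable that carries its data, a basic design check is whether the camera's power draw fits within what the chosen PoE standard guarantees at the powered-device end of the cable. The sketch below assumes the commonly cited IEEE 802.3 powered-device budgets (12.95 W, 25.5 W, 51 W and 71.3 W); the function name, the 20% safety margin and the example wattages are hypothetical, and a real design should be checked against the switch and camera datasheets.

```python
from typing import Optional

# Minimum power available at the powered device (camera) end, in watts,
# ordered from lowest to highest budget.
POE_PD_BUDGET_W = [
    ("IEEE 802.3af (Type 1)", 12.95),
    ("IEEE 802.3at (Type 2)", 25.5),
    ("IEEE 802.3bt (Type 3)", 51.0),
    ("IEEE 802.3bt (Type 4)", 71.3),
]


def minimum_poe_standard(camera_draw_w: float, margin: float = 0.2) -> Optional[str]:
    """Return the lowest PoE type whose budget covers the draw plus a safety margin.

    Returns None if even the highest budget is insufficient.
    """
    required_w = camera_draw_w * (1 + margin)
    for name, budget_w in POE_PD_BUDGET_W:
        if required_w <= budget_w:
            return name
    return None


if __name__ == "__main__":
    # Hypothetical camera draws: a bare camera, one with IR LEDs, one with a heater.
    for draw in (6.0, 12.0, 45.0):
        print(f"{draw:5.1f} W -> {minimum_poe_standard(draw)}")
```

Picking the lowest sufficient type keeps the camera compatible with the widest range of switches, while the margin leaves headroom for features such as heaters or IR illumination that raise the draw in outdoor deployments.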

Basler and Siemens Join Forces to Enhance Machine Vision and Factory Automation Capabilities

Basler AG, a leading provider of advanced computer vision products, is excited to announce its new partnership with the technology company Siemens, an innovation leader in automation and digitalization. This strategic partnership will make it much easier for automation customers in all industries to integrate machine vision solutions directly into their automation systems. Ahrensburg, November