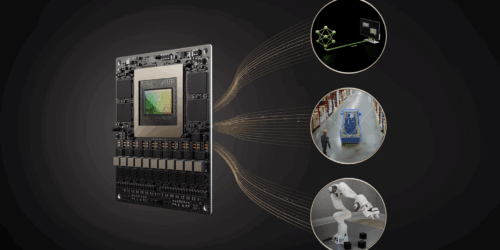

SiMa.ai Announces First Integrated Capability with Synopsys to Accelerate Automotive Physical AI Development

San Jose, California – January 6, 2026 – SiMa.ai today announced the first integrated capability resulting from its strategic collaboration with Synopsys. The joint solution provides a blueprint to accelerate architecture exploration and early virtual software development for AI-ready, next-generation automotive SoCs that support applications such as Advanced Driver Assistance Systems (ADAS) and In-Vehicle Infotainment […]