Synopsys, Inc. (Nasdaq: SNPS) is the Silicon to Software partner for innovative companies developing the electronic products and software applications we rely on every day. As an S&P 500 company, Synopsys has a long history of being a global leader in electronic design automation (EDA) and semiconductor IP and offers the industry’s broadest portfolio of application security testing tools and services. Whether you’re a system-on-chip (SoC) designer creating advanced semiconductors, or a software developer writing more secure, high-quality code, Synopsys has the solutions needed to deliver innovative products.

Synopsys

Recent Content by Company

Inuitive Demonstration of an RGBD Sensor Using a Synopsys ARC-based NU4100 AI and Vision Processor

Dor Zepeniuk, CTO at Inuitive, demonstrates the company’s latest edge AI and vision technologies and products at the 2024 Embedded Vision Summit. Specifically, Zepeniuk demonstrates his company’s latest RGBD sensor, which integrates an RGB color sensor with a depth sensor into a single device. The Inuitive NU4100 is an all-in-one vision processor that supports simultaneous AI-powered […]

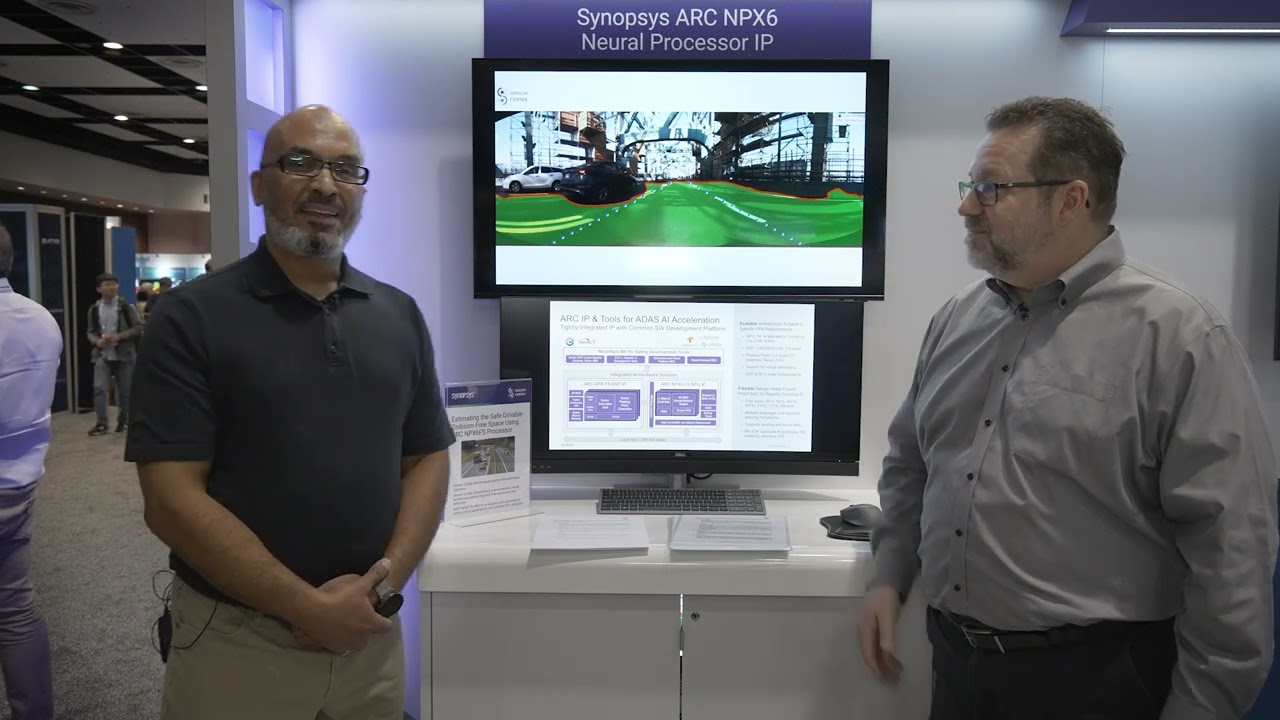

Sensor Cortek Demonstration of SmarterRoad Running on Synopsys ARC NPX6 NPU IP

Fahed Hassanhat, head of engineering at Sensor Cortek, demonstrates the company’s latest edge AI and vision technologies and products in Synopsys’ booth at the 2024 Embedded Vision Summit. Specifically, Hassanhat demonstrates his company’s latest ADAS neural network (NN) model, SmarterRoad, combining lane detection and open space detection. SmarterRoad is a light integrated convolutional network that […]

Ecosystem Collaboration Drives New AMBA Specification for Chiplets

This blog post was originally published at Arm’s website. It is reprinted here with the permission of Arm. AMBA is being extended to the chiplets market with the new CHI C2C specification. As Arm’s EVP and Chief Architect Richard Grisenthwaite said in this blog, Arm is collaborating across the ecosystem on standards to enable a […]

Synopsys at CES 2024: Driving the Future of Software-defined Vehicles

This blog post was originally published at Synopsys’ website. It is reprinted here with the permission of Synopsys. Streaming video. Voice-activated controls. A variety of apps. While these may sound like features in today’s smartphones, they can just as well be applicable to cars. Welcome to the era of software-defined vehicles (SDVs), where sophisticated software […]

Visionary.ai Demonstration of a True Night Vision ISP Running on Synopsys’ ARC AI and Vision Processor

Oren Debbi, CEO and Co-founder of Visionary.ai, demonstrates the company’s latest edge AI and vision technologies and products at the 2023 Embedded Vision Summit. Specifically, Debbi demonstrates Visionary.ai’s low-light software-based image signal processor (ISP). Two cameras with identical hardware are placed inside a box, one using a standard ISP and the other using Visionary.ai’s AI […]

Inuitive Demonstration of Its Multicore Processor Running on a Synopsys ARC AI and Vision Processor

Dor Zepeniuk, Co-founder, CTO and VP Products at Inuitive, demonstrates the company’s latest edge AI and vision technologies and products at the 2023 Embedded Vision Summit. Specifically, Zepeniuk demonstrates Inuitive’s latest multicore vision-on-chip processor, the NU4100. The NU4100 all-in-one vision processor supports simultaneous AI-powered image processing, depth sensing and VSLAM capabilities for a wide range […]

“How Transformers Are Changing the Nature of Deep Learning Models,” a Presentation from Synopsys

Tom Michiels, System Architect for ARC Processors at Synopsys, presents the “How Transformers Are Changing the Nature of Deep Learning Models” tutorial at the May 2023 Embedded Vision Summit. The neural network models used in embedded real-time applications are evolving quickly. Transformer networks are a deep learning approach that has become dominant for natural language […]

2023 Edge AI and Vision Product of the Year Award Winner Showcase: Synopsys (Edge AI Processors)

Synopsys’ ARC® NPX6 NPU IP is the 2023 Edge AI and Vision Product of the Year Award Winner in the Edge AI Processors category. The ARC NPX6 NPU IP is an AI inference engine optimized for the latest neural network models – including newly emerging transformers – which can scale from 8 to 3,500 TOPS […]

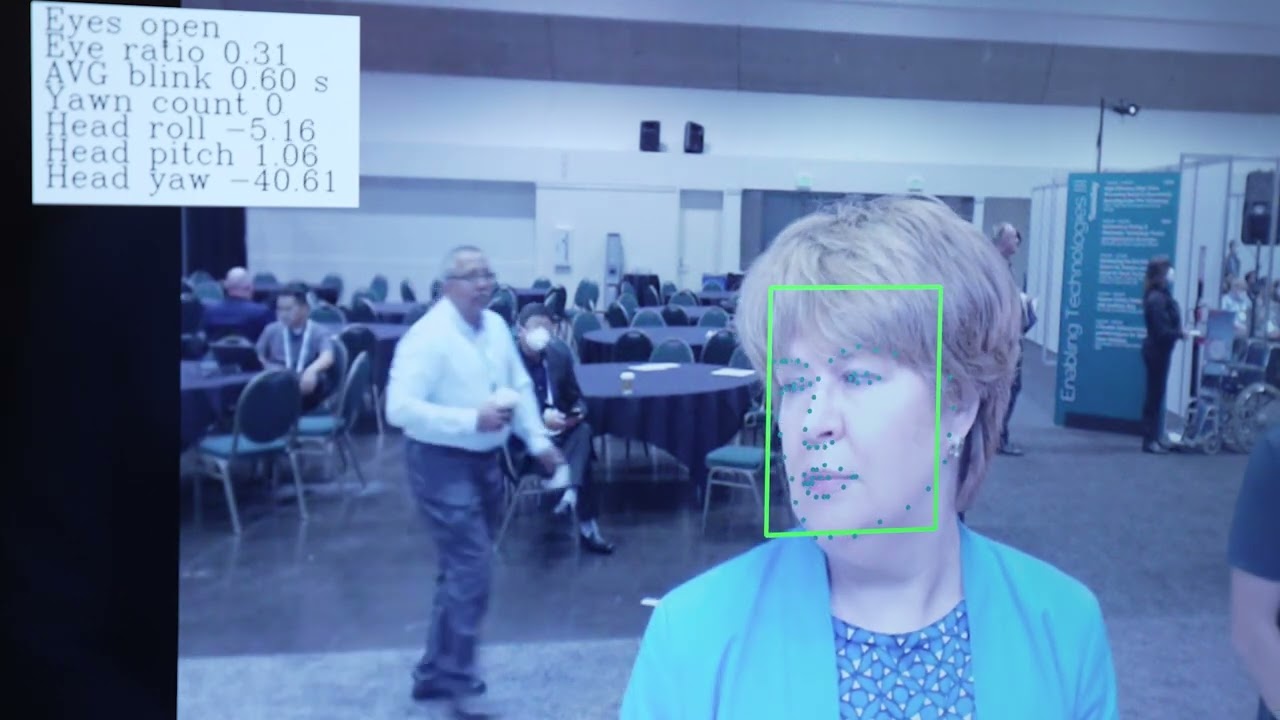

Visidon Demonstration of a Driver Monitoring System Running On a Synopsys ARC EV7x Processor

Vaida Jasulaityte, Business Development Director at Visidon, demonstrates the company’s latest edge AI and vision technologies and products in Synopsys’ booth at the 2022 Embedded Vision Summit. Specifically, Jasulaityte demonstrates Visidon’s Driver Monitoring System (DMS) running on a Synopsys ARC® EV7x Vision Processor. In the demo, Jasulaityte shows how Visidon’s DMS, a vehicle safety system, […]

Sensor Cortek Demonstration of Neural Network-Enhanced Radar Processing on the ARC VPX5 DSP

Fahed Hassenat, COO and Director of Engineering at Sensor Cortek, demonstrates the company’s latest edge AI and vision technologies and products in Synopsys’ booth at the 2022 Embedded Vision Summit. Specifically, Hassenat demonstrates AI-based radar detection for edge applications. In the demonstration, Hassenat describes how Sensor Cortek captures raw radar data and implements a signal […]

“How Transformers are Changing the Direction of Deep Learning Architectures,” a Presentation from Synopsys

Tom Michiels, System Architect for DesignWare ARC Processors at Synopsys, presents the “How Transformers are Changing the Direction of Deep Learning Architectures” tutorial at the May 2022 Embedded Vision Summit. The neural network architectures used in embedded real-time applications are evolving quickly. Transformers are a leading deep learning approach for natural language processing and other […]

Award-Winning Processors Drive Greater Intelligence and Safety into Autonomous Automotive Systems

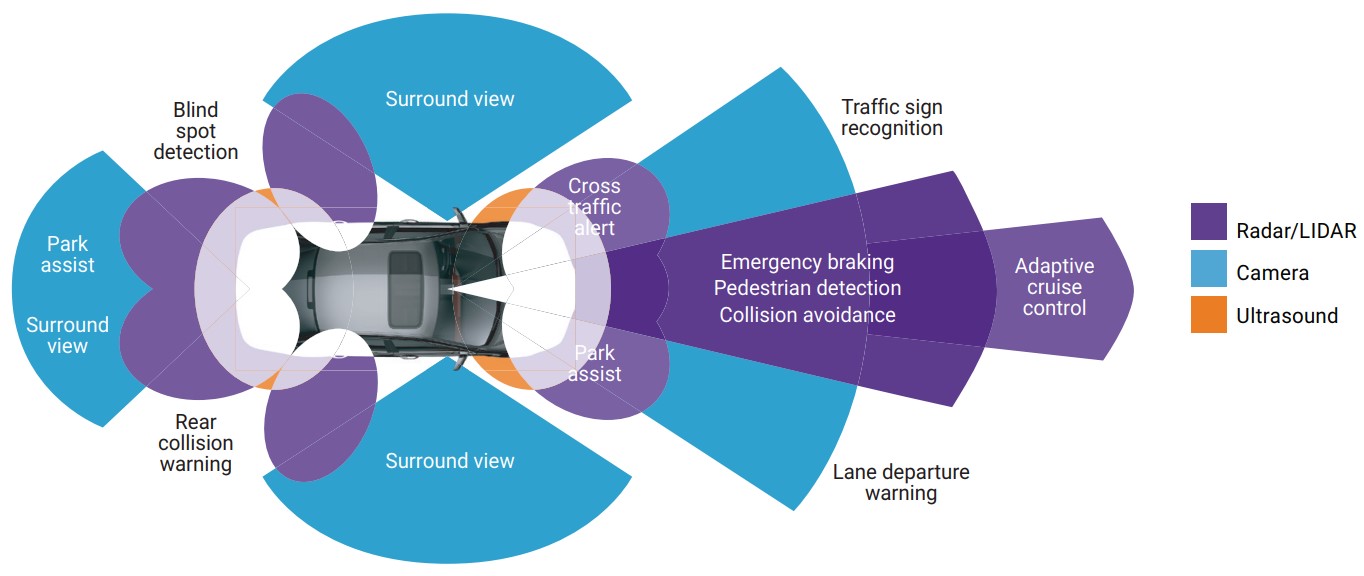

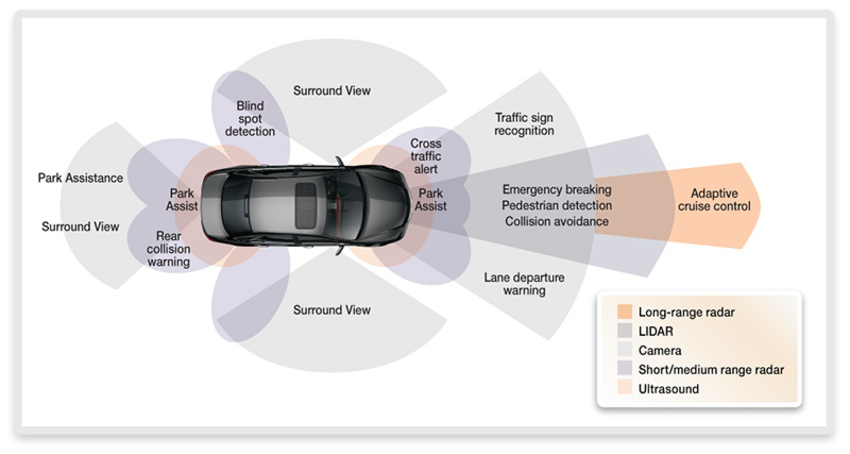

This blog post was originally published at Synopsys’ website. It is reprinted here with the permission of Synopsys. From safety features like collision avoidance to the self-driving cars that are being tested on highways and city streets, artificial intelligence (AI) technologies play an integral role in modern vehicles. Sophisticated sensors and deep-learning algorithms like neural […]

Edge AI and Vision Alliance Announces 2022 Edge AI and Vision Product of the Year Award Winners

Awards Celebrating Innovation and Achievement in Computer Vision and Edge AI. SANTA CLARA, Calif., May 17, 2022 /PRNewswire/ — The Edge AI and Vision Alliance today announced the 2022 winners of the Edge AI and Vision Product of the Year Awards. The Awards celebrate the innovation and achievement of the industry’s leading companies that are […]

2022 Edge AI and Vision Product of the Year Award Winner Showcase: Synopsys (Automotive Solutions)

Synopsys’ DesignWare ARC EV7xFS Processor IP for Functional Safety is the 2022 Edge AI and Vision Product of the Year Award Winner in the Automotive Solutions category. The EV7xFS is a unique multi-core SIMD vector digital signal processing (DSP) solution that combines seamless scalability of computer vision, DSP and AI processing with state-of-the-art safety features […]

Synopsys Introduces Industry’s Highest Performance Neural Processor IP

New DesignWare ARC NPX6 NPU IP Delivers Up to 3,500 TOPS Performance for Automotive, Consumer and Data Center Chip Designs. Highlights of this Announcement: DesignWare ARC NPX6 NPU IP delivers industry-leading performance and power efficiency of 30 TOPS/Watt, featuring up to 96K MACs with enhanced utilization, new sparsity features and new interconnect for scalability. New […]

Synopsys Demonstration of SRGAN Super Resolution on DesignWare ARC EV7x Processors

Liliya Tazieva, Software Engineer at Synopsys, demonstrates the company’s latest edge AI and vision technologies and products at the 2021 Embedded Vision Summit. Specifically, Tazieva demonstrates SRGAN super resolution on Synopsys’ DesignWare ARC EV7x processors. Image super resolution techniques reconstruct a higher-resolution image from a lower-resolution one. Although this can be done with classical vision […]

Synopsys Demonstration of SLAM Acceleration on DesignWare ARC EV7x Processors

Liliya Tazieva, Software Engineer at Synopsys, demonstrates the company’s latest edge AI and vision technologies and products at the 2021 Embedded Vision Summit. Specifically, Tazieva demonstrates simultaneous localization and mapping (SLAM) acceleration on Synopsys’ DesignWare ARC EV7x processors. SLAM creates and updates a map of an unknown environment while at the same time keeping track […]

Upcoming Webinar Explores How to Build and Secure an Edge AI Solution

On December 1, 2021 at 9:00 am PT (noon ET), Alliance Member companies Arm, NXP Semiconductors, Siemens Digital Industries Software and Synopsys, along with Arcturus Networks, will deliver the free webinar “How to Build and Secure an Edge AI Solution from IP Cores, to SoC, to OS, to Algorithm, to Software Applications.” From the […]

Synopsys Advances Processor IP Leadership with New ARC DSP IP Solutions for Low-Power Embedded SoCs

New ARC VPX DSPs Reduce Power and Area Up to Two-Thirds for IoT, AI, Automotive and Voice/Language Processing Designs. Highlights of this Announcement: Synopsys ARC 128-bit VPX2 and 256-bit VPX3 DSP IP are based on the same advanced VLIW/SIMD architecture as the higher-performance 512-bit VPX5, providing greater flexibility for specific application requirements. Portfolio includes safety-enhanced implementations […]

“Case Study: Facial Detection and Recognition for Always-On Applications,” a Presentation from Synopsys

Jamie Campbell, Product Marketing Manager for Embedded Vision IP at Synopsys, presents the “Case Study: Facial Detection and Recognition for Always-On Applications” tutorial at the May 2021 Embedded Vision Summit. Although there are many applications for low-power facial recognition in edge devices, perhaps the most challenging to design are always-on, battery-powered systems that use facial […]

Why In-Memory Computing Will Disrupt Your AI SoC Development

This blog post was originally published at Synopsys’ website. It is reprinted here with the permission of Synopsys. Artificial intelligence (AI) algorithms thirsting for higher performance per watt have driven the development of specific hardware design techniques, including in-memory computing, for system-on-chip (SoC) designs. In-memory computing has predominantly been publicly seen in semiconductor startups looking […]

“Trends in Neural Network Topologies for Vision at the Edge,” a Presentation from Synopsys

Pierre Paulin, Director of R&D for Embedded Vision at Synopsys, presents the “Trends in Neural Network Topologies for Vision at the Edge” tutorial at the September 2020 Embedded Vision Summit. The widespread adoption of deep neural networks (DNNs) in embedded vision applications has increased the importance of creating DNN topologies that maximize accuracy while minimizing […]

Synopsys Online Embedded Vision Sessions: Navigating Intelligent Vision at the Edge

In these online sessions from Synopsys’ recent workshop, you’ll learn about the latest trends in artificial intelligence and computer vision and how to use embedded vision technologies to navigate from concept to successful silicon. You’ll get a deep dive into deep learning, embedded vision, and standards-based programming for automotive, mobile, surveillance, and consumer applications. Below […]

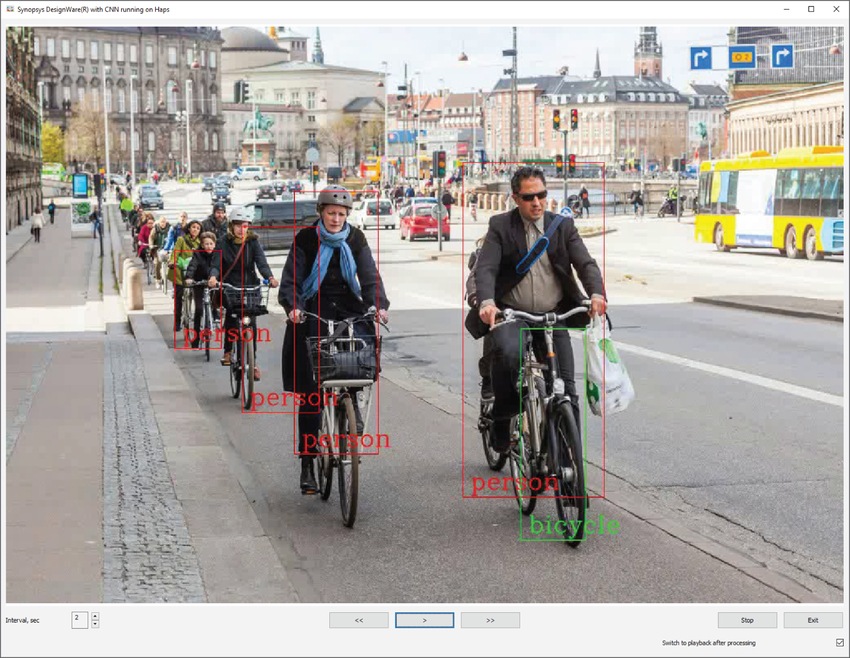

Synopsys Demonstration of Performance Scaling On the ARC EV6x Embedded Vision Processor IP with CNN Engine

Gordon Cooper, Product Marketing Manager at Synopsys, demonstrates the company’s latest embedded vision technologies and products at the 2019 Embedded Vision Summit. Specifically, Cooper discusses deep learning computer vision scaling challenges while demonstrating the DesignWare ARC EV6x with CNN Engine IP running on a HAPS FPGA-based prototyping system. The demo shows the performance changes between […]

Synopsys Demonstration of ASIL-D Ready DesignWare ARC EV6x Embedded Vision Processor IP for Safe Auto SoCs

Gordon Cooper, Product Marketing Manager at Synopsys, demonstrates the company’s latest embedded vision technologies and products at the 2019 Embedded Vision Summit. Specifically, Cooper demonstrates the ARC EV6x Embedded Vision Processor with Safety Enhancement Package (SEP). In the demo, the EV6x is used for drowsiness detection, object detection, and lane detection for safety-critical automotive applications. […]

Synopsys and Inuitive Demonstration of DesignWare ARC EV6x Processor IP in NU4000 SoC

Gordon Cooper, EV Product Marketing Manager at Synopsys, and Dor Zepeniuk, CTO and VP Products at Inuitive, demonstrate the companies’ latest embedded vision technologies and products at the 2019 Embedded Vision Summit. Specifically, Cooper and Zepeniuk demonstrate Inuitive’s NU4000 3D imaging and vision processor. The ARC EV6x Embedded Vision Processor IP, which powers the NU4000, […]

“Five+ Techniques for Efficient Implementation of Neural Networks,” a Presentation from Synopsys

Bert Moons, Hardware Design Architect at Synopsys, presents the “Five+ Techniques for Efficient Implementation of Neural Networks” tutorial at the May 2019 Embedded Vision Summit. Embedding real-time, large-scale deep learning vision applications at the edge is challenging due to their huge computational, memory and bandwidth requirements. System architects can mitigate these demands by modifying deep […]

“Fundamental Security Challenges of Embedded Vision,” a Presentation from Synopsys

Mike Borza, Principal Security Technologist at Synopsys, presents the “Fundamental Security Challenges of Embedded Vision” tutorial at the May 2019 Embedded Vision Summit. As facial recognition, surveillance and smart vehicles become an accepted part of our daily lives, product and chip designers are coming to grips with the business need to secure the data that […]

Infineon and Synopsys Collaborate to Accelerate Artificial Intelligence in Automotive Applications

MOUNTAIN VIEW, Calif. and MUNICH, Sept. 17, 2019 /PRNewswire/ — Artificial intelligence (AI) and neural networks are becoming a key factor in developing safer, smart, and eco-friendly cars. In order to support AI-driven solutions with its future automotive microcontrollers, Infineon Technologies AG (FSE: IFX/OTCQX: IFNNY) has started a collaboration with Synopsys, Inc. (Nasdaq: SNPS). Next-generation AURIX microcontrollers […]

Synopsys Simplifies Automotive SoC Development with New ARC Functional Safety Processor IP

Expanded Portfolio of ISO 26262 ASIL B and ASIL D Compliant DesignWare ARC Processors Accelerate Safety Certification of ADAS, Radar/LiDAR, and Automotive Sensor SoCs. MOUNTAIN VIEW, Calif., Sept. 16, 2019 – Highlights: New Synopsys DesignWare ARC EM22FS, HS4xFS, and EV7xFS processors support ASIL B and ASIL D safety levels to simplify safety-critical automotive SoC development […]

Synopsys’ New Embedded Vision Processor IP Delivers Industry-Leading 35 TOPS Performance for Artificial Intelligence SoCs

DesignWare ARC EV7x Vision Processors with Deep Neural Network Accelerator Provide More Than 4X Performance Increase for AI-Intensive Edge Applications. MOUNTAIN VIEW, Calif., Sept. 16, 2019 – Highlights: DesignWare EV7x Vision Processors’ heterogeneous architecture integrates vector DSP, vector FPU, and neural network accelerator to provide a scalable solution for a wide range of current and […]

Upcoming Conference Details The Latest Technologies in Trendsetting Applications and Markets

On September 19, 2019, Synopsys, an Embedded Vision Alliance member company, will host its annual embedded processor IP event, ARC Processor Summit – Silicon Valley. This free one-day event consists of multiple tracks in which Synopsys experts, ecosystem partners and the ARC user community will deliver technical presentations on a range of topics, including embedded […]

“Making Cars That See — Failure is Not an Option,” a Presentation from Synopsys

Burkhard Huhnke, Vice President of Automotive Strategy for Synopsys, presents the "Making Cars That See—Failure is Not an Option" tutorial at the May 2019 Embedded Vision Summit. Drivers are the biggest source of uncertainty in the operation of cars. Computer vision is helping to eliminate human error and make the roads safer. But 14 years […]

2019 Vision Product of the Year Award Winner Showcase: Synopsys (Processors)

Synopsys' EV6x Embedded Vision Processor with Safety Enhancement Package (SEP) Family is the 2019 Vision Product of the Year Award Winner in the Processors category. Automotive SoC designers must meet stringent requirements for safety and reliability as defined in the ISO 26262 standard to achieve Automotive Safety Integrity Level (ASIL) targets. Furthermore, the IP — […]

Synopsys’ ARC EV6x Vision Processor IP Named Best Processor of the Year by the Embedded Vision Alliance

ASIL D Ready Vision Processor with Safety Enhancement Delivers Highest Level of Functional Safety for Automotive SoCs. MOUNTAIN VIEW, Calif., May 21, 2019 /PRNewswire/ — Synopsys, Inc. (Nasdaq: SNPS) today announced that its DesignWare® ARC® EV6x Vision Processor IP with Safety Enhancement Package (SEP) was named "Best Processor" by the Embedded Vision Alliance as part […]

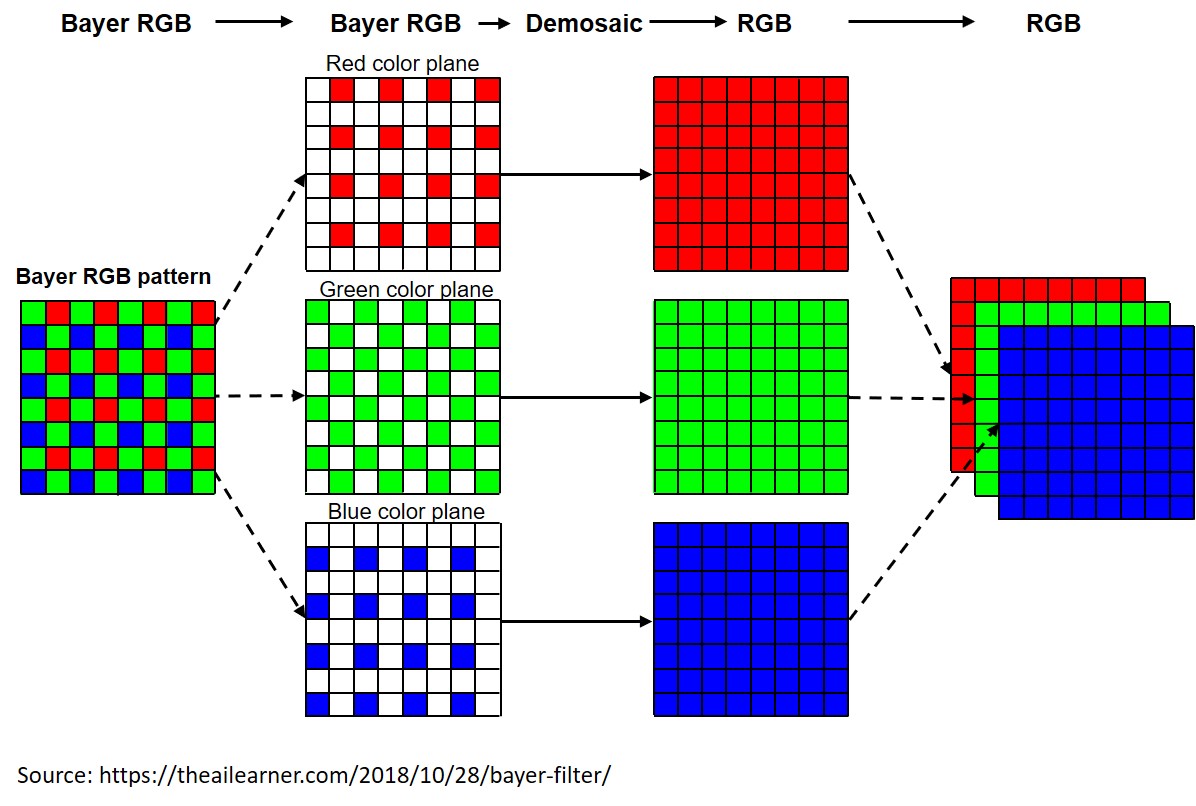

Combining an ISP and Vision Processor to Implement Computer Vision

An ISP (image signal processor) in combination with one or several vision processors can collaboratively deliver more robust computer vision processing capabilities than a vision processor can provide on its own. However, an ISP operating in a computer vision-optimized configuration may differ from one functioning under the historical assumption that its outputs would be intended for […]

Multi-sensor Fusion for Robust Device Autonomy

While visible-light image sensors may be the baseline “one sensor to rule them all” included in all autonomous system designs, they’re not necessarily a panacea on their own. By combining them with other sensor technologies, such as “situational awareness” sensors (standard and high-resolution radar, LiDAR, infrared and UV, ultrasound and sonar, etc.) and “positional awareness” sensors such as […]

Synopsys Demonstration of Inuitive’s NU4000 Artificial Intelligence SoC Based On the DesignWare EV6x

Inuitive delivers a product demonstration at Synopsys' suite at the 2019 CES (Consumer Electronics Show). Specifically, the company demonstrates its NU4000 SoC for 3D imaging and vision, which uses Synopsys’ DesignWare EV6x Embedded Vision Processor IP with a CNN Engine. The EV6x can execute on-chip deep learning tasks for artificial intelligence and vision such as […]

PathPartner Technology and Synopsys Demonstration of Facial Recognition and Verification

Gordon Cooper, product marketing manager at Synopsys, and Praveen G.B., technical lead for DSP at PathPartner Technology, deliver a product demonstration at the May 2018 Embedded Vision Summit. Specifically, Cooper and Praveen demonstrate PathPartner’s facial recognition/verification model, which accounts for changes in illumination, clothes, occlusion, and expression variations. The model can be run on the […]

Inuitive and Synopsys Demonstration of AI Applications with NU4000 SoC

Gordon Cooper, product marketing manager at Synopsys, and Michael Degtyar, field application engineer at Inuitive, deliver a product demonstration at the May 2018 Embedded Vision Summit. Specifically, Cooper and Degtyar demonstrate how Inuitive is using Synopsys’ EV6x Embedded Vision Processor with CNN Engine in Inuitive’s NU4000 multi-core SoC that supports artificial intelligence, 3D imaging, and […]

Synopsys Demonstration of Deep Learning Inference and Sparse Optical Flow

Gordon Cooper, product marketing manager at Synopsys, delivers a product demonstration at the May 2018 Embedded Vision Summit. Specifically, Cooper demonstrates combining deep learning with traditional computer vision by using the DesignWare EV6x Embedded Vision Processor’s vector DSP and CNN engine. The tightly integrated CNN engine executes deep learning inference (using TinyYOLO, but any graph […]

Synopsys Demonstration of Android Neural Network Acceleration with EV6x

Gordon Cooper, product marketing manager, and Mischa Jonker, software engineer, both of Synopsys, deliver a product demonstration at the May 2018 Embedded Vision Summit. Specifically, Cooper and Jonker demonstrate how the DesignWare EV6x Embedded Vision Processor with deep learning can offload application processor tasks to increase performance and reduce power consumption, using an Android Neural […]

Computer Vision for Augmented Reality in Embedded Designs

Augmented reality (AR) and related technologies and products are becoming increasingly popular and prevalent, led by their adoption in smartphones, tablets and other mobile computing and communications devices. While developers of more deeply embedded platforms are also motivated to incorporate AR capabilities in their products, the comparative scarcity of processing, memory, storage, and networking resources […]

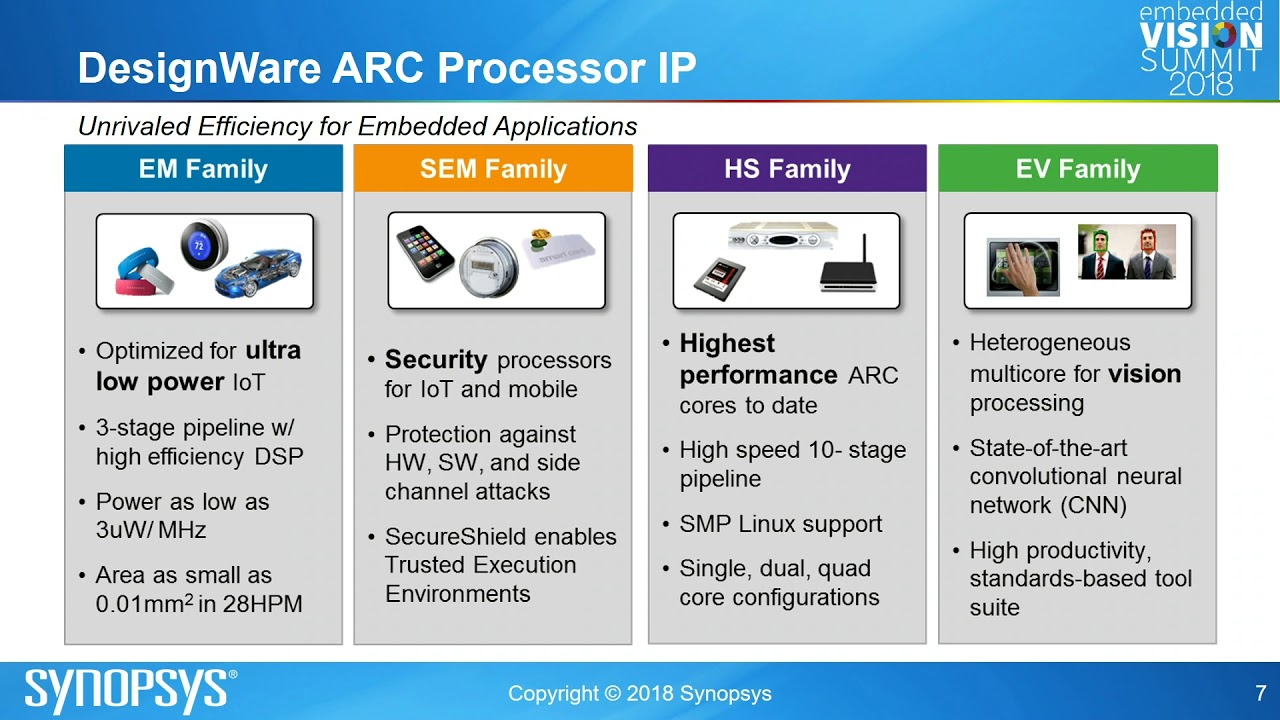

“Designing Smarter, Safer Cars with Embedded Vision Using EV Processor Cores,” a Presentation from Synopsys

Fergus Casey, R&D Director for ARC Processors at Synopsys, presents the “Designing Smarter, Safer Cars with Embedded Vision Using Synopsys EV Processor Cores” tutorial at the May 2018 Embedded Vision Summit. Consumers, the automotive industry and government regulators are requiring greater levels of automotive functional safety with each new generation of cars. Embedded vision, using […]

“New Deep Learning Techniques for Embedded Systems,” a Presentation from Synopsys

Tom Michiels, System Architect for Embedded Vision at Synopsys, presents the “New Deep Learning Techniques for Embedded Systems” tutorial at the May 2018 Embedded Vision Summit. In the past few years, the application domain of deep learning has rapidly expanded. Constant innovation has improved the accuracy and speed of learning and inference. Many techniques are […]

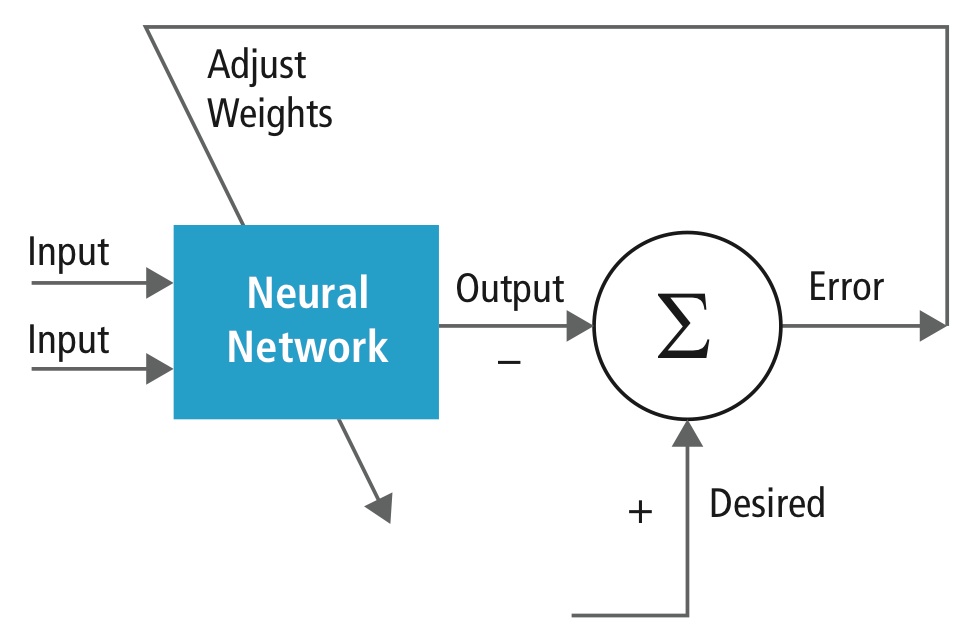

Implementing Vision with Deep Learning in Resource-constrained Designs

DNNs (deep neural networks) have transformed the field of computer vision, delivering superior results on functions such as recognizing objects, localizing objects within a frame, and determining which pixels belong to which object. Even problems like optical flow and stereo correspondence, which had been solved quite well with conventional techniques, are now finding even better […]

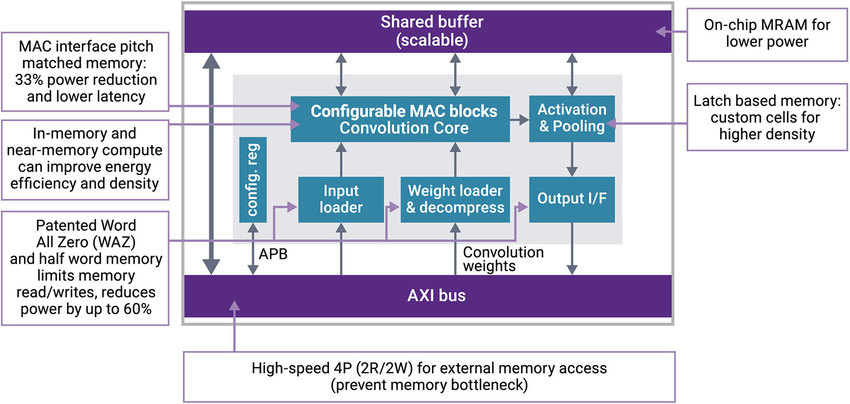

Implementing High-performance Deep Learning Without Breaking Your Power Budget

This article was originally published at Synopsys' website. It is reprinted here with the permission of Synopsys. Examples of applications abound where high-performance, low-power embedded vision processors are used: a mobile phone using face recognition to identify a user, an augmented or mixed reality headset identifying your hands and the layout of your living room […]

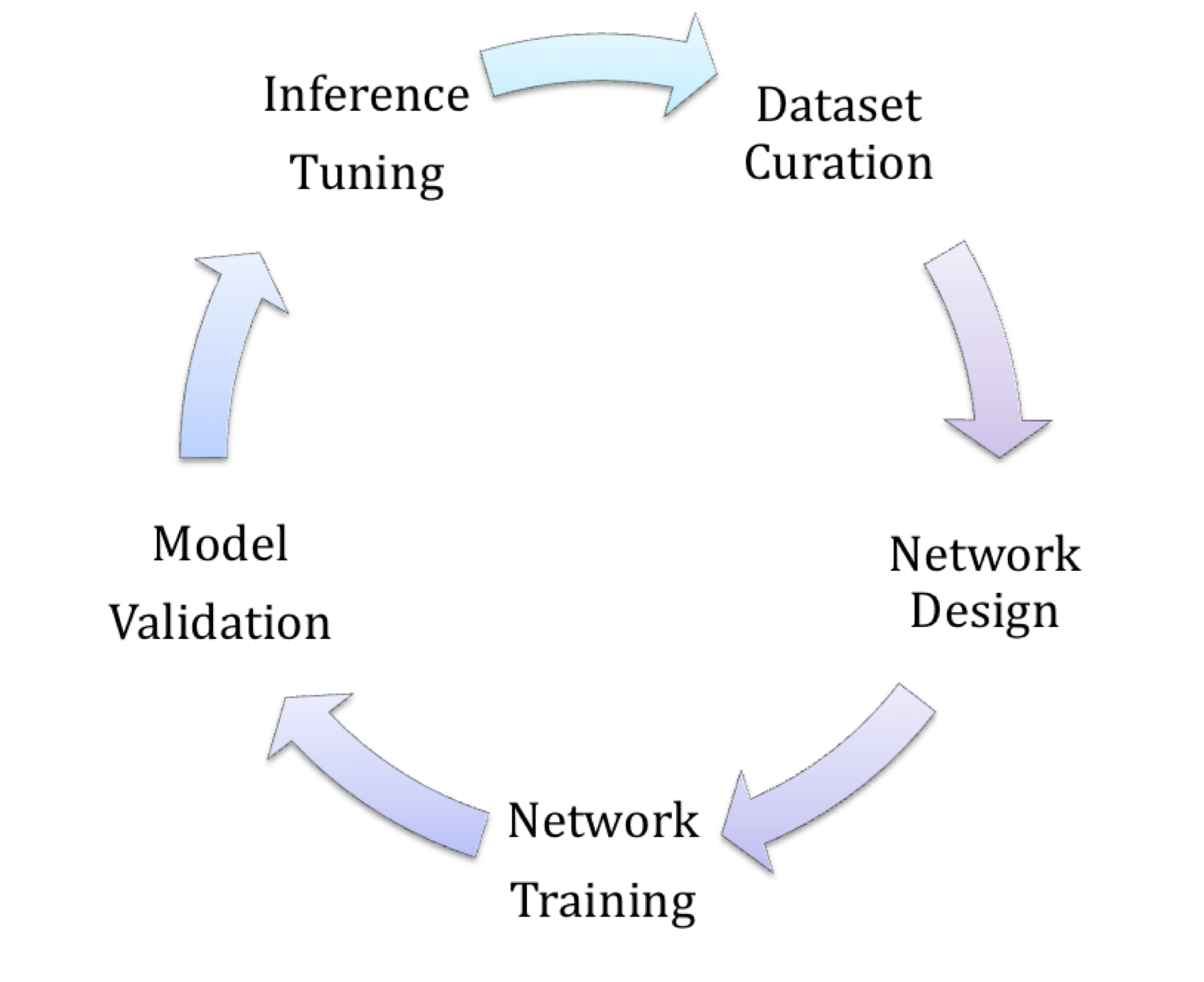

The Evolution of Deep Learning for ADAS Applications

This technical article was originally published at Synopsys' website. It is reprinted here with the permission of Synopsys. Embedded vision solutions will be a key enabler for making automobiles fully autonomous. Giving an automobile a set of eyes – in the form of multiple cameras and image sensors – is a first step, but it […]

Software Frameworks and Toolsets for Deep Learning-based Vision Processing

This article provides both background and implementation-detailed information on software frameworks and toolsets for deep learning-based vision processing, an increasingly popular and robust alternative to classical computer vision algorithms. It covers the leading available software framework options, the root reasons for their abundance, and guidelines for selecting an optimal approach among the candidates for a […]

“Designing Scalable Embedded Vision SoCs from Day 1,” a Presentation from Synopsys

Pierre Paulin, Director of R&D for Embedded Vision at Synopsys, presents the "Designing Scalable Embedded Vision SoCs from Day 1" tutorial at the May 2017 Embedded Vision Summit. Some of the most critical embedded vision design decisions are made early on and affect the design’s ultimate scalability. Will the processor architecture support the needed vision […]

“Moving CNNs from Academic Theory to Embedded Reality,” a Presentation from Synopsys

Tom Michiels, System Architect for Embedded Vision Processors at Synopsys, presents the "Moving CNNs from Academic Theory to Embedded Reality" tutorial at the May 2017 Embedded Vision Summit. In this presentation, you will learn to recognize and avoid the pitfalls of moving from an academic CNN/deep learning graph to a commercial embedded vision design. You […]

Facial Analysis Delivers Diverse Vision Processing Capabilities

Computers can learn a lot about a person from their face – even if they don’t uniquely identify that person. Assessments of age range, gender, ethnicity, gaze direction, attention span, emotional state and other attributes are all now possible at real-time speeds, via advanced algorithms running on cost-effective hardware. This article provides an overview of […]

“Using the OpenCL C Kernel Language for Embedded Vision Processors,” a Presentation from Synopsys

Seema Mirchandaney, Engineering Manager for Software Tools at Synopsys, presents the "Using the OpenCL C Kernel Language for Embedded Vision Processors" tutorial at the May 2016 Embedded Vision Summit. OpenCL C is a programming language that is used to write computation kernels. It is based on C99 and extended to support features such as multiple […]

Deep Learning for Object Recognition: DSP and Specialized Processor Optimizations

Neural networks enable the identification of objects in still and video images with impressive speed and accuracy after an initial training phase. This so-called "deep learning" has been enabled by the combination of the evolution of traditional neural network techniques, with one latest-incarnation example known as a CNN (convolutional neural network), by the steadily increasing […]

“Programming Embedded Vision Processors Using OpenVX,” a Presentation from Synopsys

Pierre Paulin, Senior R&D Director for Embedded Vision at Synopsys, presents the "Programming Embedded Vision Processors Using OpenVX" tutorial at the May 2016 Embedded Vision Summit. OpenVX, a new Khronos standard for embedded computer vision processing, defines a higher level of abstraction for algorithm specification, with the goal of enabling platform and tool innovation in […]

May 2015 Embedded Vision Summit Technical Presentation: “Low-power Embedded Vision: A Face Tracker Case Study,” Pierre Paulin, Synopsys

Pierre Paulin, R&D Director for Embedded Vision at Synopsys, presents the "Low-power Embedded Vision: A Face Tracker Case Study" tutorial at the May 2015 Embedded Vision Summit. The ability to reliably detect and track individual objects or people has numerous applications, for example in the video-surveillance and home entertainment fields. While this has proven to […]

“Tailoring Convolutional Neural Networks for Low-Cost, Low-Power Implementation,” a Presentation From Synopsys

Bruno Lavigueur, Project Leader for Embedded Vision at Synopsys, presents the "Tailoring Convolutional Neural Networks for Low-Cost, Low-Power Implementation" tutorial at the May 2015 Embedded Vision Summit. Deep learning-based object detection using convolutional neural networks (CNN) has recently emerged as one of the leading approaches for achieving state-of-the-art detection accuracy for a wide range of […]

May 2014 Embedded Vision Summit Technical Presentation: “Combining Flexibility and Low-Power in Embedded Vision Subsystems: An Application to Pedestrian Detection,” Bruno Lavigueur, Synopsys

Bruno Lavigueur, Embedded Vision Subsystem Project Leader at Synopsys, presents the "Combining Flexibility and Low-Power in Embedded Vision Subsystems: An Application to Pedestrian Detection" tutorial at the May 2014 Embedded Vision Summit. Lavigueur presents an embedded-mapping and refinement case study of a pedestrian detection application. Starting from a high-level functional description in OpenCV, he decomposes […]

Improved Vision Processors, Sensors Enable Proliferation of New and Enhanced ADAS Functions

This article was originally published at John Day's Automotive Electronics News. It is reprinted here with the permission of JHDay Communications. Thanks to the emergence of increasingly capable and cost-effective processors, image sensors, memories and other semiconductor devices, along with robust algorithms, it's now practical to incorporate computer vision into a wide range of embedded […]

October 2013 Embedded Vision Summit Technical Presentation: “Designing a Multi-Core Architecture Tailored for Pedestrian Detection Algorithms,” Tom Michiels, Synopsys

Tom Michiels, R&D Manager at Synopsys, presents the "Designing a Multi-Core Architecture Tailored for Pedestrian Detection Algorithms" tutorial within the "Algorithms and Implementations" technical session at the October 2013 Embedded Vision Summit East. Pedestrian detection is an important function in a wide range of applications, including automotive safety systems, mobile applications, and industrial automation. A […]

Another Upcoming Synopsys Embedded Vision Seminar: Application-Specific Processor Design and Prototyping

Following up on a recent news posting regarding an upcoming Japan-based event, Embedded Vision Alliance member company Synopsys also has a U.S.-based seminar coming up in the near future. On Wednesday, May 29, from 9:30am – 2:30pm in Mountain View, California, the company will present a seminar exploring application-specific processor (ASIP) design and prototyping. ASIPs, […]

April 2013 Embedded Vision Summit Technical Presentation: “Lessons Learned: FPGA Prototyping of a Processor-Based Embedded Vision Application,” Markus Wloka, Synopsys

Markus Wloka, R&D Director for System-Level Solutions at Synopsys, presents the "Lessons Learned: FPGA Prototyping of a Processor-Based Embedded Vision Application" tutorial within the "Developing Vision Software, Accelerators and Systems" technical session at the April 2013 Embedded Vision Summit. This presentation covers the steps of building a programmable vision system and highlights the importance of […]

Upcoming Synopsys Seminar Showcases DSP Development for Embedded Vision Processing

On Friday, May 31, from 10:00am to 6:30pm (Tokyo, Japan), Embedded Vision Alliance member Synopsys will present a seminar exploring application-specific processor (ASIP) design. According to Synopsys, ASIPs are ideal for embedded vision applications where real-time performance, low power and programmability are required. Please join Synopsys for this full-day seminar, beginning with a keynote […]

The Synopsys Vision Processor Starter Kit: Audition An Online Webinar To Learn More About It

Bo Wu, the Technical Marketing Manager of Synopsys' Systems Group, is a name that is hopefully already familiar to many of you. Last September at the Embedded Vision Summit in Boston, Massachusetts, he delivered a presentation on "Optimization and Acceleration for OpenCV-Based Embedded Vision Applications" as well as representing Synopsys in a follow-on panel discussion […]

September 2012 Embedded Vision Summit Presentation: “Optimization and Acceleration for OpenCV-Based Embedded Vision Applications,” Bo Wu, Synopsys

Bo Wu, Technical Marketing Manager at Synopsys, presents the "Optimization and Acceleration for OpenCV-Based Embedded Vision Applications" tutorial within the "Using Tools, APIs and Design Techniques for Embedded Vision" technical session at the September 2012 Embedded Vision Summit.

September 2012 Embedded Vision Summit Panel: Avnet, CogniVue, and Synopsys

Jeff Bier, Embedded Vision Alliance Founder, moderates a discussion panel composed of the presenters in the "Embedded Vision Applications and Algorithms" technical session at the September 2012 Embedded Vision Summit: Mario Bergeron from Avnet, Simon Morris from CogniVue, and Bo Wu from Synopsys. The panelists discuss topics such as the ability to derive depth approximation […]

Synopsys And Embedded Vision: A Multi-Faceted Product Line

If you've looked closely at the Embedded Vision Alliance member page since earlier today, you might have noticed two new entries: Synopsys and VanGogh Imaging. Welcome to both companies! Jeff Bier, Jeremy Giddings and I had the opportunity to meet with several Synopsys representatives a few days ago to better understand the company's technology and […]