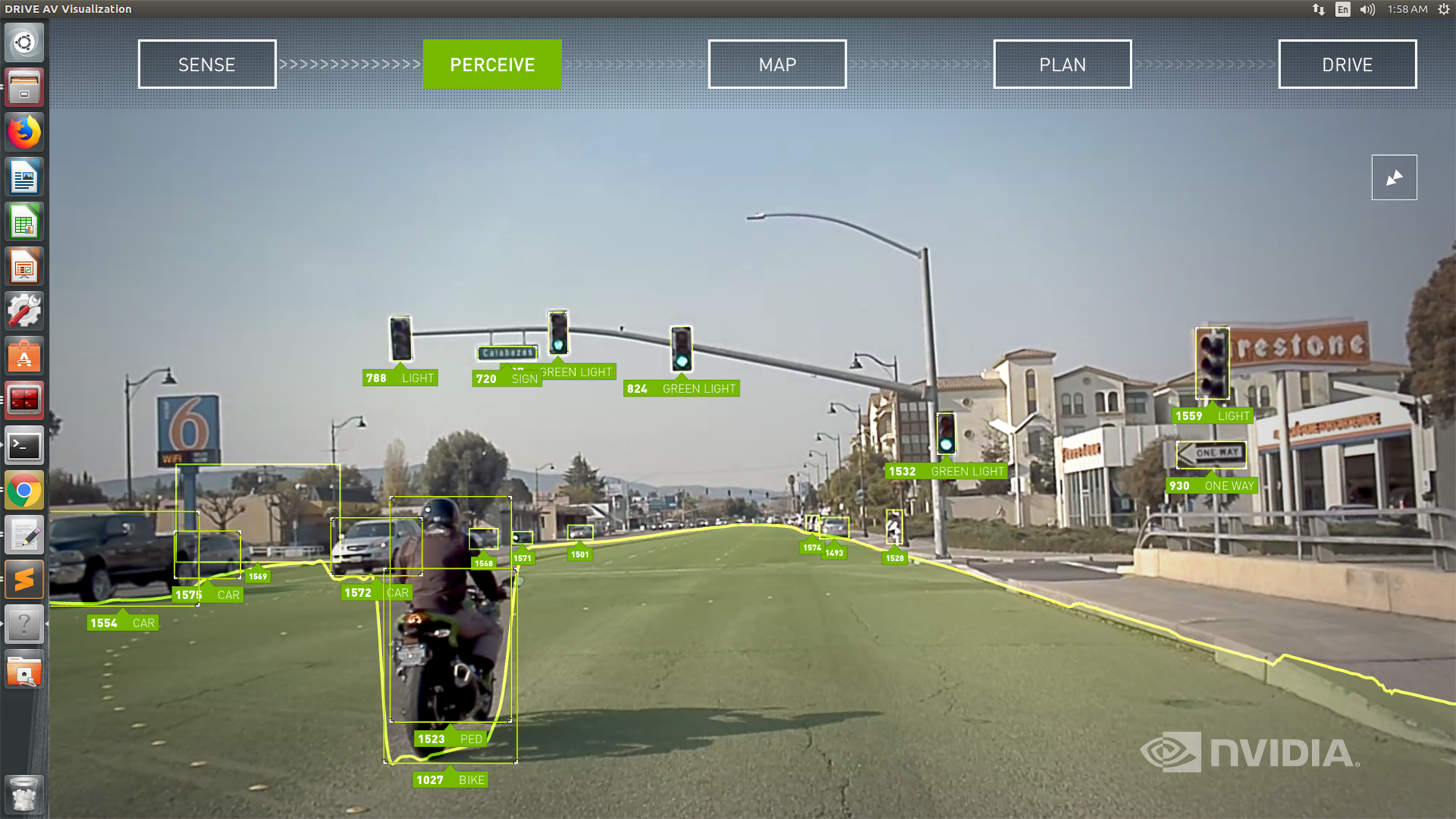

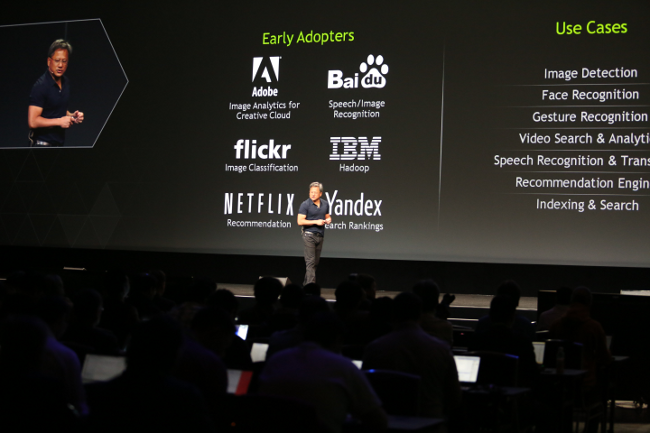

NVIDIA invented the highly parallel graphics processing unit (GPU) in 1999. Since then, NVIDIA has set new standards in visual computing with interactive graphics on products ranging from smartphones and tablets to supercomputers and automobiles. Because computer vision is extremely computationally intensive, it is well suited to parallel processing, and NVIDIA GPUs have led the way in accelerating computer vision applications. In embedded vision, NVIDIA is focused on delivering solutions for consumer electronics, driver assistance systems, and national defense programs. NVIDIA's expertise in visual computing, combined with the power of its parallel processors, delivers exceptional results.

NVIDIA

Recent Content by Company

The Building Blocks of AI: Decoding the Role and Significance of Foundation Models

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. These neural networks, trained on large volumes of data, power the applications driving the generative AI revolution. Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible, […]

AI Decoded: Demystifying Large Language Models, the Brains Behind Chatbots

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Explore what LLMs are, why they matter and how to use them. Editor’s note: This post is part of our AI Decoded series, which aims to demystify AI by making the technology more accessible, while showcasing new […]

AI Decoded From GTC: The Latest Developer Tools and Apps Accelerating AI on PC and Workstation

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Next Chat with RTX features showcased, TensorRT-LLM ecosystem grows, AI Workbench general availability, and NVIDIA NIM microservices launched. Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more […]

AI Decoded: Demystifying AI and the Hardware, Software and Tools That Power It

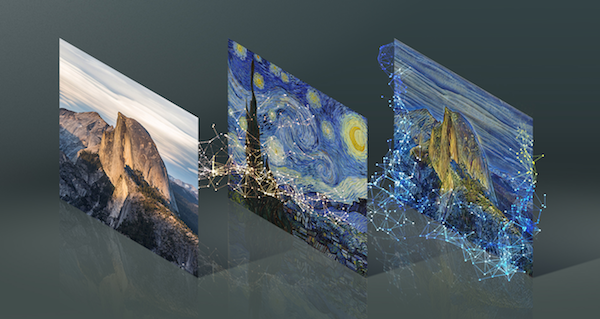

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. RTX AI PCs and workstations deliver exclusive AI capabilities and peak performance for gamers, creators, developers and everyday PC users. With the 2018 launch of RTX technologies and the first consumer GPU built for AI — GeForce […]

Unlocking Peak Generations: TensorRT Accelerates AI on RTX PCs and Workstations

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. TensorRT extension for Stable Diffusion WebUI now supports ControlNets, performance showcased in new benchmark. Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible, and which showcases […]

Calculating Video Quality Using NVIDIA GPUs and VMAF-CUDA

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Video quality metrics are used to evaluate the fidelity of video content. They provide a consistent quantitative measurement to assess the performance of the encoder. Peak signal-to-noise ratio (PSNR): A long-established quality metric that compares the pixel […]
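
As a rough illustration of the PSNR metric mentioned above, the sketch below uses the standard definition PSNR = 10·log10(MAX² / MSE), where MSE is the mean squared error between corresponding reference and distorted pixels and MAX is the largest possible pixel value (255 for 8-bit video). It is a minimal CPU sketch, not the VMAF-CUDA implementation; the function name `psnr` and the representation of frames as flat lists of pixel values are assumptions for illustration.

```python
import math


def psnr(reference, distorted, max_value=255):
    """Hypothetical helper: PSNR in dB between two equal-length pixel sequences."""
    if not reference or len(reference) != len(distorted):
        raise ValueError("frames must be non-empty and the same length")
    # Mean squared error over corresponding pixel pairs
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        # Identical frames: the noise term is zero, so PSNR is unbounded
        return math.inf
    return 10 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    ref = [52, 55, 61, 66]
    dist = [53, 55, 60, 66]
    print(f"PSNR: {psnr(ref, dist):.2f} dB")
```

Higher values indicate the distorted frame is closer to the reference; because PSNR only compares pixels, it does not model perceived quality the way VMAF aims to.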

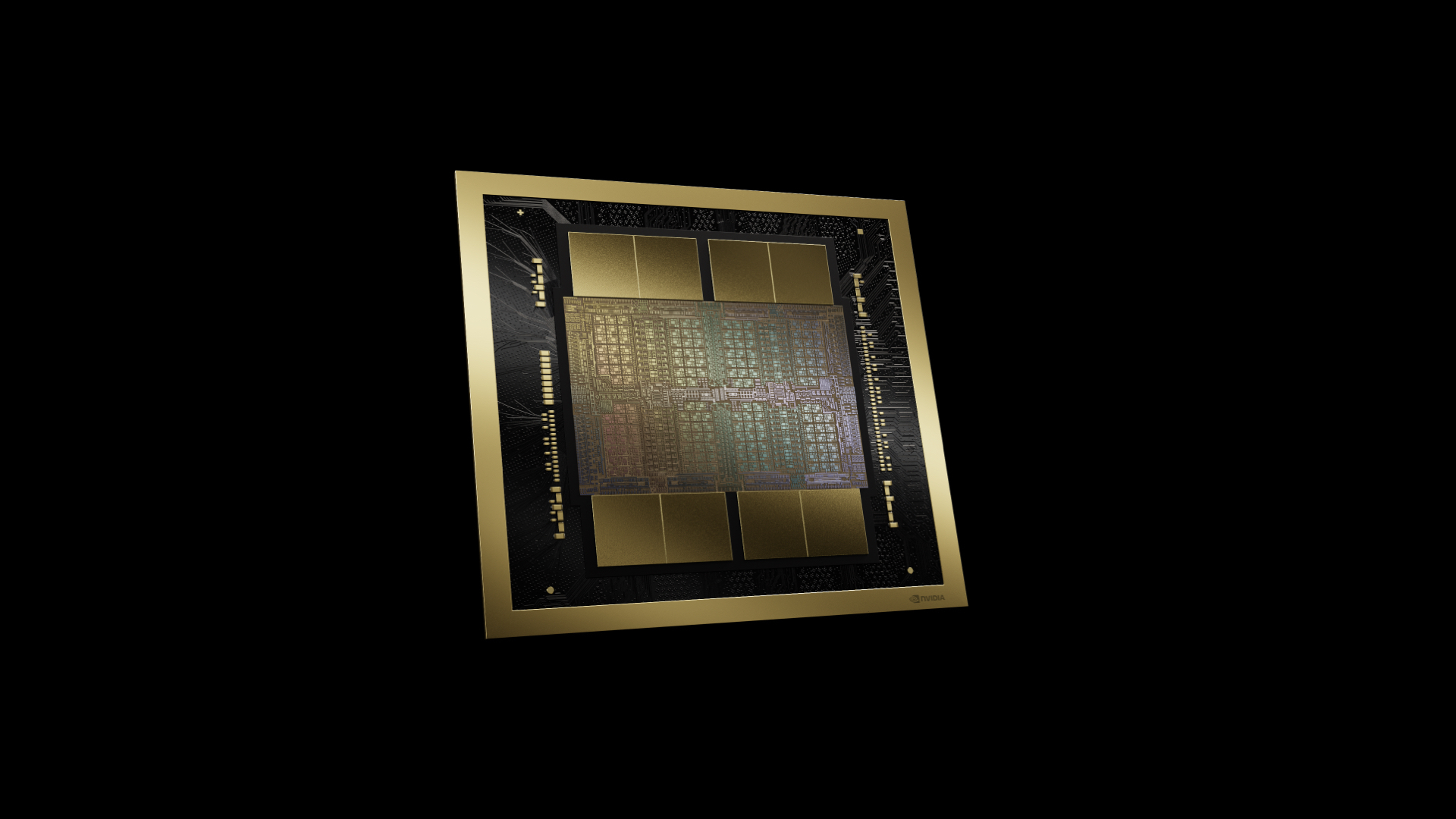

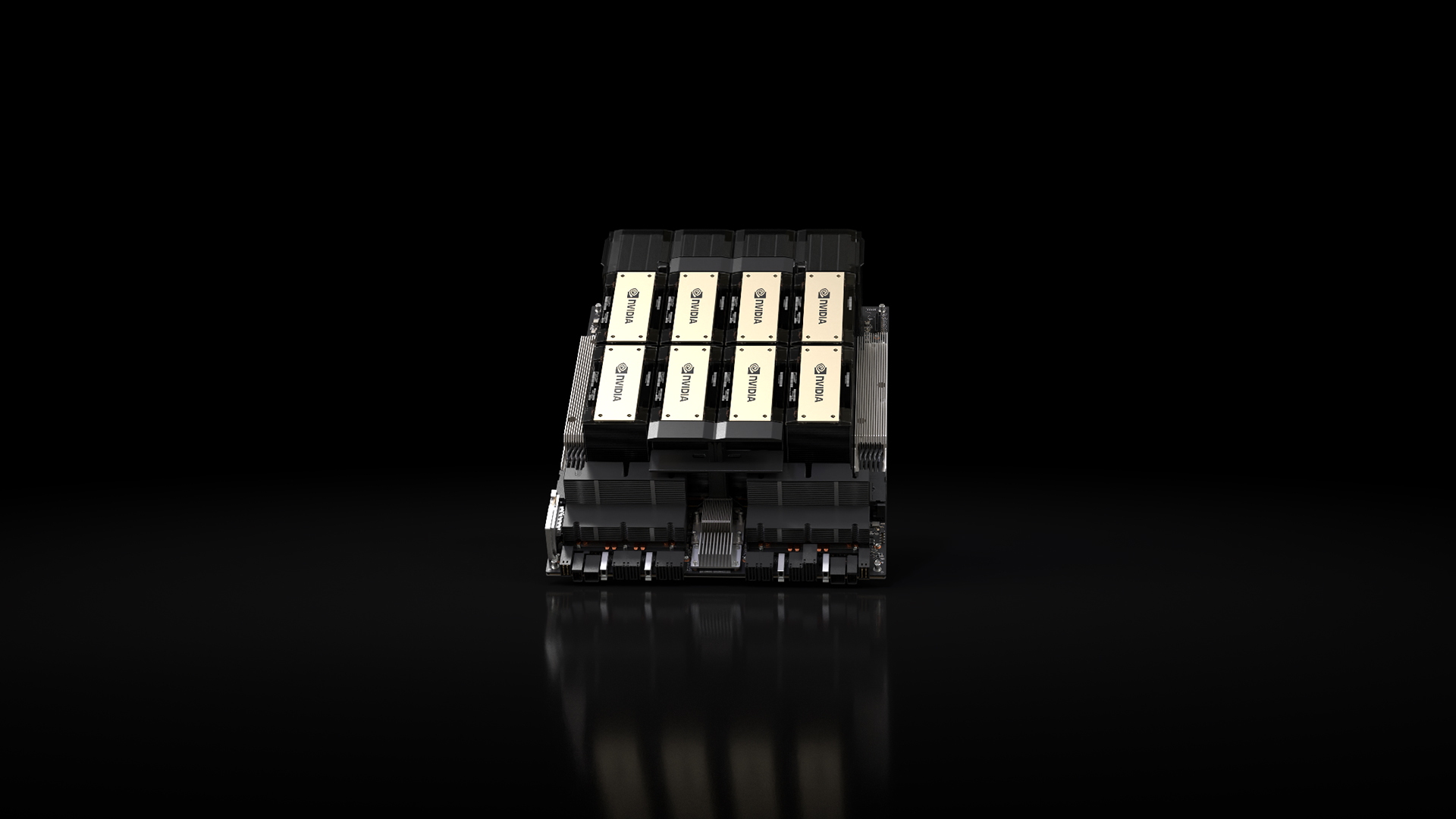

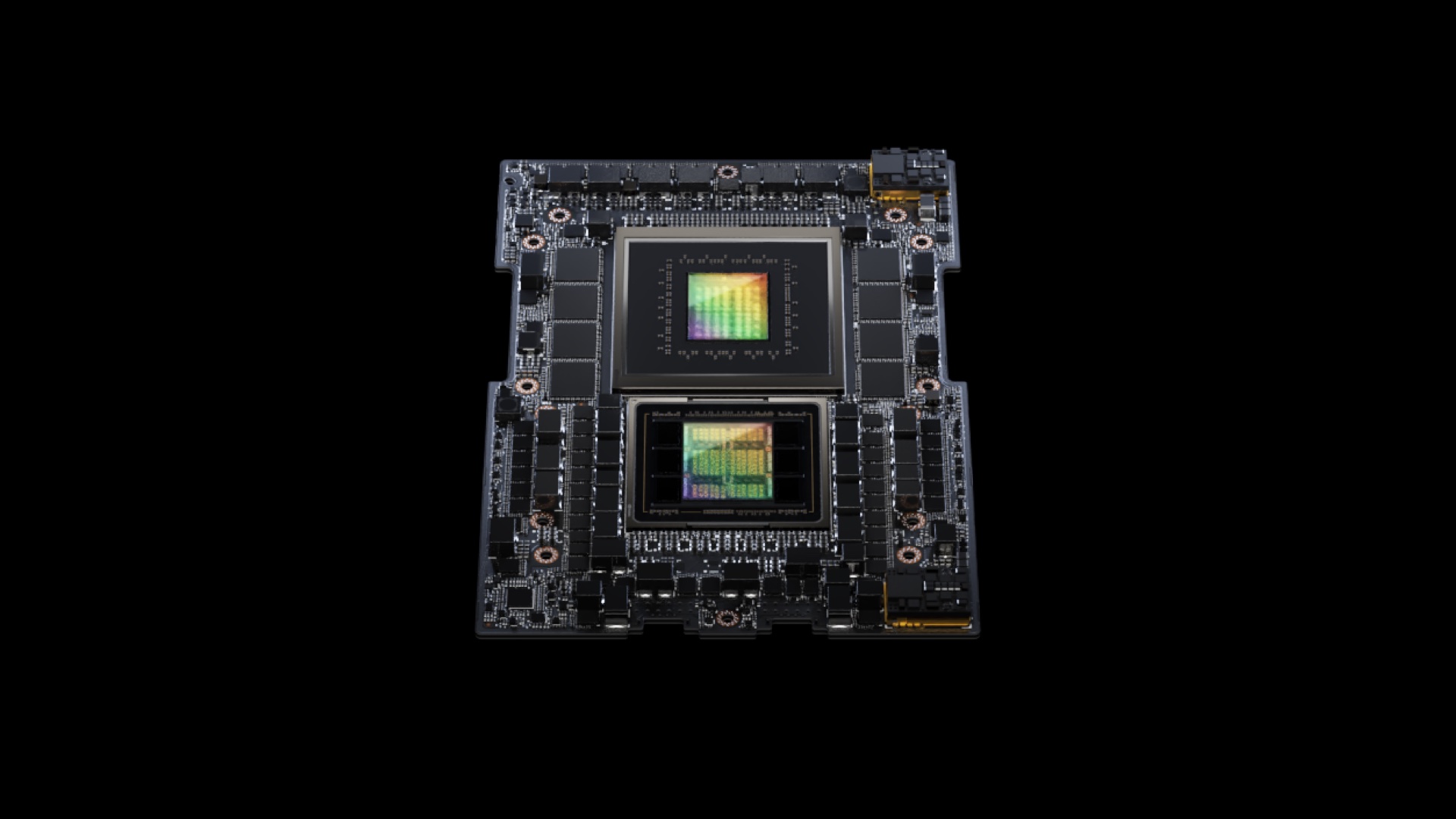

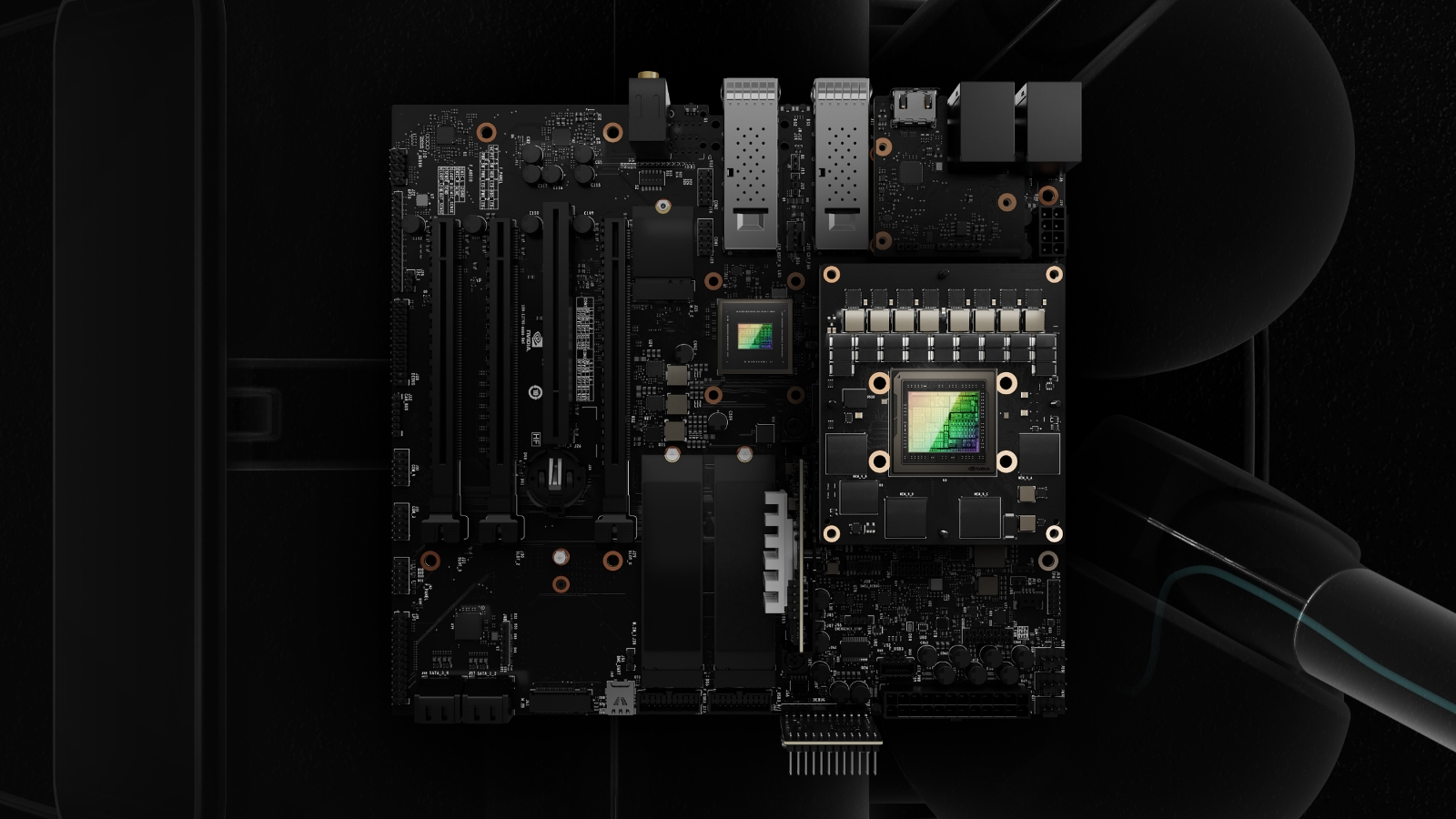

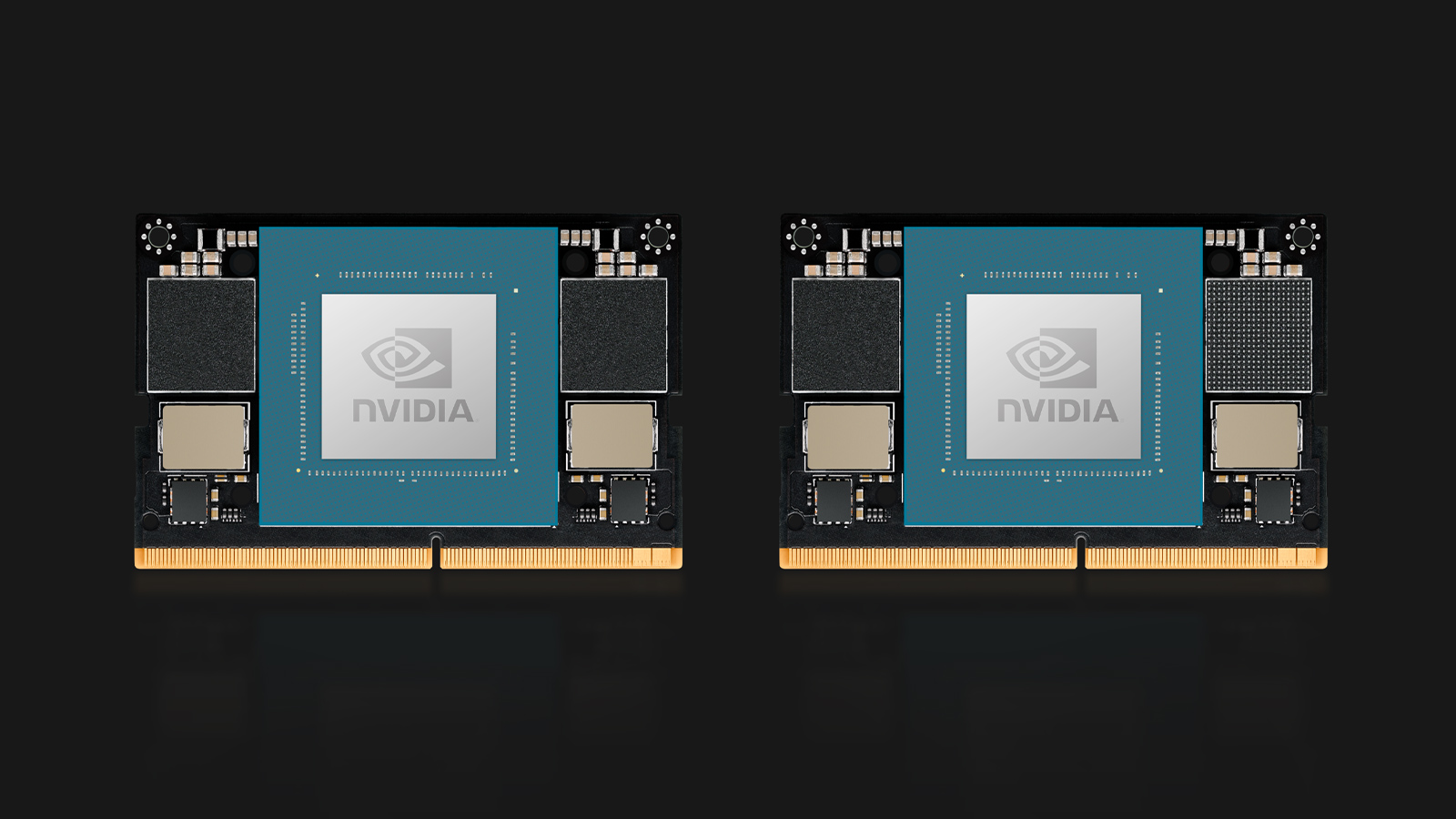

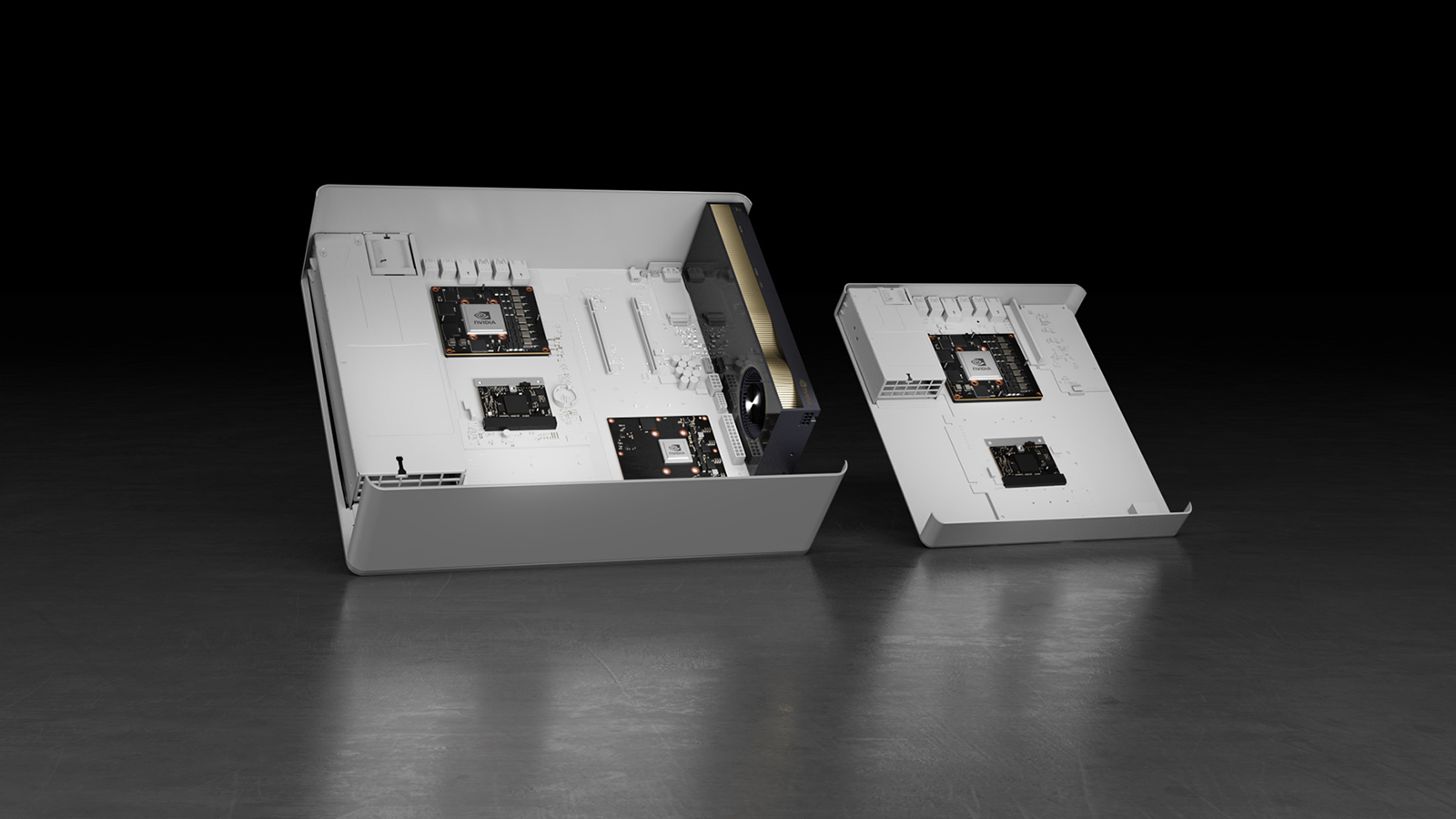

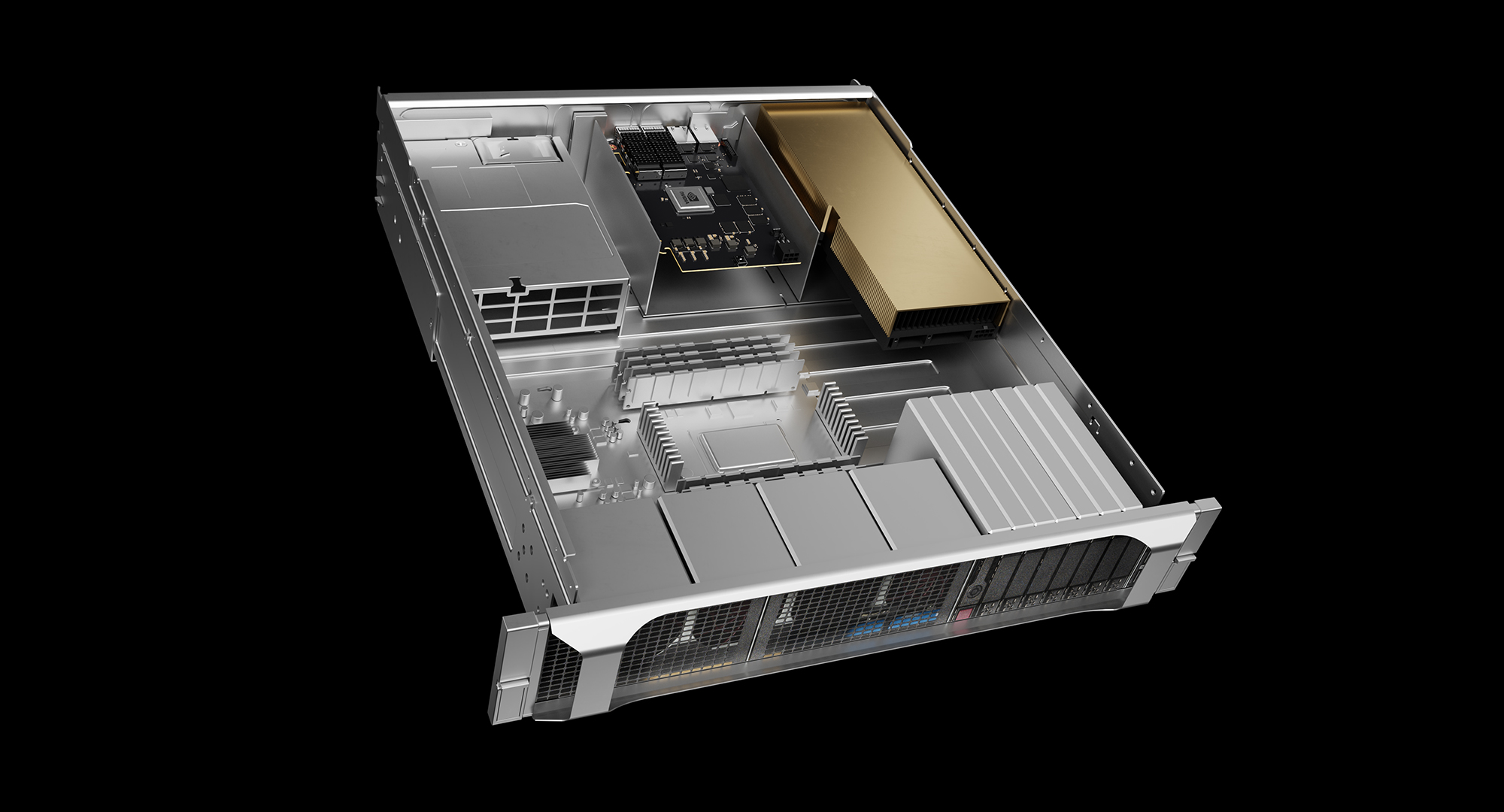

NVIDIA Blackwell Platform Arrives to Power a New Era of Computing

NVIDIA Blackwell powers a new era of computing, enabling organizations everywhere to build and run real-time generative AI on trillion-parameter large language models. New Blackwell GPU, NVLink and Resilience Technologies Enable Trillion-Parameter-Scale AI Models; New Tensor Cores and TensorRT-LLM Compiler Reduce LLM Inference Operating Cost and Energy by up to 25x; New Accelerators Enable […]

A One-stop Solution for Digital Skin Analyzers

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. In this blog, we are exploring types of skin analyzers prevalent in the industry and giving insights on the kind of cameras that should be used to get the best results. In the skincare industry, […]

ProHawk AI Unveils AI Computer Vision Solutions at NVIDIA GTC

Sponsoring the largest technology and AI events, to be held March 18-21 in San Jose, California; unveiling ProHawk’s edge-to-cloud solutions embedded across the suite of NVIDIA platforms; also demonstrating integrated solutions with BCD, Dedicated Computing, Network Optix, and Onyx Healthcare. LAKE MARY, FLORIDA, March 12, 2024 – ProHawk Technology Group (ProHawk AI), a […]

Join e-con Systems at NVIDIA GTC 2024

Experience Our Cutting-Edge Camera Solutions at Booth 341! e-con Systems is attending NVIDIA GTC 2024, a premier global AI event, from March 18-21, 2024, at the San Jose Convention Center. Drop by Booth 341 to see firsthand how e-con Systems™, a reputed NVIDIA Elite Partner, leads the way in providing top-tier cameras for the NVIDIA […]

Detecting Real-time Waste Contamination Using Edge Computing and Video Analytics

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. The past few decades have witnessed a surge in rates of waste generation, closely linked to economic development and urbanization. This escalation in waste production poses substantial challenges for governments worldwide in terms of efficient processing and […]

ProHawk Gains NVIDIA Preferred Partner Status for its AI-enabled Computer Vision Solutions

ProHawk solutions validated in NVIDIA’s labs over the past 18 months, and now embedded with NVIDIA Metropolis, Holoscan, and Jetson platforms, with more integrations coming. LAKE MARY, FLORIDA, February 27, 2024 — ProHawk Technology Group (ProHawk AI), a leading AI-enabled computer vision company, today announced that it has been selected as a Preferred partner within […]

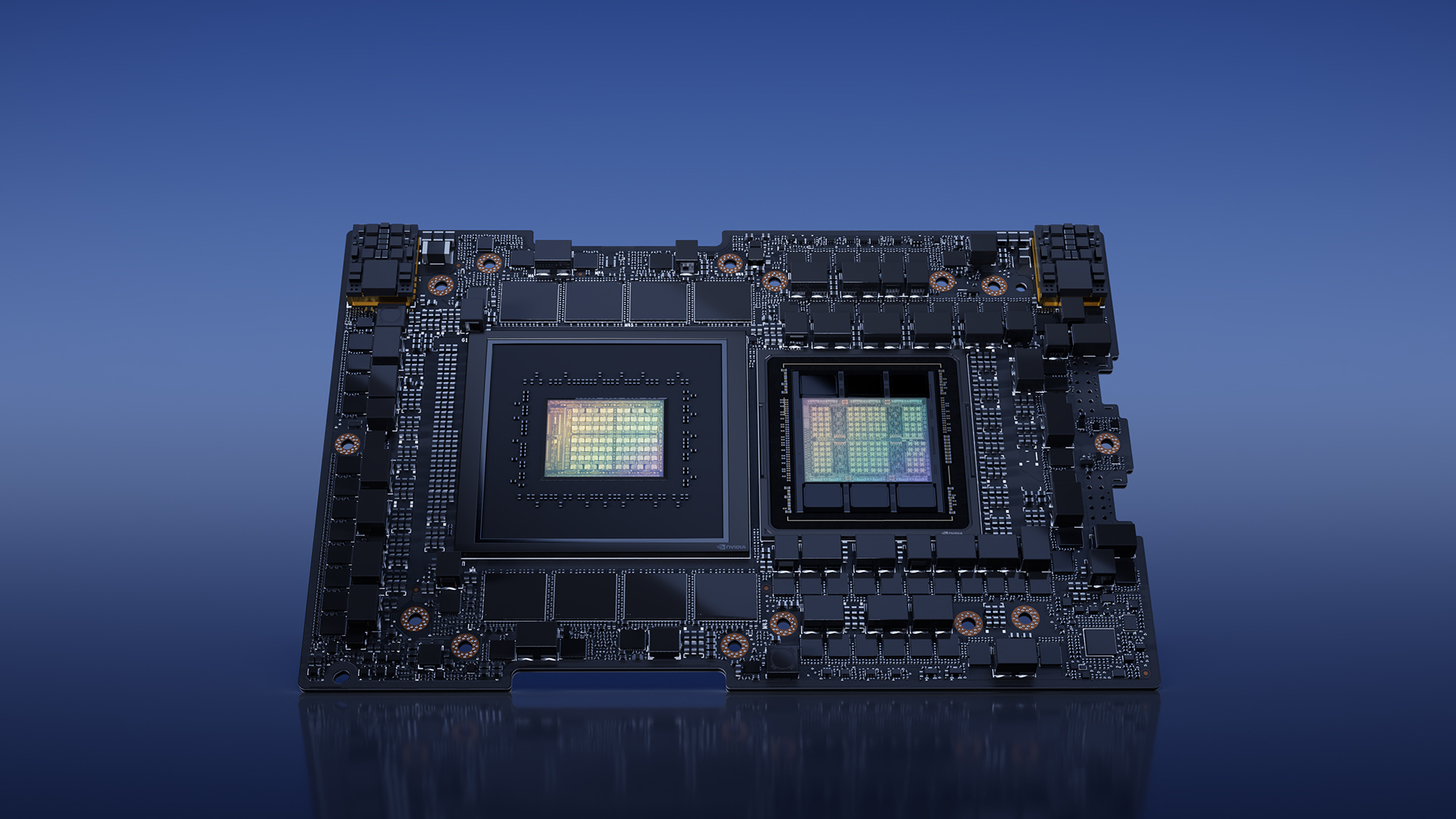

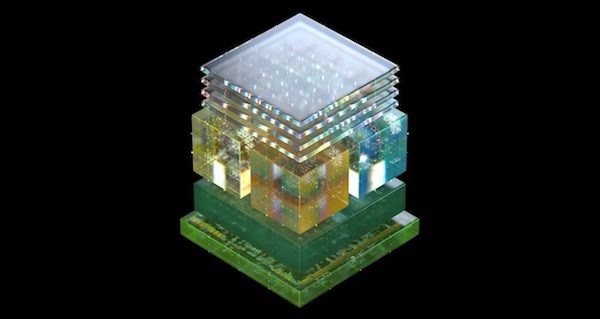

Micron Commences Volume Production of Industry-leading HBM3E Solution to Accelerate the Growth of AI

Micron HBM3E helps reduce data center operating costs by consuming about 30% less power than competing HBM3E offerings. BOISE, Idaho, Feb. 26, 2024 (GLOBE NEWSWIRE) — Micron Technology, Inc. (Nasdaq: MU), a global leader in memory and storage solutions, today announced it has begun volume production of its HBM3E (High Bandwidth Memory 3E) solution. Micron’s 24GB 8H […]

NVIDIA RTX 500 and 1000 Professional Ada Generation Laptop GPUs Drive AI-enhanced Workflows From Anywhere

Thin and light designs deliver advanced AI, compute and graphics horsepower for professionals on the go. With generative AI and hybrid work environments becoming the new standard, nearly every professional, whether a content creator, researcher or engineer, needs a powerful, AI-accelerated laptop to help them tackle their industry’s toughest challenges, even on the go. […]

Shining Brighter Together: Google’s Gemma Optimized to Run on NVIDIA GPUs

New open language models from Google accelerated by TensorRT-LLM across NVIDIA AI platforms, including local RTX AI PCs. NVIDIA, in collaboration with Google, today launched optimizations across all NVIDIA AI platforms for Gemma, Google’s state-of-the-art new lightweight 2-billion- and 7-billion-parameter open language models that can be run anywhere, reducing costs and […]

Benchmarking Camera Performance on Your Workstation with NVIDIA Isaac Sim

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Robots are typically equipped with cameras. When designing a digital twin simulation, it’s important to replicate its performance in a simulated environment accurately. However, to make sure the simulation runs smoothly, it’s crucial to check the performance […]

Ecosystem Collaboration Drives New AMBA Specification for Chiplets

This blog post was originally published at Arm’s website. It is reprinted here with the permission of Arm. AMBA is being extended to the chiplets market with the new CHI C2C specification. As Arm’s EVP and Chief Architect Richard Grisenthwaite said in this blog, Arm is collaborating across the ecosystem on standards to enable a […]

NVIDIA RTX 2000 Ada Generation GPU Brings Performance, Versatility for Next Era of AI-accelerated Design and Visualization

Latest RTX technology delivers cost-effective package for designers, developers, engineers, and embedded and edge applications. Generative AI is driving change across industries — and to take advantage of its benefits, businesses must select the right hardware to power their workflows. The new NVIDIA RTX 2000 Ada Generation GPU delivers the latest AI, graphics and compute […]

Free Webinar Explores How to Accelerate Edge AI Development With Microservices For NVIDIA Jetson

On March 5, 2024 at 8 am PT (11 am ET), NVIDIA senior product manager Chintan Shah will present the free one-hour webinar “Accelerate Edge AI Development With Microservices For NVIDIA Jetson,” organized by the Edge AI and Vision Alliance. Here’s the description, from the event registration page: Building vision AI applications for the edge […]

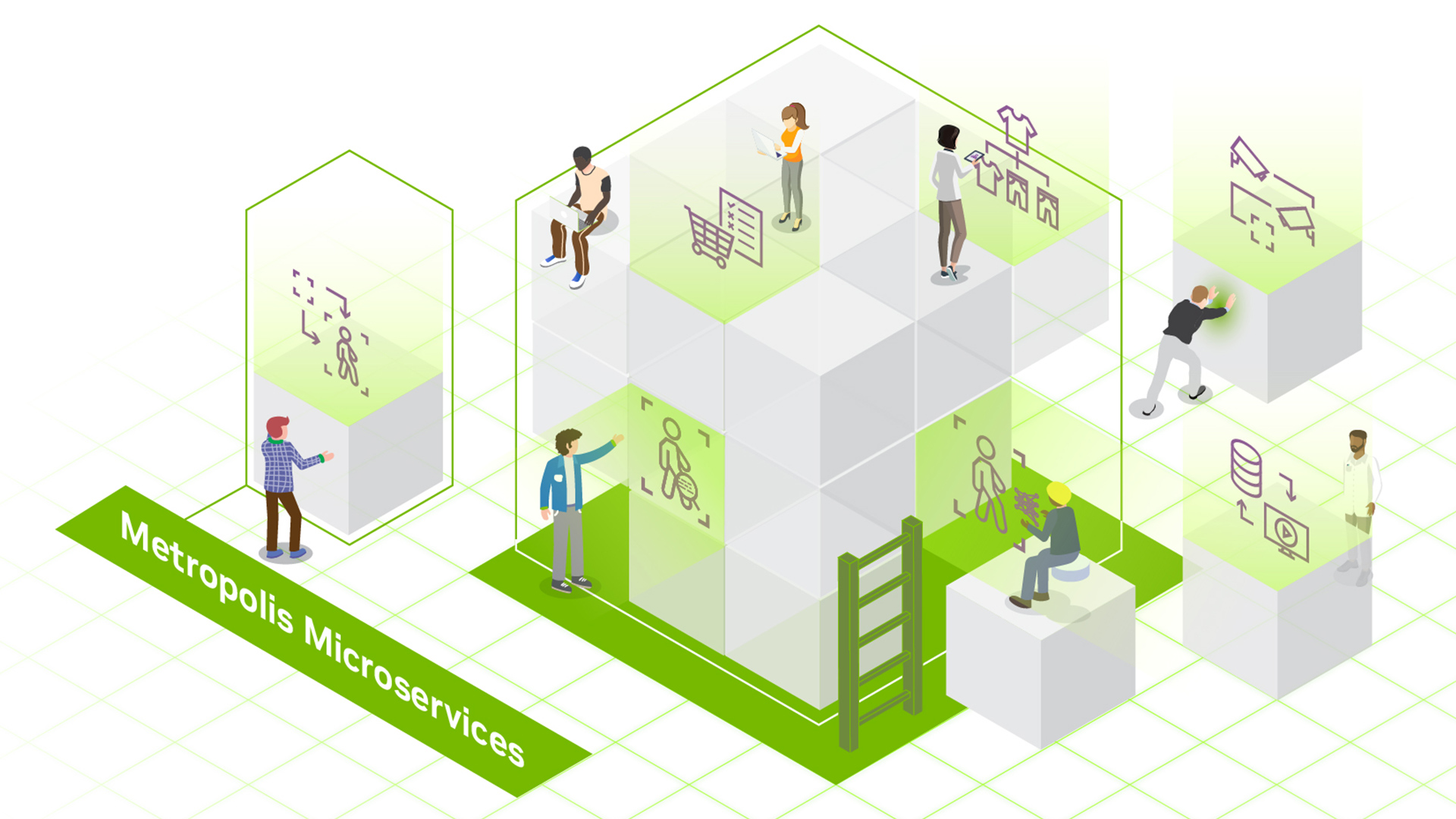

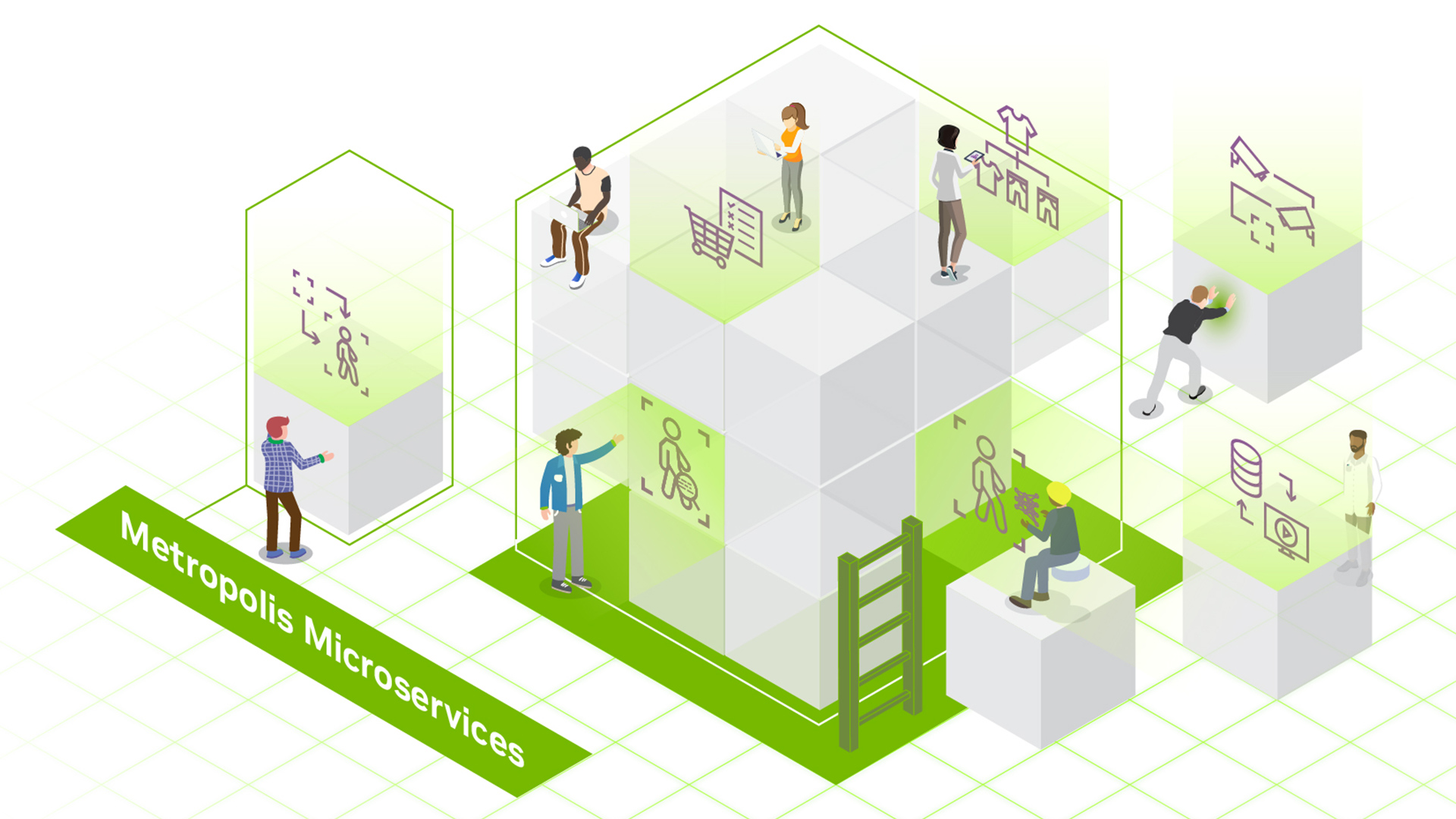

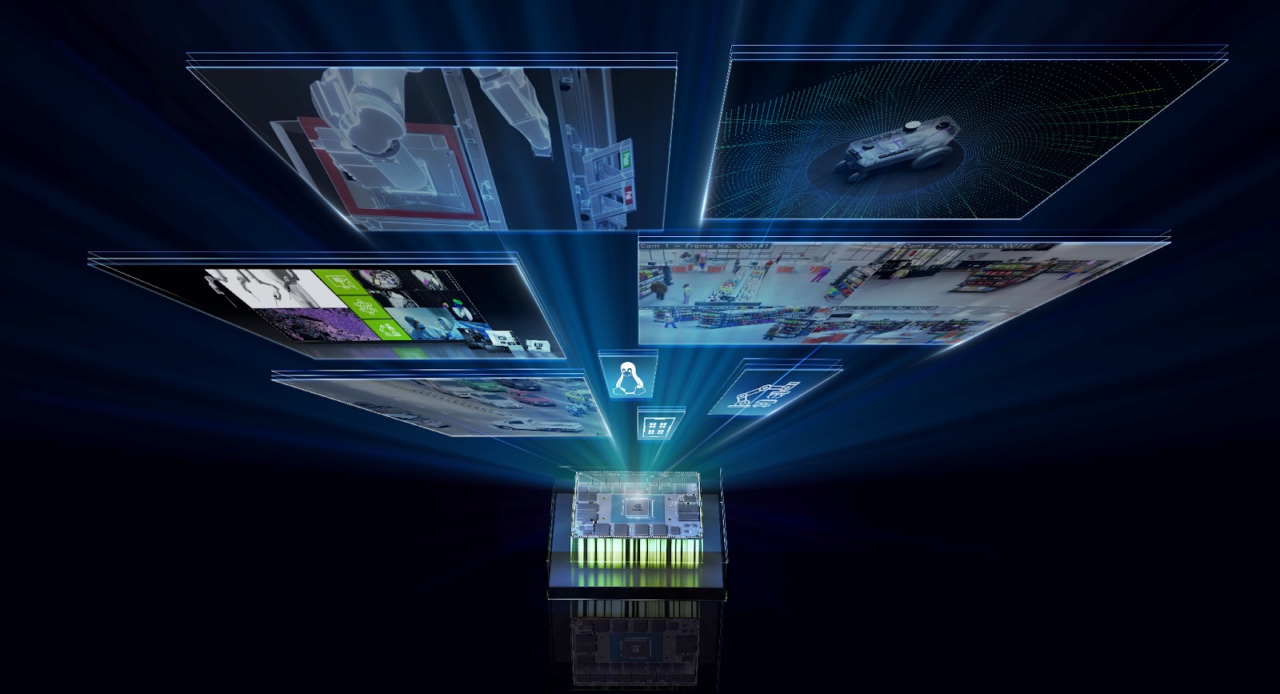

Announcing NVIDIA Metropolis Microservices for Jetson for Rapid Edge AI Development

Building vision AI applications for the edge often comes with notoriously long and costly development cycles. At the same time, quickly developing edge AI applications that are cloud-native, flexible, and secure has never been more important. Now, a powerful yet simple API-driven edge AI development workflow is available with the new NVIDIA Metropolis microservices. NVIDIA […]

Build Vision AI Applications at the Edge with NVIDIA Metropolis Microservices and APIs

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. NVIDIA Metropolis microservices provide powerful, customizable, cloud-native APIs and microservices to develop vision AI applications and solutions. The framework now includes NVIDIA Jetson, enabling developers to quickly build and productize performant and mature vision AI applications at […]

Fast-track Computer Vision Deployments with NVIDIA DeepStream and Edge Impulse

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. AI-based computer vision (CV) applications are increasing, and are particularly important for extracting real-time insights from video feeds. This revolutionary technology empowers you to unlock valuable information that was once impossible to obtain without significant operator intervention, […]

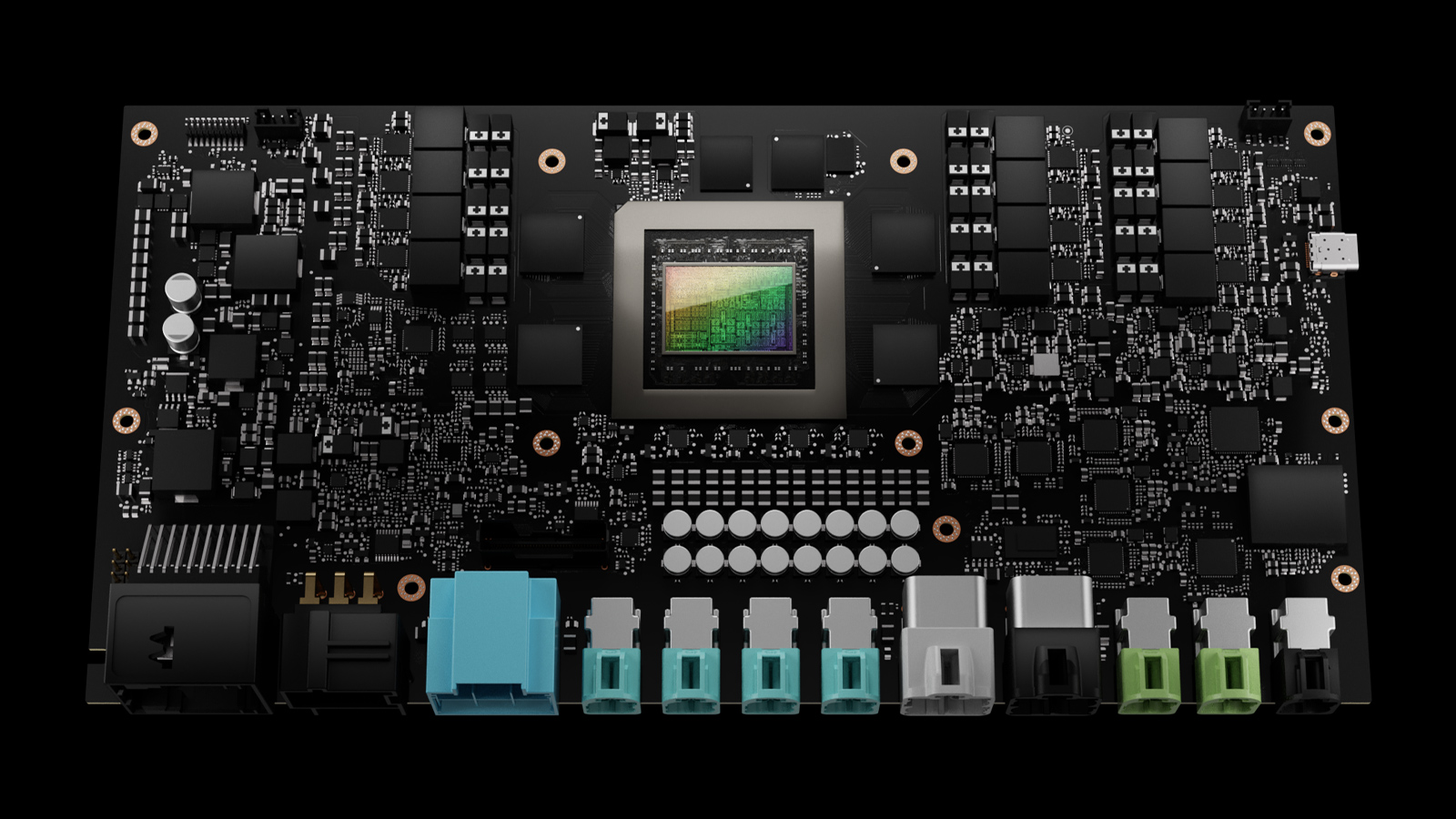

Wave of EV Makers Choose NVIDIA DRIVE for Automated Driving

Li Auto Selects DRIVE Thor for Next-Gen EVs; GWM, ZEEKR and Xiaomi Develop AI-Driven Cars Powered by NVIDIA DRIVE Orin. January 8, 2024 — CES — NVIDIA today announced that Li Auto, a pioneer in extended-range electric vehicles (EVs), has selected the NVIDIA DRIVE Thor™ centralized car computer to power its next-generation fleets. NVIDIA also announced […]

NVIDIA Brings Generative AI to Millions, With Tensor Core GPUs, LLMs, Tools for RTX PCs and Workstations

Leading AI Platform Gets RTX-Accelerated Boost From New GeForce RTX SUPER GPUs, AI Laptops From Every Top Manufacturer. January 8, 2024 — CES — NVIDIA today announced GeForce RTX™ SUPER desktop GPUs for supercharged generative AI performance, new AI laptops from every top manufacturer, and new NVIDIA RTX™-accelerated AI software and tools for both developers […]

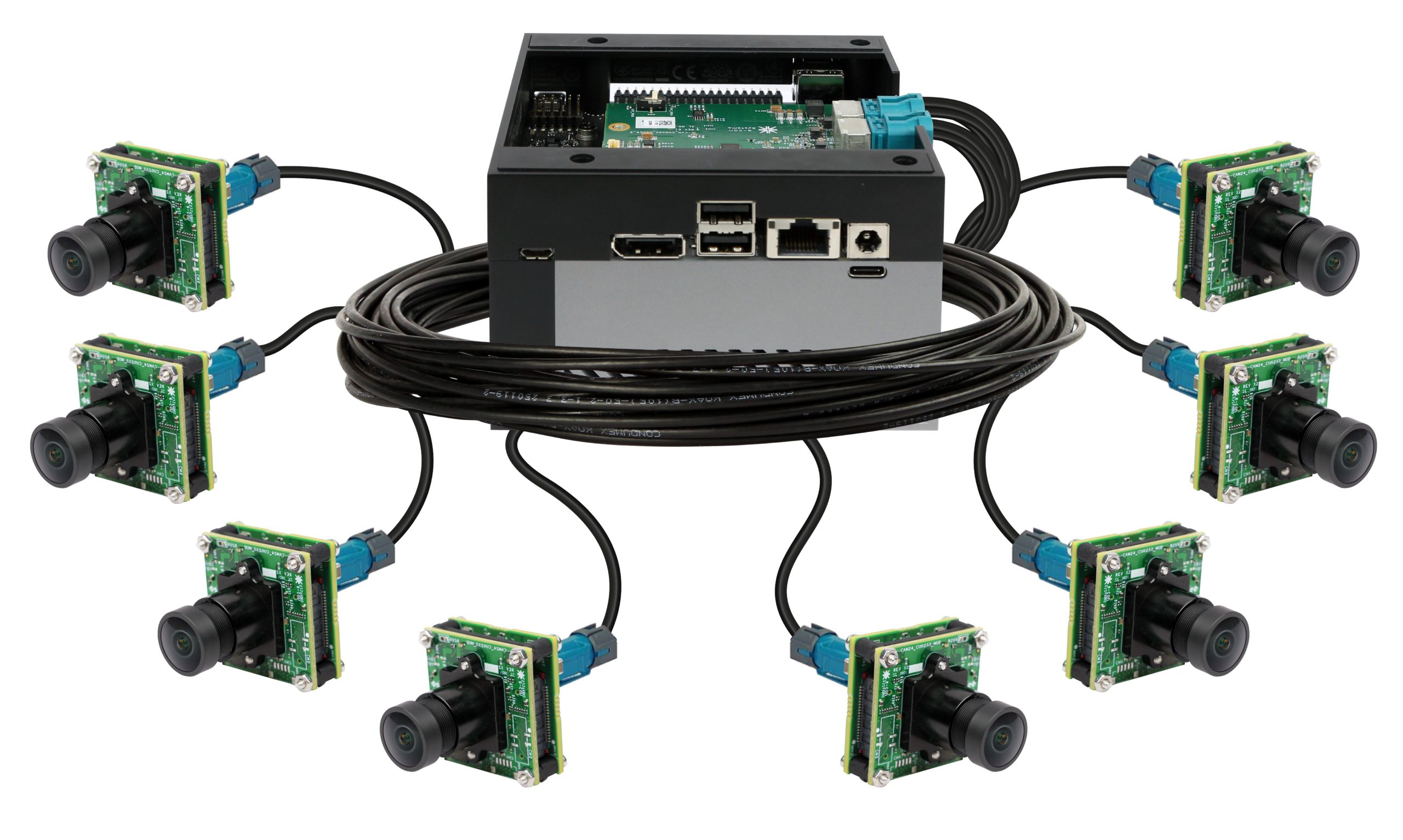

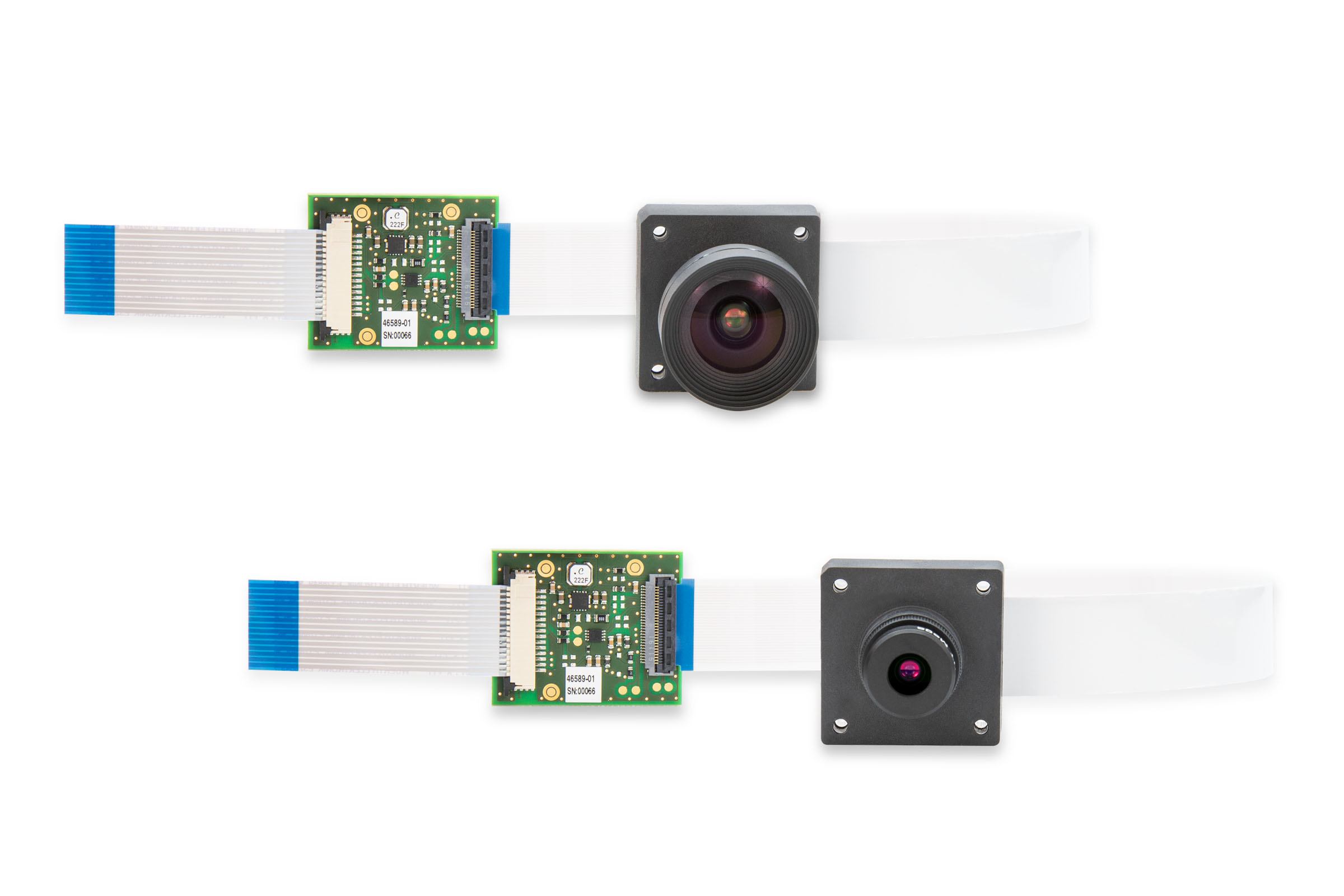

e-con Systems Demonstration of a 360-Degree Bird's-Eye-View Camera for Autonomous Mobility

Laxman Sankaran, Director of US Operations, and Vinoth Rajagopalan, Project Engineering Manager, both of e-con Systems, demonstrate the company’s latest edge AI and vision technologies and products at the December 2023 Edge AI and Vision Alliance Forum. Specifically, Sankaran and Rajagopalan demonstrate the company’s ArniCAM20, a 360-degree bird's-eye-view synchronized multi-camera solution. This multi-camera solution comprises […]

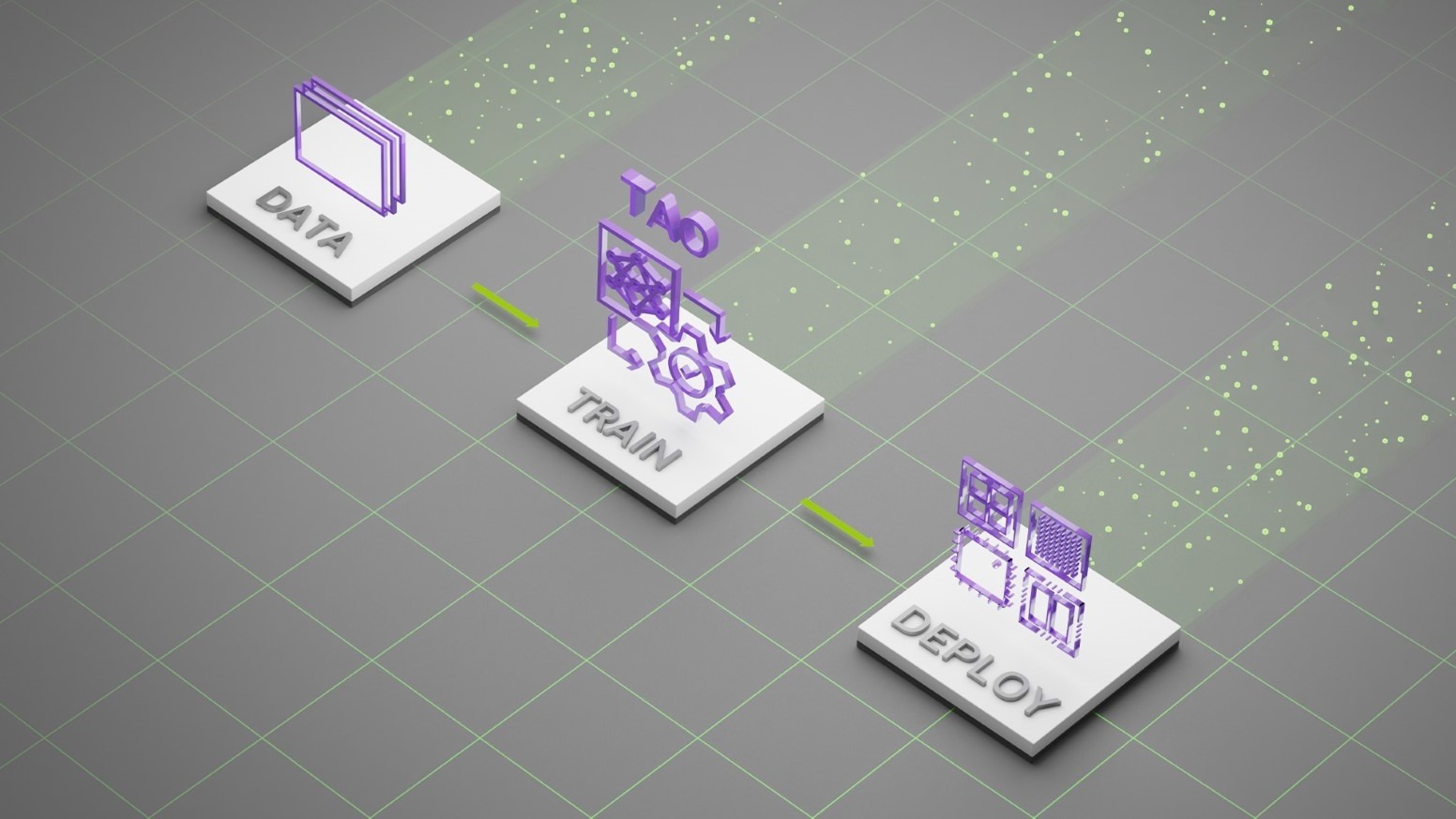

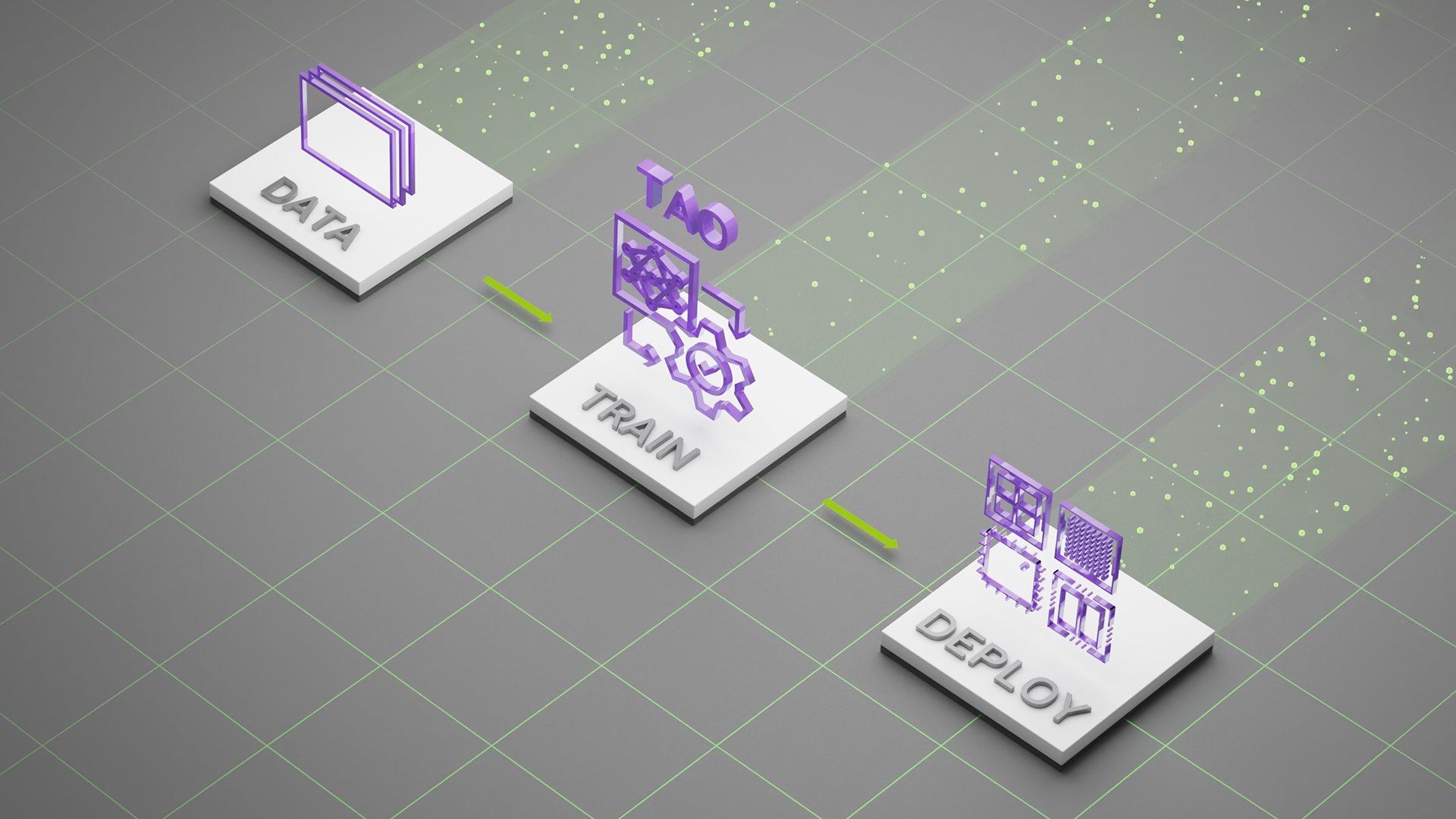

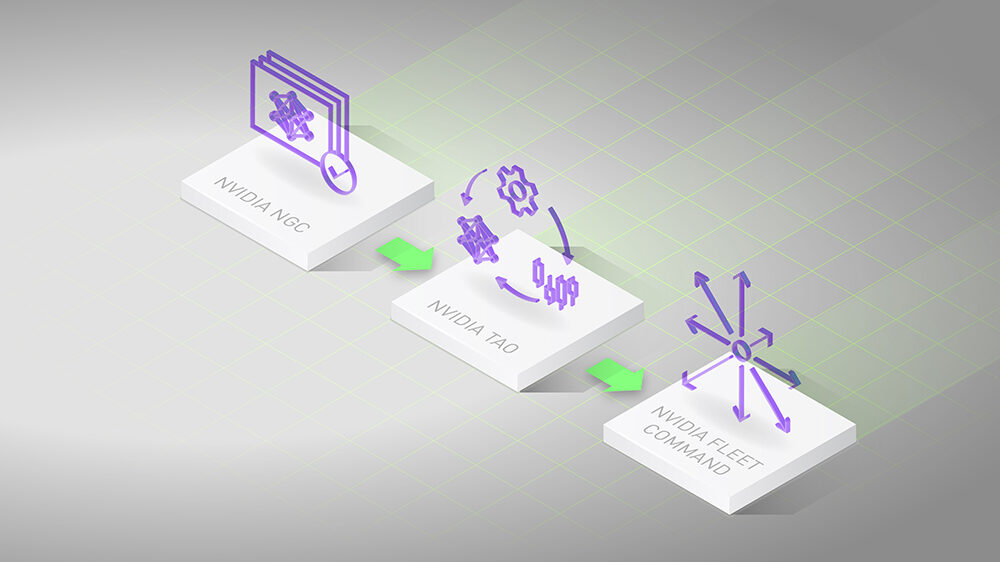

Develop and Optimize Vision AI Models for Trillions of Devices with NVIDIA TAO

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. With NVIDIA TAO Toolkit, developers around the world are building AI-powered visual perception and computer vision applications. Now the process is faster and easier than ever, thanks to significant platform enhancements and strong ecosystem adoption. NVIDIA TAO […]

Lattice Collaborates with NVIDIA to Accelerate Edge AI

Announces integrated solution combining low-power, low-latency Lattice FPGAs with the NVIDIA Orin platform to efficiently bridge sensors to AI applications. HILLSBORO, Ore. – Dec. 5, 2023 – Today at the Lattice Developers Conference, Lattice Semiconductor (NASDAQ: LSCC) introduced a new reference sensor-bridging design to accelerate the development of edge AI applications using the NVIDIA Jetson […]

Nvidia Reaps the Benefits of AI Growth

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. Nvidia’s financial results for Q3 2023 caused excitement in the market with revenues of $18.12 billion, a growth of 34% from Q2 and an impressive 206% year-on-year growth. The company appears to […]

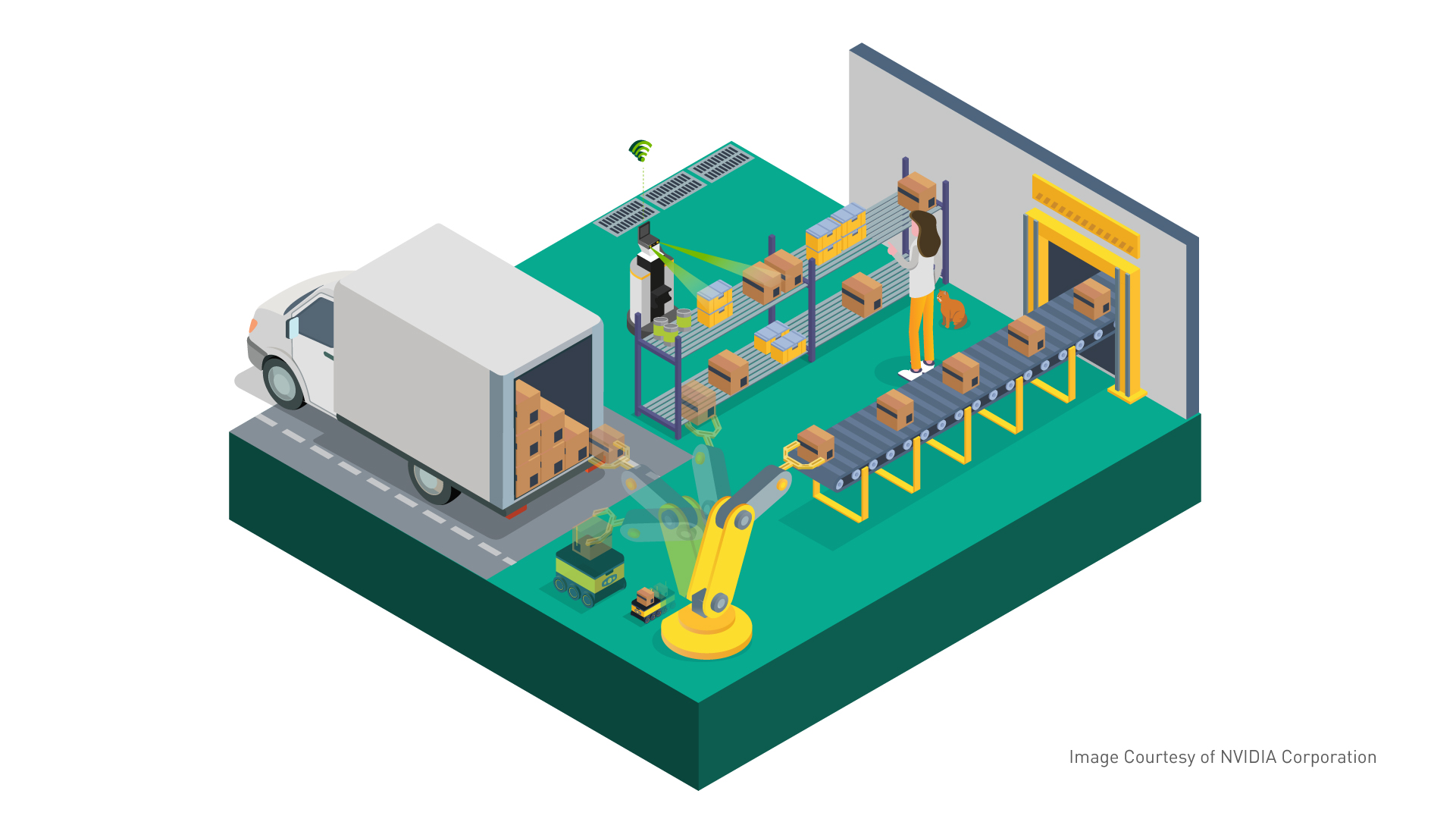

NVIDIA Expands Robotics Platform to Meet the Rise of Generative AI

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. More than 10,000 companies building on the NVIDIA Jetson platform can now use new generative AI, APIs and microservices to accelerate industrial digitalization. Powerful generative AI models and cloud-native APIs and microservices are coming to the edge. […]

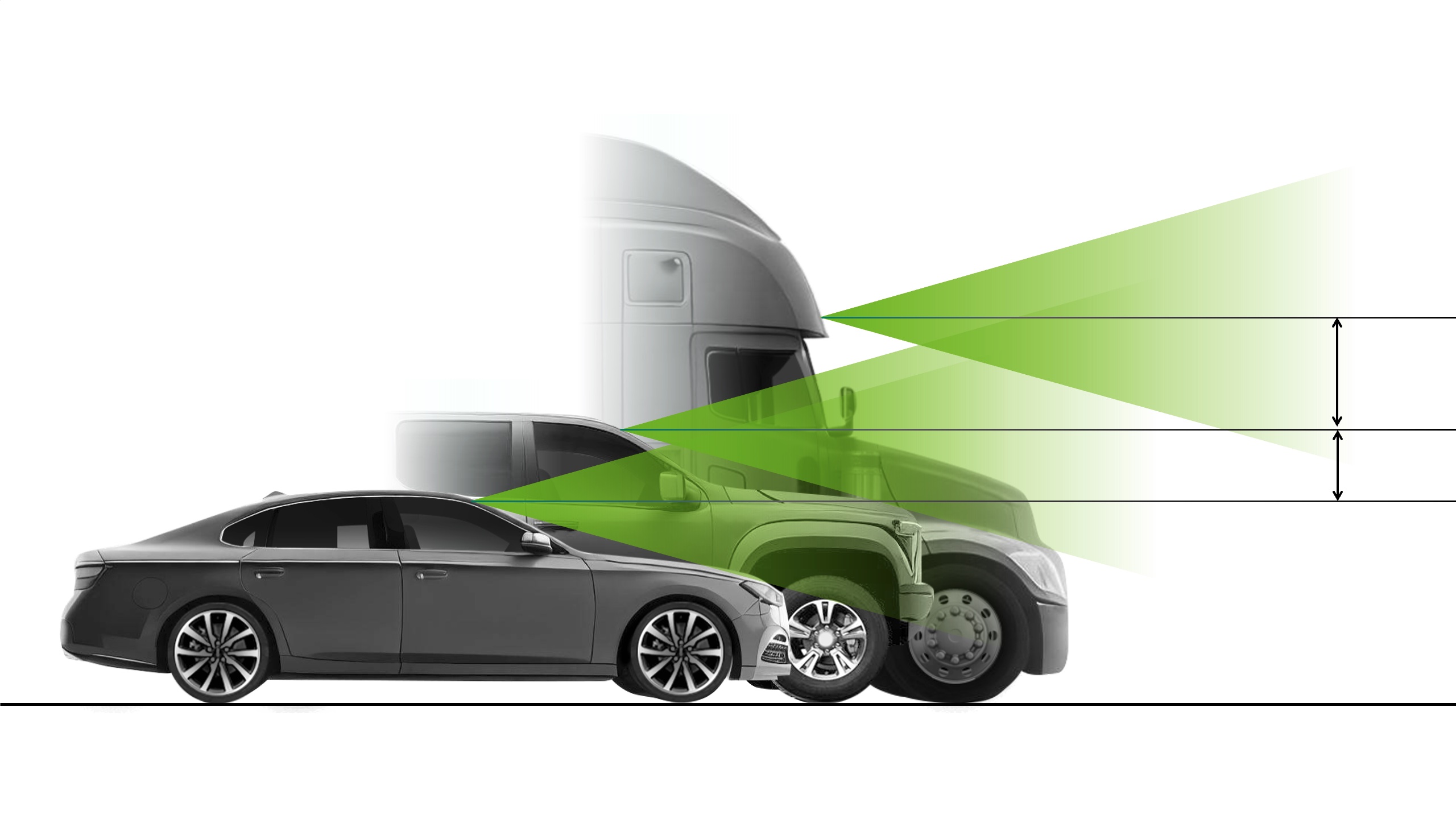

Using Synthetic Data to Address Novel Viewpoints for Autonomous Vehicle Perception

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Autonomous vehicles (AVs) come in all shapes and sizes, ranging from small passenger cars to multi-axle semi-trucks. However, a perception algorithm deployed on these vehicles must be trained to handle similar situations, like avoiding an obstacle or […]

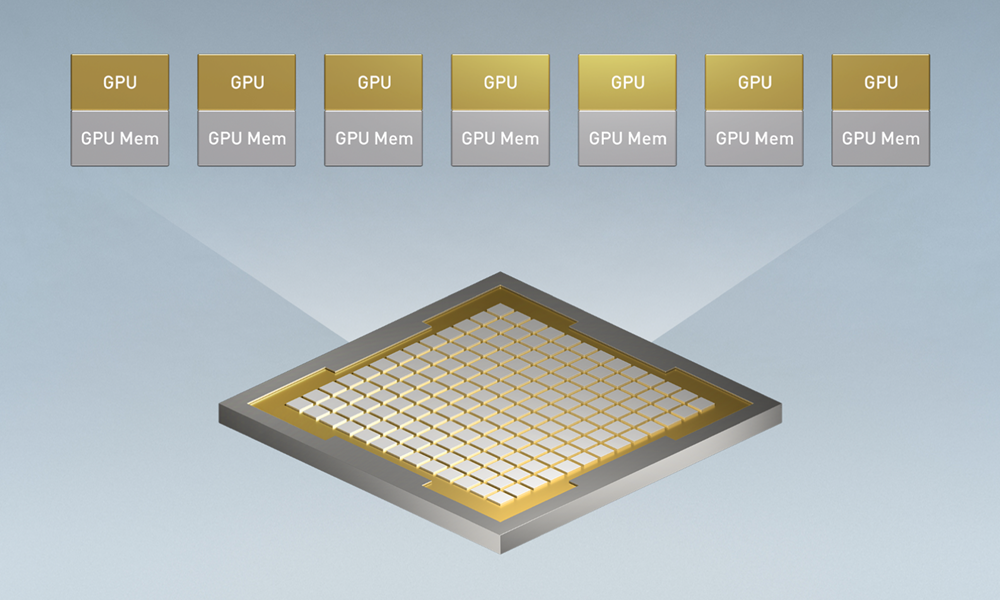

NVIDIA Supercharges Hopper, the World’s Leading AI Computing Platform

HGX H200 Systems and Cloud Instances Coming Soon From World’s Top Server Manufacturers and Cloud Service Providers. November 13, 2023—SC23—NVIDIA today announced it has supercharged the world’s leading AI computing platform with the introduction of the NVIDIA HGX™ H200. Based on NVIDIA Hopper™ architecture, the platform features the NVIDIA H200 Tensor Core GPU with advanced […]

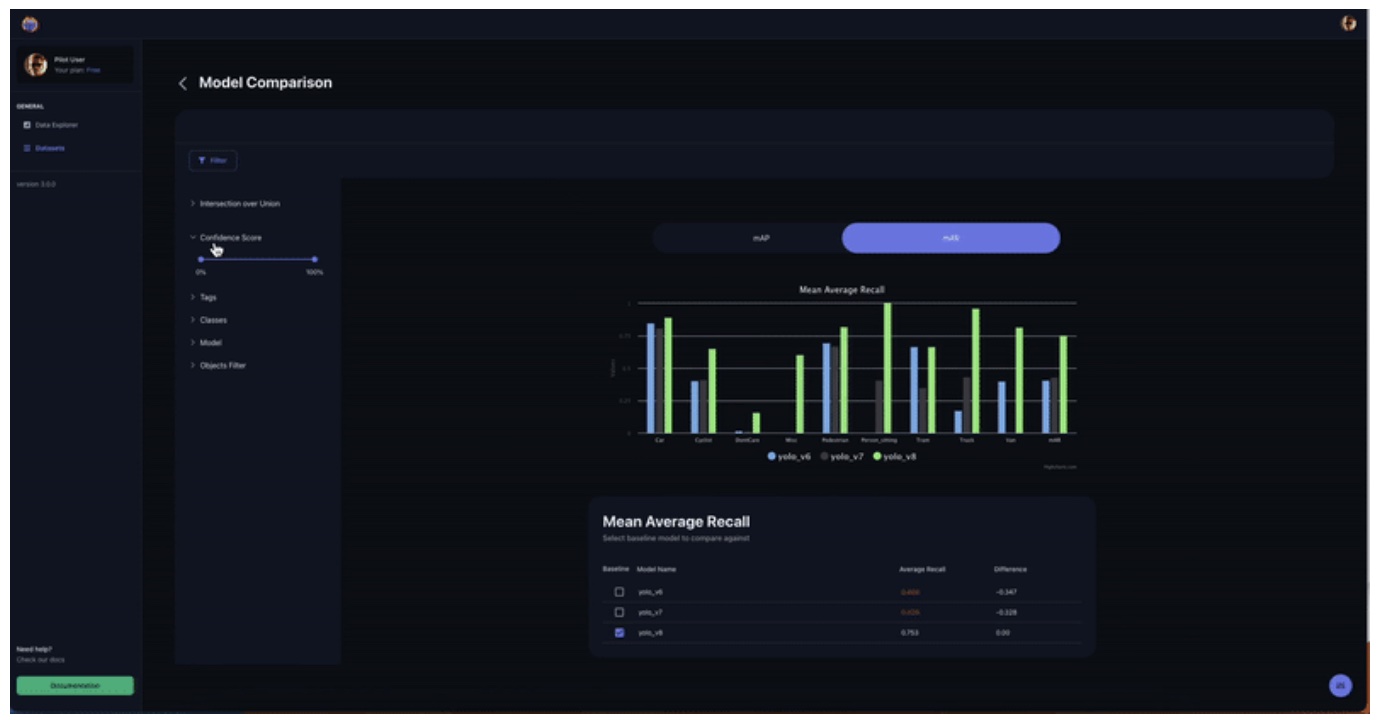

NVIDIA TAO Toolkit “Zero to Hero”: A Simple Guide for Model Comparison in Object Detection

This article was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. In Part 2 of our NVIDIA TAO Toolkit series, we describe and address the common challenges of model deployment, in particular edge deployment. We explore practical solutions to these challenges, especially the issues surrounding model comparison. Here […]

NVIDIA TAO Toolkit “Zero to Hero”: Setup Tips and Tricks

This article was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. A quick setup guide for an NVIDIA TAO Toolkit (v3 & v4) object detection pipeline for edge computing, including tips and tricks and common pitfalls. This article will help you set up an NVIDIA TAO Toolkit (v3 & v4) […]

How NVIDIA and e-con Systems are Helping Solve Major Challenges In the Retail Industry

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. e-con Systems has proven expertise in integrating its cameras into the NVIDIA platform, including Jetson Xavier NX / Nano / TX2 NX, Jetson AGX Xavier, Jetson AGX Orin, and NVIDIA Jetson Orin NX / Nano. […]

FRAMOS Launches Event-based Vision Sensing (EVS) Development Kit

[Munich, Germany / Ottawa, Canada, 4 October] — FRAMOS launched the FSM-IMX636 Development Kit, an innovative platform allowing developers to explore the capabilities of Event-based Vision Sensing (EVS) technology and test potential benefits of using the technology on NVIDIA® Jetson with the FRAMOS sensor module ecosystem. Built around SONY and PROPHESEE’s cutting-edge EVS technology, […]

e-con Systems Launches Superior HDR Multi-camera Solution for NVIDIA Jetson Orin to Revolutionize Autonomous Mobility

California & Chennai (Sep 27, 2023): e-con Systems, with over two decades of experience in designing, developing, and manufacturing OEM cameras, has recently launched STURDeCAM31 – a 3MP GMSL2 HDR IP69K camera powered by Sony® ISX031 sensor for NVIDIA Jetson AGX Orin. Designed for automotive grade, this small form factor camera has been engineered to […]

Selecting the Right Camera for the NVIDIA Jetson and Other Embedded Systems

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. The camera module is the most integral part of an AI-based embedded system. With so many camera module choices on the market, the selection process may seem overwhelming. This post breaks down the process to help make […]

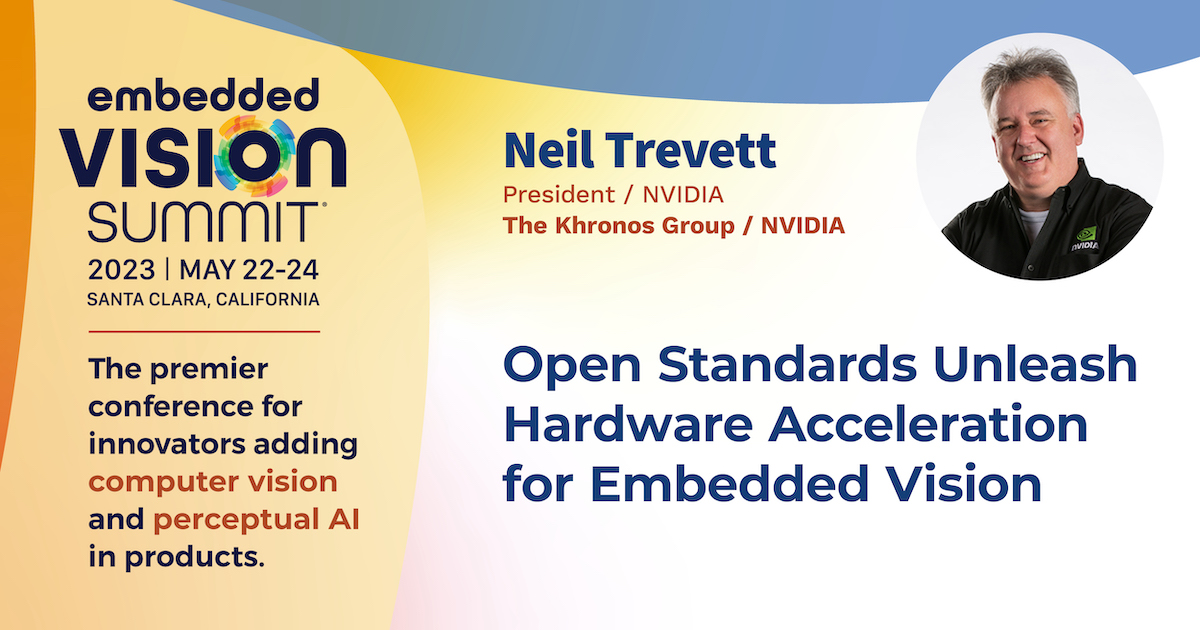

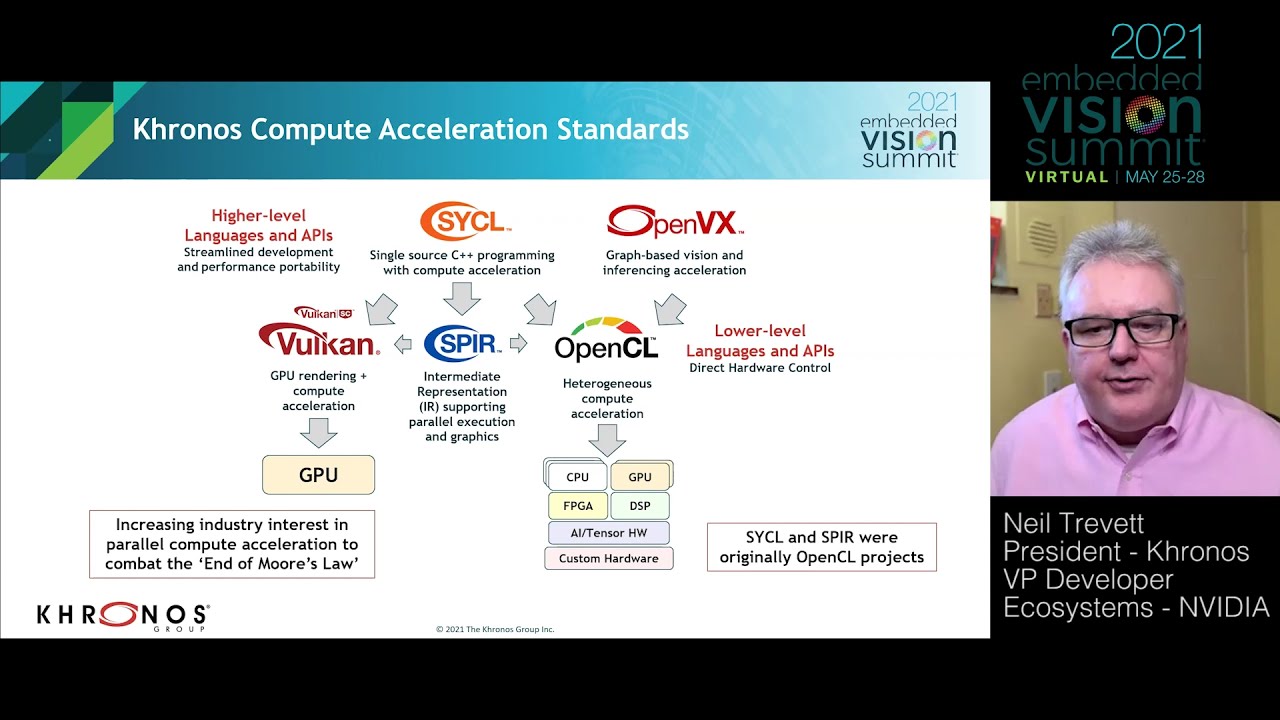

“Open Standards Unleash Hardware Acceleration for Embedded Vision,” a Presentation from the Khronos Group

Neil Trevett, President of the Khronos Group and Vice President of Developer Ecosystems at NVIDIA, presents the “Open Standards Unleash Hardware Acceleration for Embedded Vision” tutorial at the May 2023 Embedded Vision Summit. Offloading visual processing to a hardware accelerator has many advantages for embedded vision systems. Decoupling hardware and software removes barriers to innovation […]

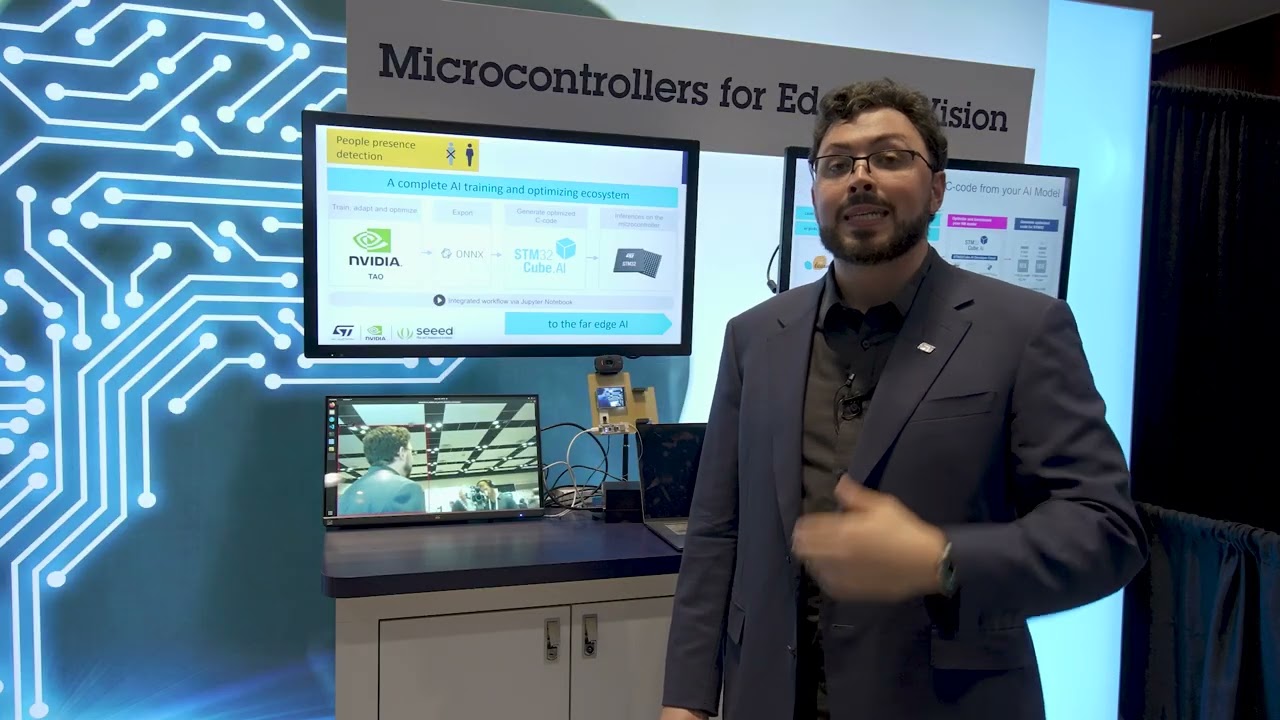

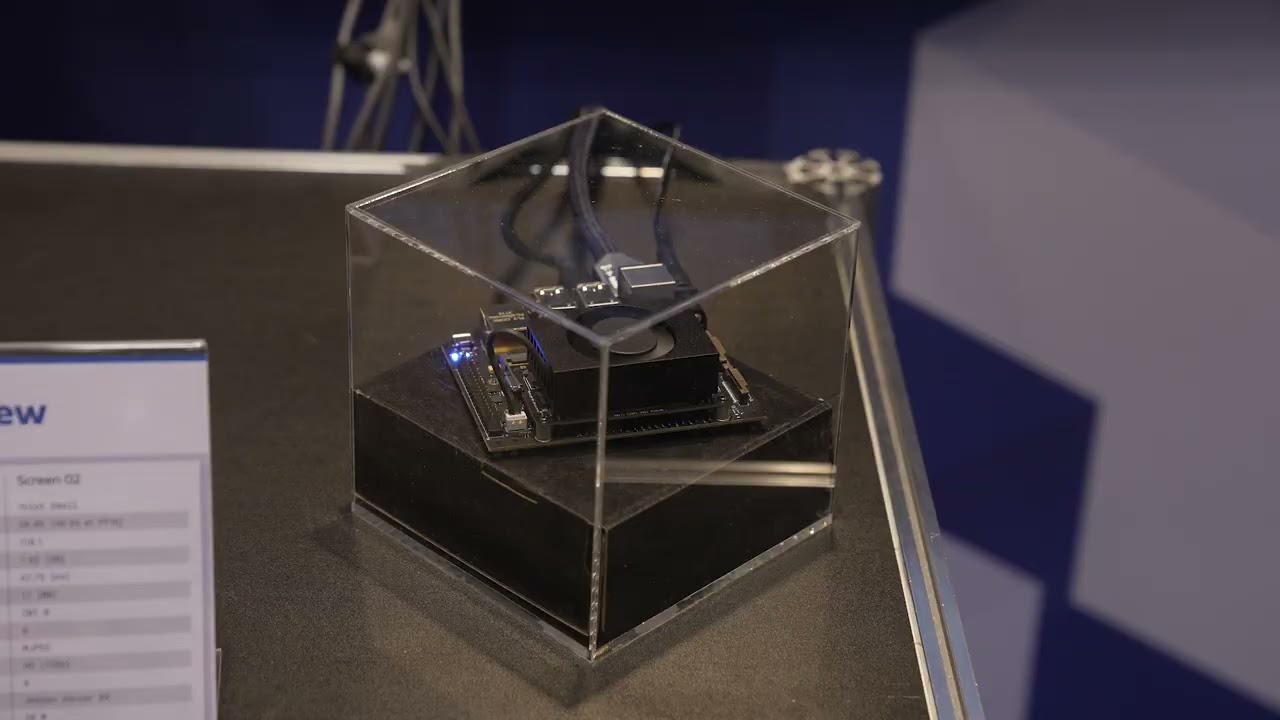

STMicroelectronics Demonstration of the Cube.ai Developer Cloud, from TAO to STM32

Louis Gobin, AI Product Marketing Engineer at STMicroelectronics, demonstrates the company’s latest edge AI and vision technologies and products at the 2023 Embedded Vision Summit. Specifically, Gobin demonstrates the work of Seeed, using STMicroelectronics and NVIDIA solutions. Gobin shows the Seeed Wio Lite AI board based on an STM32H7 microcontroller. The STM32H7 runs a real-time […]

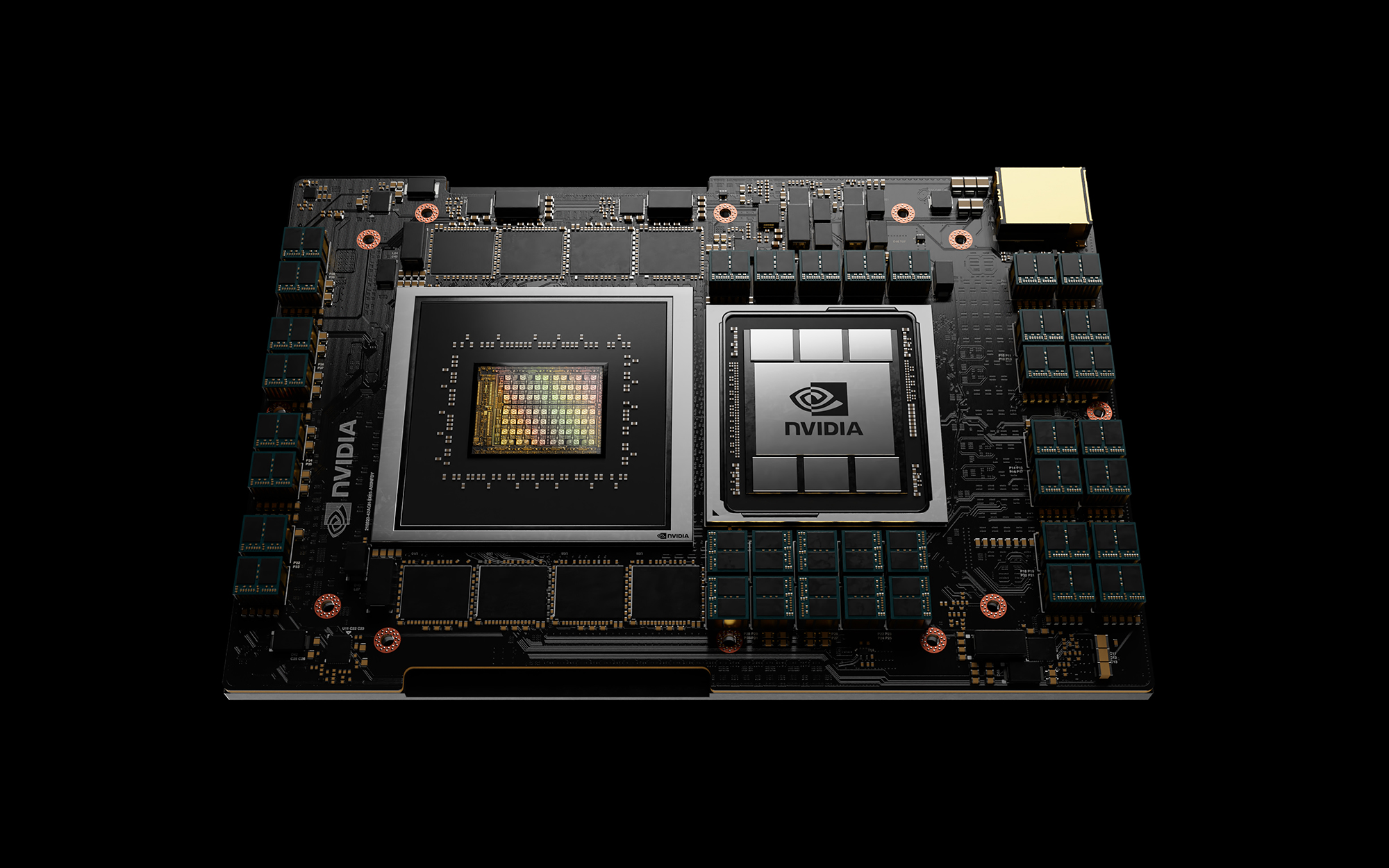

NVIDIA Unveils Next-generation GH200 Grace Hopper Superchip Platform for Era of Accelerated Computing and Generative AI

World’s First HBM3e Processor Offers Groundbreaking Memory, Bandwidth; Ability to Connect Multiple GPUs for Exceptional Performance; Easily Scalable Server Design. August 8, 2023—SIGGRAPH—NVIDIA today announced the next-generation NVIDIA GH200 Grace Hopper™ platform — based on a new Grace Hopper Superchip with the world’s first HBM3e processor — built for the era of accelerated computing and […]

Improve Accuracy and Robustness of Vision AI Apps with Vision Transformers and NVIDIA TAO

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Vision Transformers (ViTs) are taking computer vision by storm, offering incredible accuracy, robust solutions for challenging real-world scenarios, and improved generalizability. The algorithms are playing a pivotal role in boosting computer vision applications and NVIDIA is making […]

Supercharge Edge AI with NVIDIA TAO on Edge Impulse

We are excited to announce a significant leap forward for the edge AI ecosystem. NVIDIA’s TAO Toolkit is now fully integrated into Edge Impulse, enhancing our platform’s capabilities and creating new possibilities for developers, engineers, and businesses alike. Check out the Edge Impulse documentation for more information on how to get started with NVIDIA TAO […]

FPD-Link III Camera for NVIDIA Jetson AGX Orin Development Kit

July 20, 2023 – e-con Systems is thrilled to announce that it is extending the support of NeduCAM25, our FPD-Link III camera, to the powerful NVIDIA Jetson AGX Orin platform. Click here to buy and evaluate the NeduCAM25_CUOAGX for your application. So, why choose this FPD-Link III camera? Here are some of its remarkable […]

Get 60 fps Performance at 12 Mpixel Resolution with e-con’s Ultra-low Light Camera

July 20, 2023 – e-con Systems’ 12MP MIPI CSI-2 camera module, which is capable of streaming 60fps at 12MP and 4K resolutions, is specifically designed to meet the high demands of various applications in the field of in-vitro diagnostics (IVD), automated microscopy, and fundus imaging. This camera module is based on the Sony® Starvis™ IMX412 […]

NVIDIA Expands Isaac Software Access and Jetson Platform Availability, Accelerating Robotics From Cloud to Edge

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Coming to Microsoft Azure, Isaac Sim on Omniverse Cloud provides scalable simulation; Jetson Orin lineup, now in production, delivers accelerated computing capabilities for robotics. NVIDIA announced at GTC that Omniverse Cloud will be hosted on Microsoft Azure, […]

Upcoming Webinar Explores How to Choose the Right Processor and Camera For Your Application

On June 28, 2023 at 10:00 am PT (1:00 pm ET), Alliance Member companies e-con Systems and NVIDIA will co-deliver the free webinar “How to Choose the Right Jetson Processor and Camera For Your Application.” From the event page: The definitive guide to developing a successful edge AI-enabled embedded vision application. Key takeaways: Find out […]

NVIDIA Sets the Pace for the AI Era

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. Record-breaking results announced by NVIDIA have fuelled speculation of an AI gold rush. Days later, the company introduced platforms for generative AI at Computex 2023. Yole Group and its entities, Yole SystemPlus […]

NVIDIA Grace Hopper Superchips Designed for Accelerated Generative AI Enter Full Production

GH200-Powered Systems Join 400+ System Configurations From Global Systems-Makers Based on NVIDIA Grace, Hopper, Ada Lovelace Architectures. May 28, 2023—COMPUTEX—NVIDIA today announced that the NVIDIA® GH200 Grace Hopper Superchip is in full production, set to power systems coming online worldwide to run complex AI and HPC workloads. The GH200-powered systems join more than 400 […]

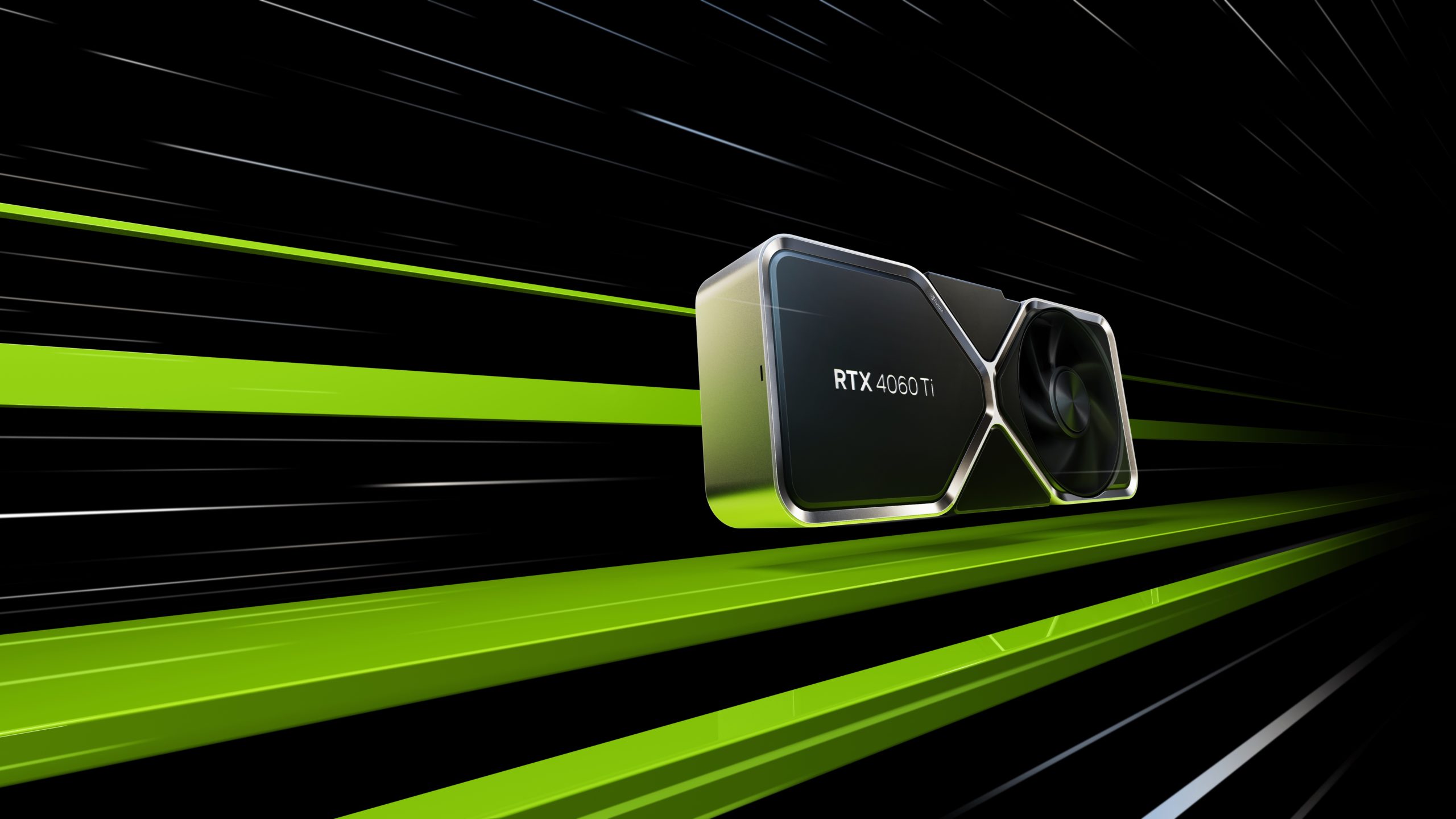

GeForce RTX 4060 Family Is Here: NVIDIA’s Revolutionary Ada Lovelace Architecture Starts at $299

Superpowered by AI, Newest GPUs Provide 2x the Horsepower of Latest Gaming Consoles. Thursday, May 18, 2023 – NVIDIA today announced the GeForce RTX™ 4060 family of GPUs, with two graphics cards that deliver all the advancements of the NVIDIA® Ada Lovelace architecture — including DLSS 3 neural rendering and third-generation ray-tracing technologies at high […]

Increasing Throughput and Reducing Costs for AI-based Computer Vision with CV-CUDA

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Real-time cloud-scale applications that involve AI-based computer vision are growing rapidly. The use cases include image understanding, content creation, content moderation, mapping, recommender systems, and video conferencing. However, the compute cost of these workloads is growing too, […]

NVIDIA GeForce RTX 4070 Brings Power of Ada Lovelace Architecture and DLSS 3 to Millions, Starting at $599

Stream and Create Faster With RTX Acceleration and Advanced AI Tools; Play the Latest Games With Max Settings at 1440p Resolution. Wednesday, April 12, 2023 – NVIDIA today announced the GeForce RTX™ 4070 GPU, delivering all the advancements of the NVIDIA® Ada Lovelace architecture — including DLSS 3 neural rendering, real-time ray-tracing technologies and the […]

NVIDIA Metropolis Ecosystem Grows with Advanced Development Tools to Accelerate Vision AI

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. More than 1,000 companies transform their spaces and processes with vision AI using NVIDIA Metropolis, which comprises developer tools downloaded over 1 million times. With AI at its tipping point, AI-enabled computer vision is being used to […]

Access the Latest in Vision AI Model Development Workflows with NVIDIA TAO Toolkit 5.0

This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. NVIDIA TAO Toolkit provides a low-code AI framework to accelerate vision AI model development suitable for all skill levels, from beginners to expert data scientists. With NVIDIA TAO (Train, Adapt, Optimize) Toolkit, developers can use the power […]

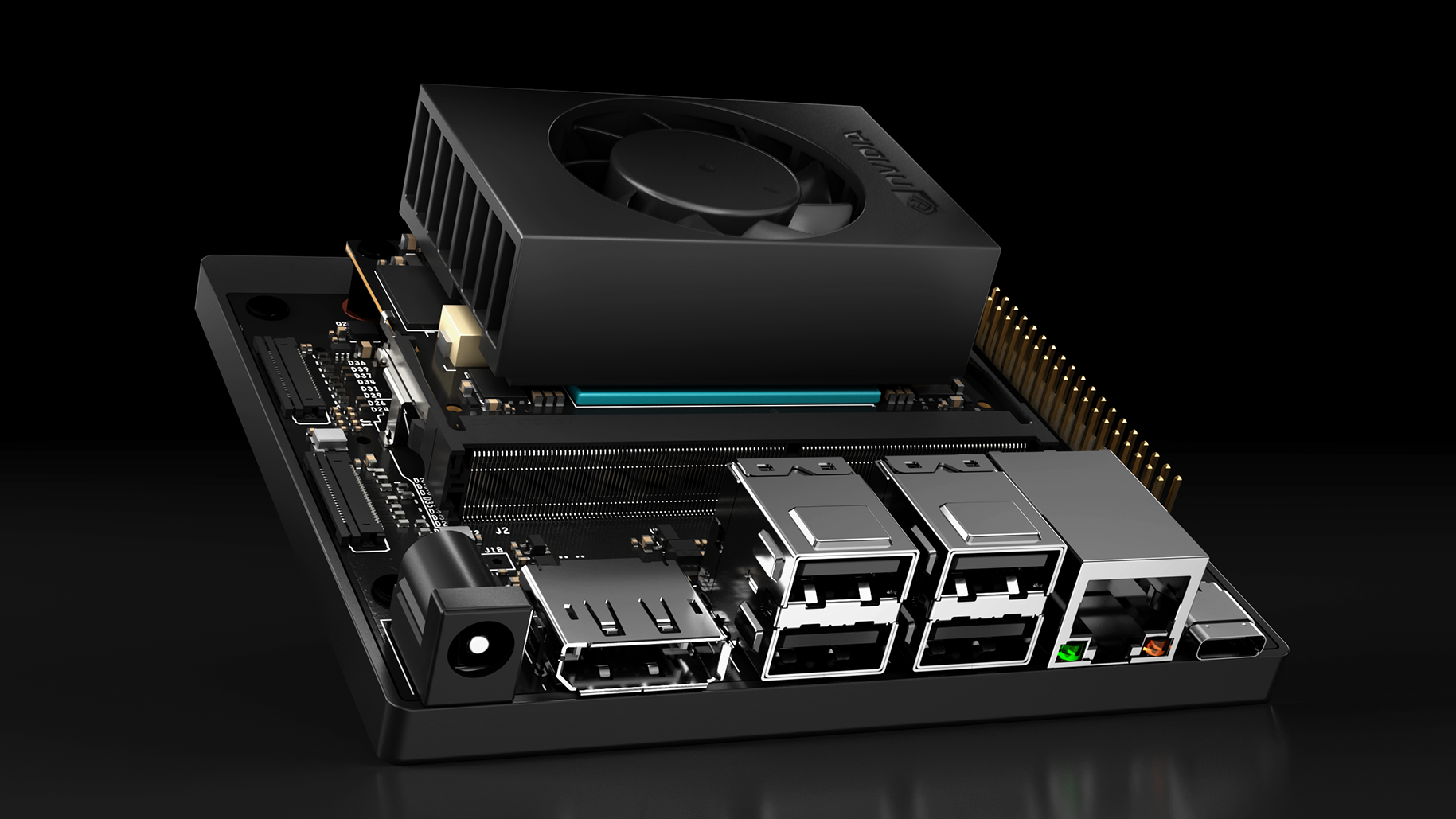

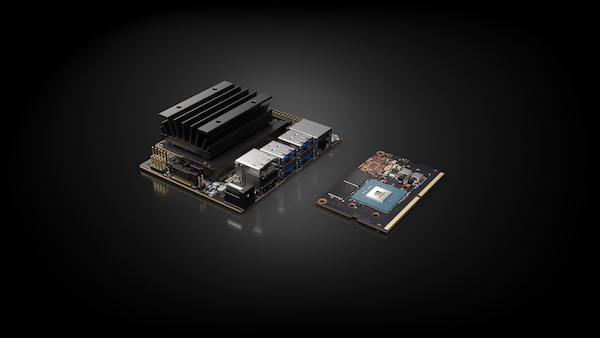

Develop AI-Powered Robots, Smart Vision Systems, and More with NVIDIA Jetson Orin Nano Developer Kit

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. The NVIDIA Jetson Orin Nano Developer Kit sets a new standard for creating entry-level AI-powered robots, smart drones, and intelligent vision systems, as NVIDIA announced at NVIDIA GTC 2023. It also simplifies getting started with the NVIDIA […]

STM32Cube.AI and NVIDIA TAO Toolkit Deliver a 10x Performance Increase on an STM32H7 Running Vision AI

This blog post was originally published at STMicroelectronics’ website. It is reprinted here with the permission of STMicroelectronics. Today, NVIDIA announced TAO Toolkit 5, which now supports the ONNX quantized format, thus opening up a new way for STM32 engineers to build machine learning applications by making the technology more accessible. The ST demo featured an […]

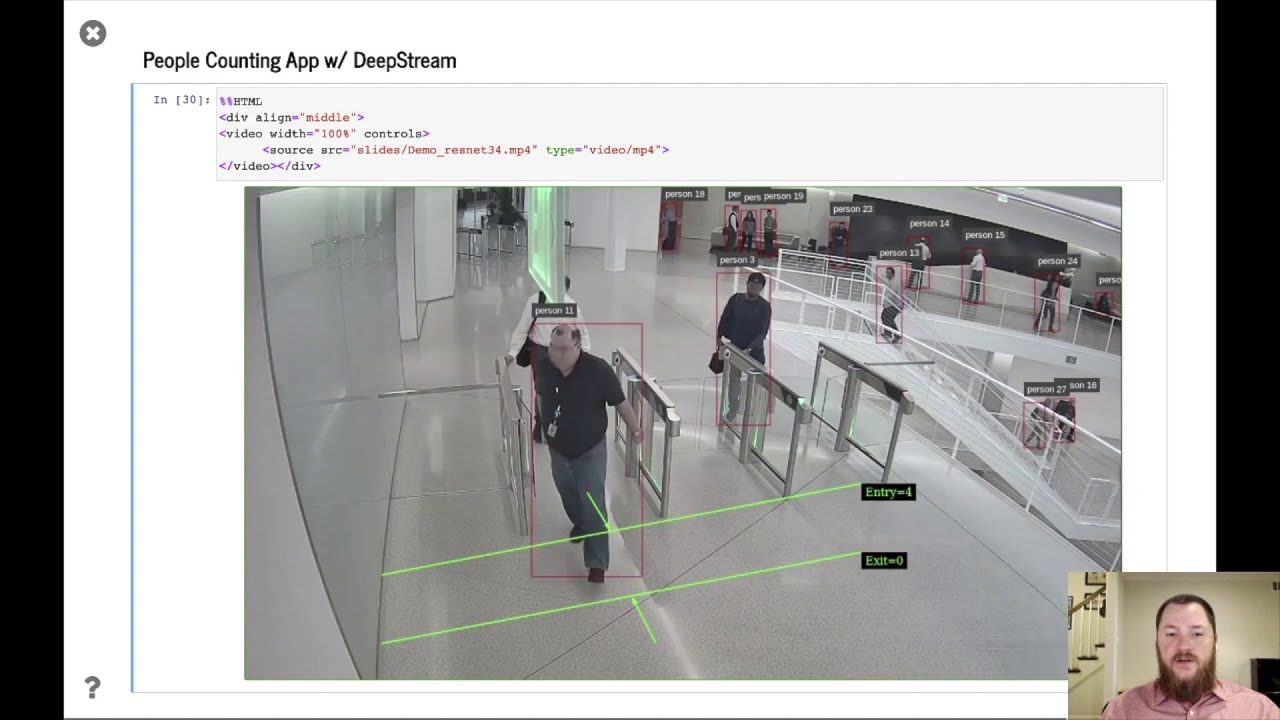

Seamlessly Develop Vision AI Applications with NVIDIA DeepStream SDK 6.2

NVIDIA announced the general availability of the NVIDIA DeepStream SDK 6.2, an AI analytics toolkit for building high-performance video analytics and streaming applications. The update adds new features including enhanced multi-object trackers, support for new sensors, integration with REST APIs, updated Graph Composer, and enterprise-grade support through NVIDIA AI Enterprise. Download the DeepStream SDK 6.2 […]

VVDN Technologies Demonstration of Its Various Reference Platforms

Kalpeshkumar Chauhan, Senior Director and Technology Evangelist for Vision and IoT at VVDN Technologies, demonstrates the company’s latest edge AI and vision technologies and products at the December 2022 Edge AI and Vision Innovation Forum. Specifically, Chauhan demonstrates the company’s various reference platforms, developed in partnership with other Alliance Member companies. VVDN has strong relationships […]

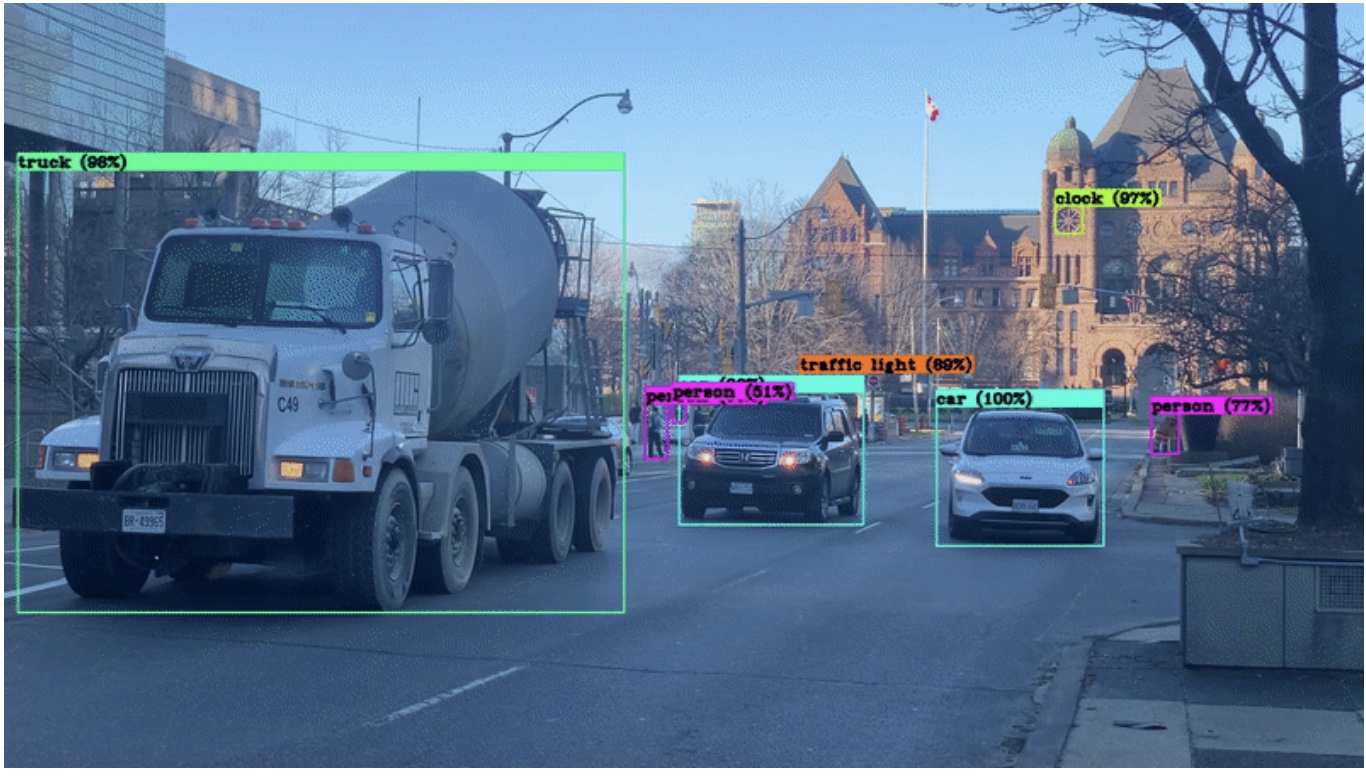

Deci Demonstration of Real-time Object Detection on an NVIDIA Jetson SoC with DeciDet

Nave Assaf, Deep Learning Engineer at Deci, demonstrates the company’s latest edge AI and vision technologies and products at the 2022 Embedded Vision Summit. Specifically, Assaf demonstrates how the DeciDet model runs real-time object detection on eight video streams connected to one NVIDIA Jetson device while outperforming YOLOX both in terms of accuracy and […]

Basler Announces Vision Compatibility for New NVIDIA Jetson Orin Nano Edge AI Platform

Ahrensburg, 20 September 2022 – Basler will be introducing Add-on Camera Kits with 5 and 13 MP to support the NVIDIA® Jetson Orin™ Nano system-on-modules (SOMs), which have set a new baseline for entry-level edge AI and robotics, as announced today at NVIDIA GTC. Add-on Camera Kits are ready-to-use vision extensions that include an adapter […]

NVIDIA Launches IGX Edge AI Computing Platform for Safe, Secure Autonomous Systems

Platform Advances Human-Machine Collaboration Across Manufacturing, Logistics and Healthcare. Tuesday, September 20, 2022 — GTC — NVIDIA today introduced the NVIDIA IGX platform for high-precision edge AI, bringing advanced security and proactive safety to sensitive industries such as manufacturing, logistics and healthcare. In the past, such industries required costly solutions custom-built for specific use cases, […]

NVIDIA Jetson Orin Nano Sets New Standard for Entry-Level Edge AI and Robotics With 80x Performance Leap

Canon, John Deere, Microsoft Azure, Teradyne, TK Elevator Join Over 1,000 Customers Adopting Jetson Orin Family Within Six Months of Launch. Tuesday, September 20, 2022 — GTC — NVIDIA today expanded the NVIDIA® Jetson™ lineup with the launch of new Jetson Orin Nano™ system-on-modules that deliver up to 80x the performance over the prior generation, setting a new standard […]

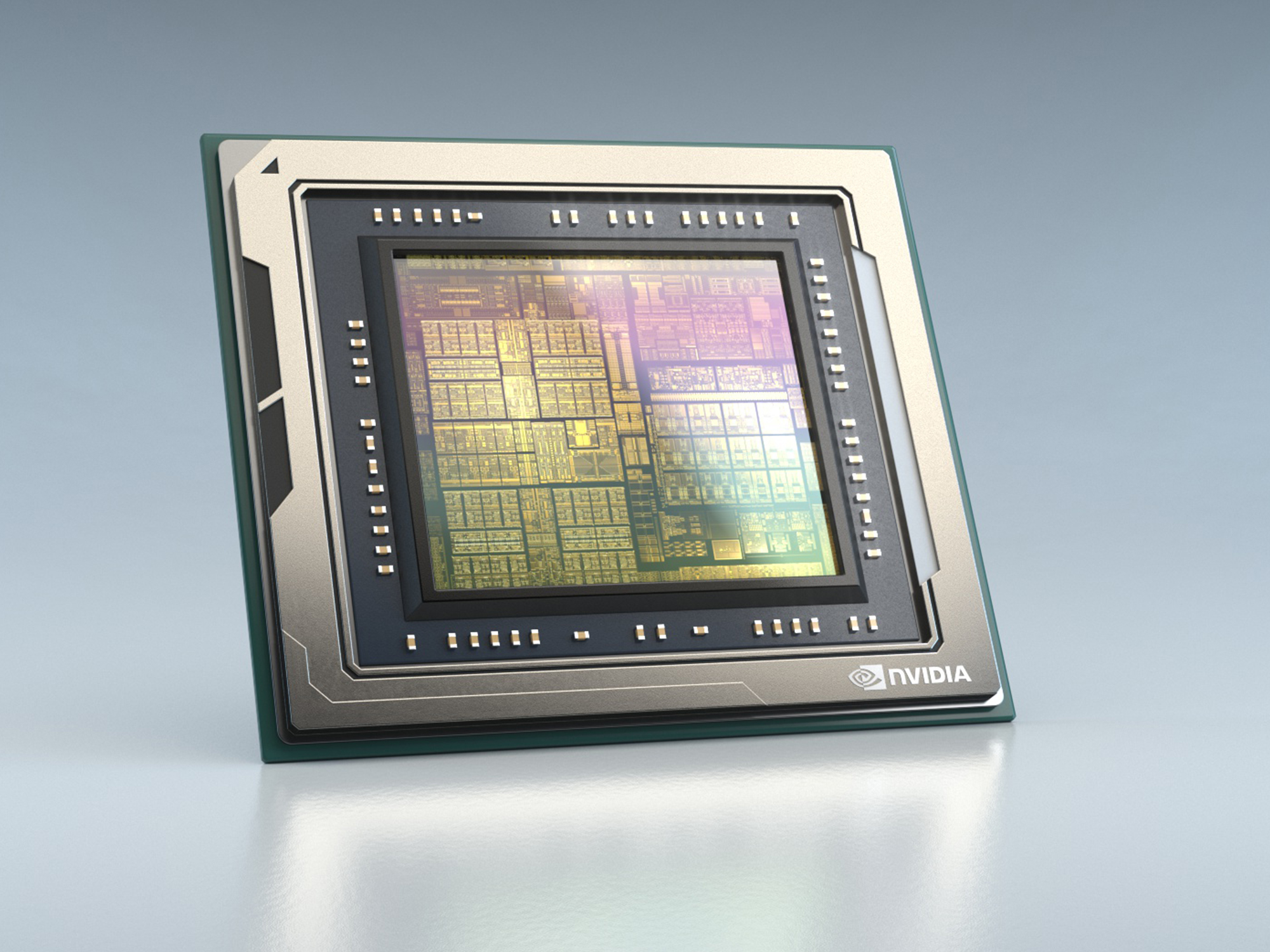

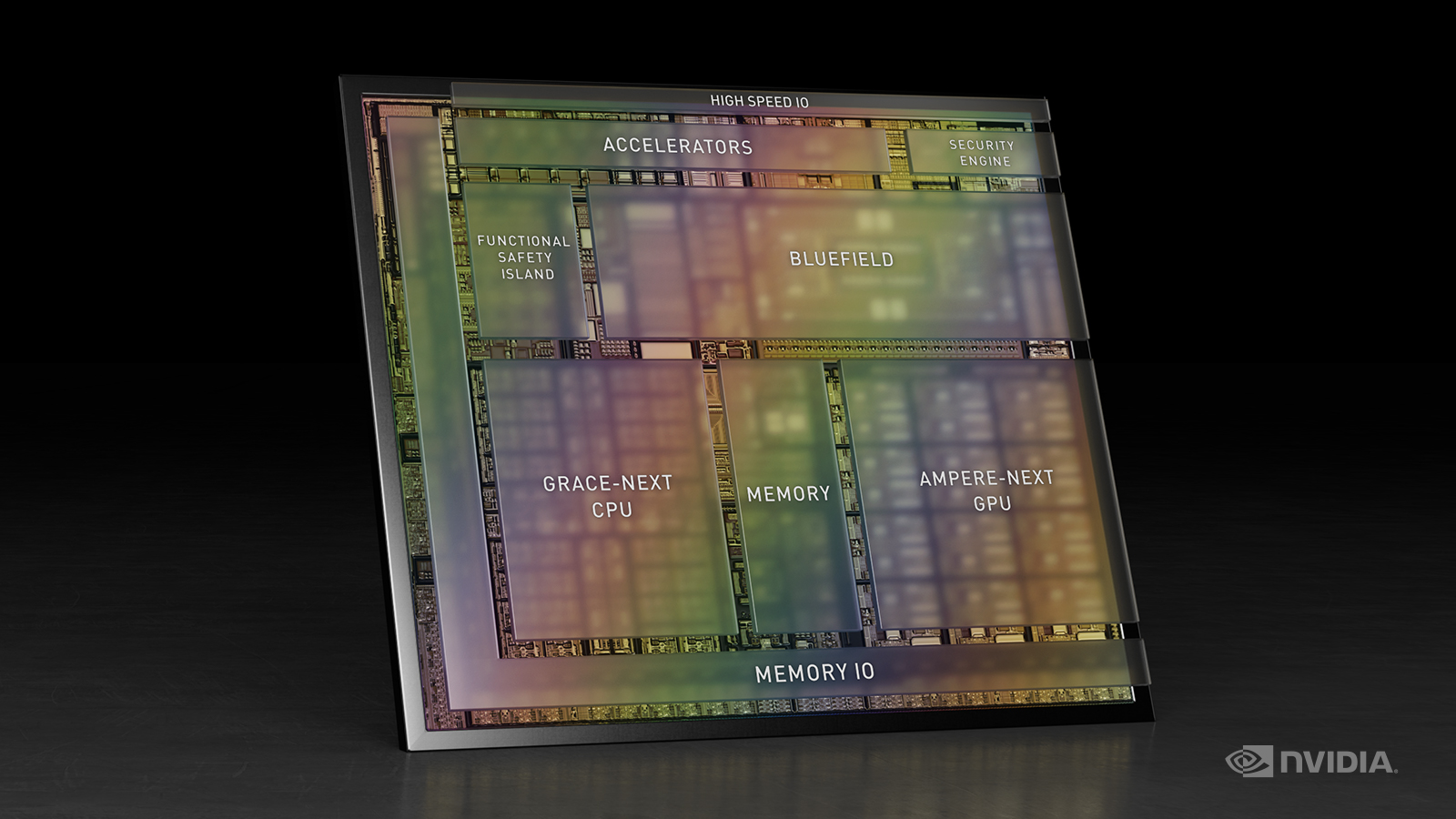

NVIDIA Unveils DRIVE Thor — Centralized Car Computer Unifying Cluster, Infotainment, Automated Driving, and Parking in a Single, Cost-Saving System

Wielding 2,000 Teraflops of Performance, Platform Integrates Next-Gen GPU and Transformer Engine to Support AI Workloads for Safe and Secure Autonomous Vehicles; ZEEKR First Customer, With Initial Production Vehicles Planned for Early 2025. Tuesday, September 20, 2022 — GTC — NVIDIA today introduced NVIDIA DRIVE™ Thor, its next-generation centralized computer for safe and secure autonomous […]

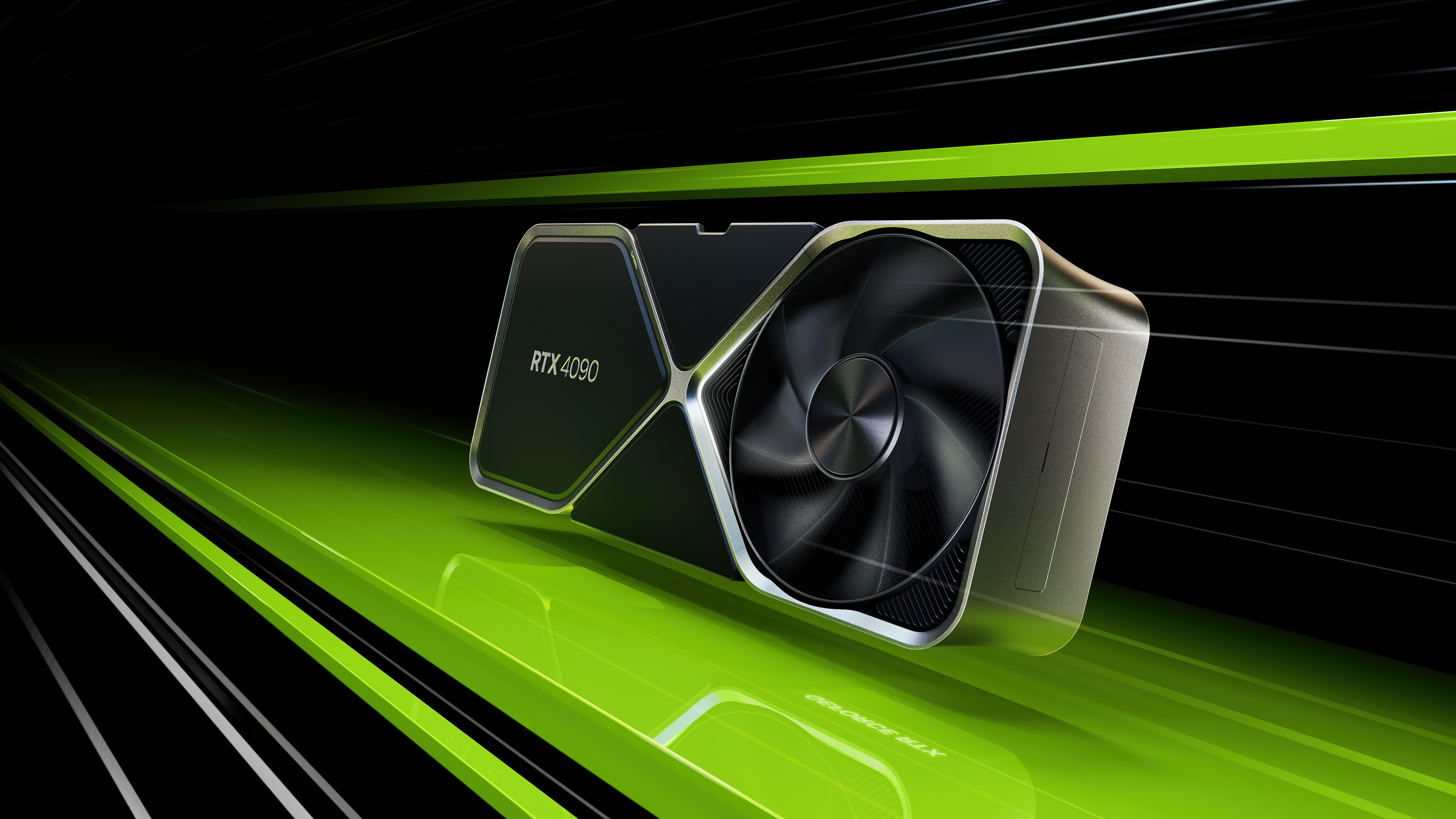

NVIDIA Delivers Quantum Leap in Performance, Introduces New Era of Neural Rendering With GeForce RTX 40 Series

Powered by Ada Lovelace Architecture and DLSS 3; Third-Gen RTX up to 4x Faster Than NVIDIA Ampere Architecture GPUs. Tuesday, September 20, 2022 – NVIDIA today unveiled the GeForce RTX® 40 Series of GPUs, designed to deliver revolutionary performance for gamers and creators, led by its new flagship, the RTX 4090 GPU, with up to […]

Arm Supports FP8: A New 8-bit Floating-point Interchange Format for Neural Network Processing

This blog post was originally published at Arm’s website. It is reprinted here with the permission of Arm. Enabling secure and ubiquitous Artificial Intelligence (AI) is a key priority for the Arm architecture. The potential for AI and machine learning (ML) is clear, with new use cases and benefits emerging almost daily – but alongside […]

“Open Standards: Powering the Future of Embedded Vision,” a Presentation from the Khronos Group

Neil Trevett, President of the Khronos Group and Vice President of Developer Ecosystems at NVIDIA, presents the “Open Standards: Powering the Future of Embedded Vision” tutorial at the May 2022 Embedded Vision Summit. Open standards play an important role in enabling interoperability for efficient deployment of vision-based systems. In this session, Trevett shares an update […]

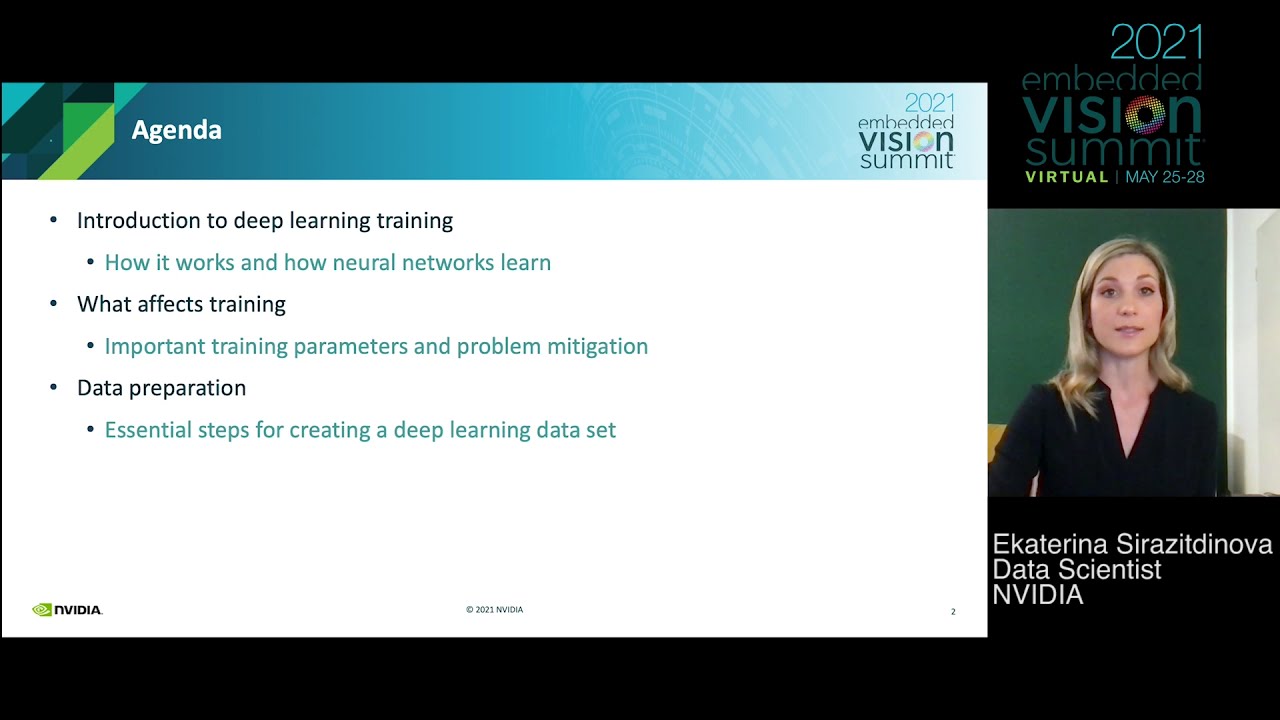

“Fundamentals of Training AI Models for Computer Vision and Video Analytics Applications,” a Presentation from NVIDIA

Ekaterina Sirazitdinova, Data Scientist at NVIDIA, presents the “Fundamentals of Training AI Models for Computer Vision and Video Analytics Applications” tutorial at the May 2022 Embedded Vision Summit. AI has become an important component of computer vision and video analytics applications. But creating AI-based solutions is a challenging process. To build a successful product, it […]

NVIDIA Jetson AGX Orin 32GB Production Modules Now Available; Partner Ecosystem Appliances and Servers Arrive

Nearly three dozen partners are offering feature-packed systems based on the new Jetson Orin module to help customers accelerate AI and robotics deployments. Bringing new AI and robotics applications and products to market, or supporting existing ones, can be challenging for developers and enterprises. The NVIDIA Jetson AGX Orin 32GB production module — available now […]

Sequitur Labs First to Provide Chip-to-Cloud Embedded Security in Support of New NVIDIA Jetson Orin Platform

EmSPARK Security Suite for Jetson AGX Orin helps protect autonomous machines at the edge. SEATTLE, August 3, 2022 /Business Wire/ – Sequitur Labs today announced that its EmSPARK™ Security Suite for the NVIDIA Jetson™ edge AI platform has been qualified with the new Jetson AGX Orin™ 32 GB module to support trial deployments of the […]

“Vision AI At the Edge: From Zero to Deployment Using Low-Code Development,” a Presentation from NVIDIA

Carlos Garcia-Sierra, Product Manager for DeepStream at NVIDIA, presents the “Vision AI At the Edge: From Zero to Deployment Using Low-Code Development” tutorial on behalf of Alvin Clark, Product Marketing Manager at NVIDIA, at the May 2022 Embedded Vision Summit. Development of vision AI applications for the edge presents complex challenges beyond model inference, often […]

“Accelerating the Creation of Custom, Production-Ready AI Models for Edge AI,” a Presentation from NVIDIA

Akhil Docca, Senior Product Marketing Manager at NVIDIA, presents the “Accelerating the Creation of Custom, Production-Ready AI Models for Edge AI” tutorial at the May 2022 Embedded Vision Summit. AI applications are powered by models. However, creating customized, production-ready AI models requires an army of data scientists and mountains of data. For businesses looking to […]

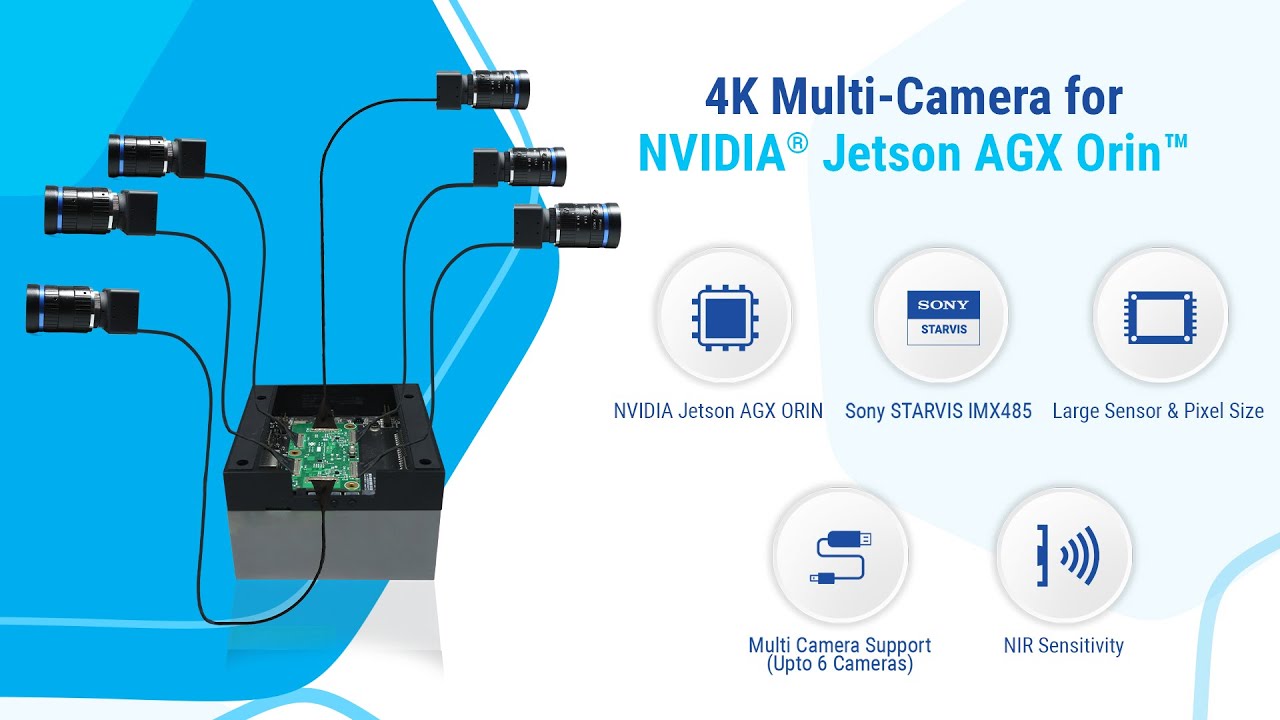

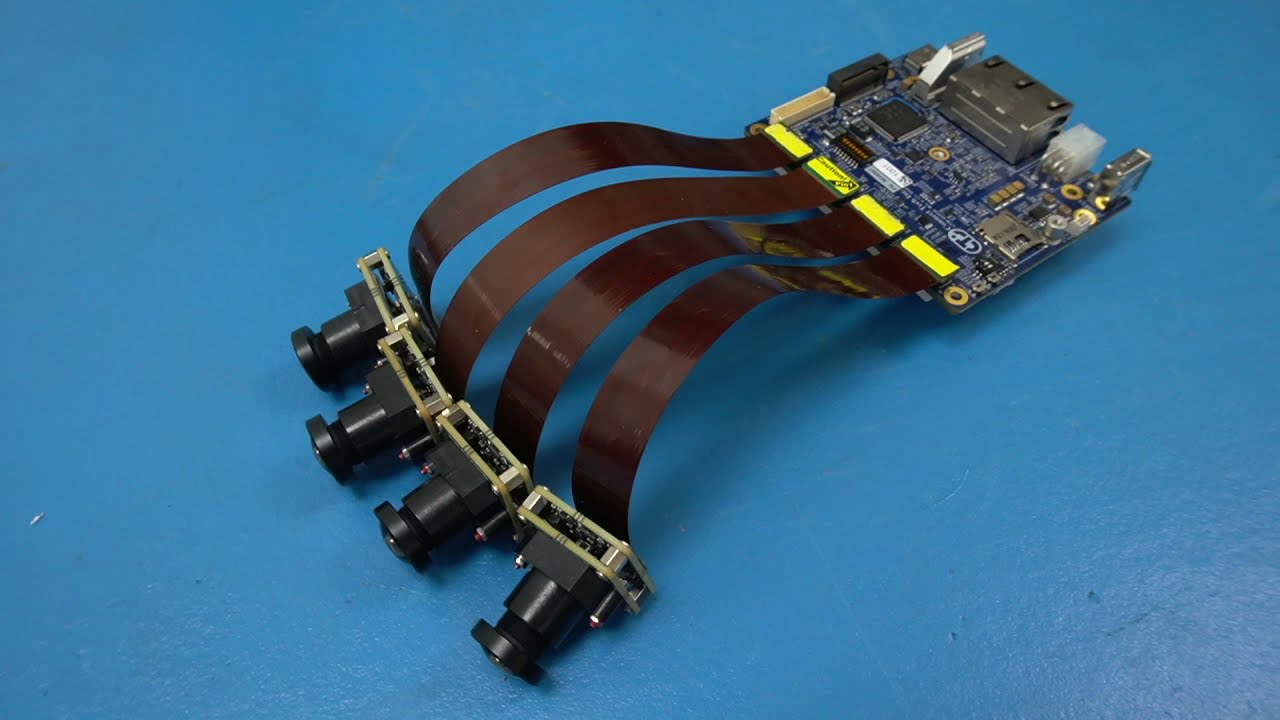

e-con Systems Launches Multi-camera Solution for NVIDIA Jetson AGX Orin Based on Sony STARVIS IMX485

NVIDIA Jetson AGX Orin | Sony STARVIS IMX485 | 1/1.2” sensor | Large pixel size | 4K resolution | Multi-camera solution. San Jose and Chennai (June 21, 2022) – e-con Systems, a leading embedded OEM camera company and an NVIDIA Elite Partner, today launched the e-CAM82_CUOAGX – a 4K ultra-low-light camera powered by the NVIDIA […]

NVIDIA DeepStream Technical Deep Dive: Multi-object Tracker

This video was originally published at the NVIDIA Developer YouTube channel. It is reprinted here with the permission of NVIDIA. NVIDIA’s DeepStream SDK delivers a complete streaming analytics toolkit for AI-based multi-sensor processing, video, audio and image understanding. This video covers the fundamentals of NVIDIA’s new tracker unified architecture. From the video, you will: Learn […]

VVDN Launches AI Development System Powered by NVIDIA Jetson Xavier NX

VVDN Technologies, a global provider of Engineering, Manufacturing, Digital Solutions, and Services, launched the NVIDIA Jetson-powered AI development system. The system combines a custom carrier board developed by VVDN with a Jetson Xavier™ NX module. The NVIDIA JetPack™ SDK supports the entire development system with a customized BSP. The development system is backed […]

Basler Announces Elite-Level Status in NVIDIA Partner Network to Expand Support for Jetson Edge AI Platform

After a long-standing, successful membership in the NVIDIA Partner Network, Basler AG is elevated to Elite partner-level status. The collaboration provides Basler customers with the opportunity to combine the NVIDIA Jetson platform with vision AI technology even more seamlessly and with an intensified level of support. Ahrensburg, June 16, 2022 – Basler offers fully integrated […]

Simplify AI Model Development with the Latest TAO Toolkit Release

June 6, 2022 – Today, NVIDIA announced the general availability of the latest version of the TAO Toolkit. As a low-code version of the NVIDIA Train, Adapt and Optimize (TAO) framework, the toolkit simplifies and accelerates the creation of AI models for speech and vision AI applications. With TAO, developers can use the power of […]

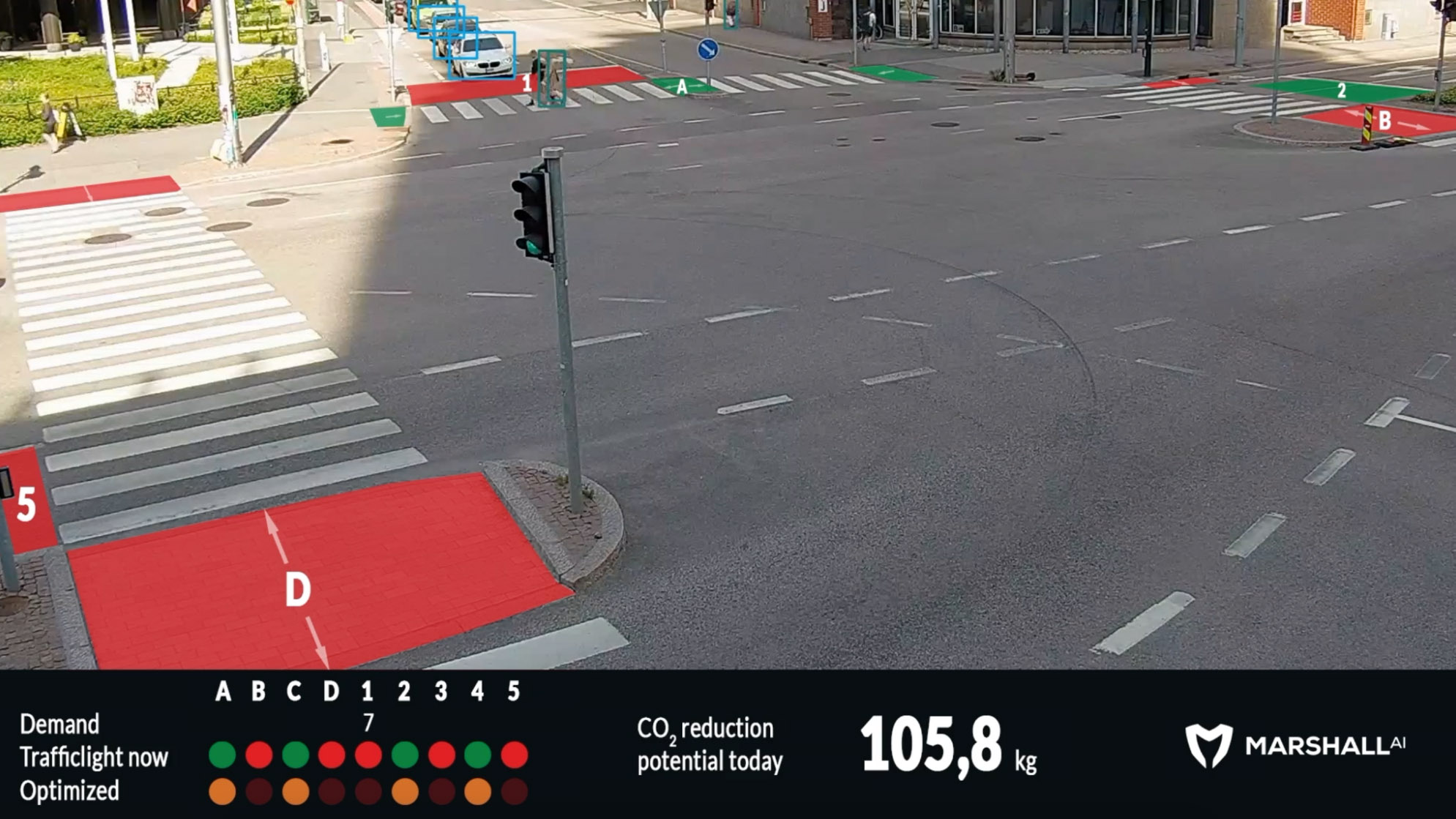

Metropolis Spotlight: MarshallAI Optimizes Traffic Management While Reducing Carbon Emissions

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. A major contributor to CO2 emissions in cities is traffic. City planners are always looking to reduce their carbon footprint and design efficient and sustainable infrastructure. NVIDIA Metropolis partner, MarshallAI, is helping cities improve their traffic management […]

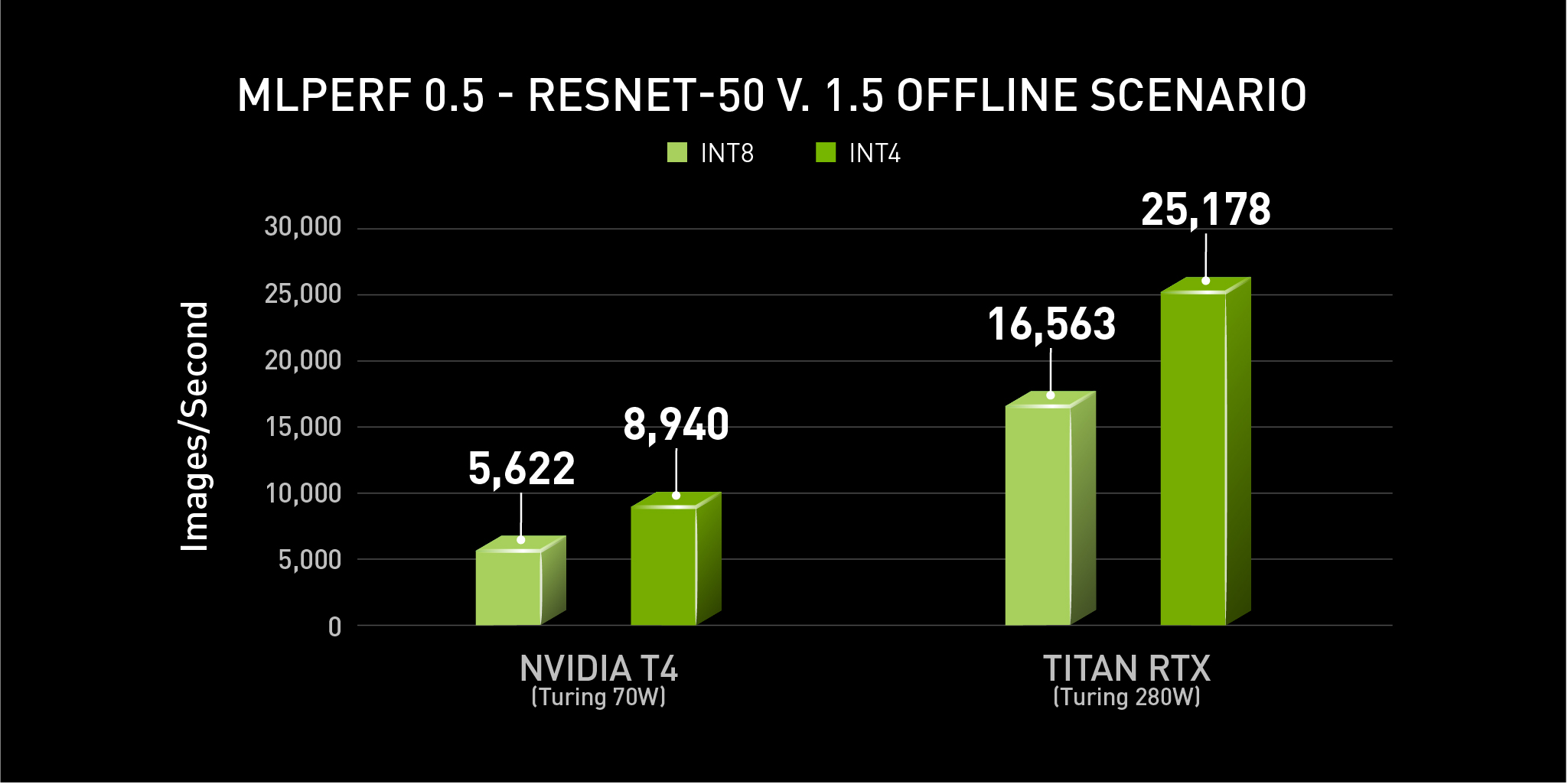

NVIDIA Orin Leaps Ahead in Edge AI, Boosting Leadership in MLPerf Tests

The just-released NVIDIA Jetson AGX Orin raised the bar for AI at the edge, adding to our overall top rankings in the latest industry inference benchmarks. In its debut in the industry MLPerf benchmarks, NVIDIA Orin, a low-power system-on-chip based on the NVIDIA Ampere architecture, set new records in AI inference, raising the bar in […]

Is the New NVIDIA Jetson AGX Orin Really a Game Changer? We Benchmarked It

This technical article was originally published by SmartCow AI Technologies. It is reprinted here with the permission of SmartCow AI Technologies. On March 22, 2022, NVIDIA announced the availability of its new edge computing platform, the Jetson AGX Orin™ developer kit. The production version is being released in Q4 2022 at roughly the same price […]

Guide to Computer Vision: Why It Matters and How It Helps Solve Problems

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. This post was written to enable the beginner developer community, especially those new to computer vision and computer science. NVIDIA recognizes that solving and benefiting the world’s visual computing challenges through computer vision and artificial intelligence requires […]

NVIDIA Announces Availability of Jetson AGX Orin Developer Kit to Advance Robotics and Edge AI

One Million Developers Now Deploying on Jetson; Microsoft Azure, John Deere, Medtronic, Amazon Web Services, Cisco, Hyundai Robotics, JD.com, Komatsu, Meituan Among Leading Adopters. Tuesday, March 22, 2022—GTC—NVIDIA today announced the availability of the NVIDIA® Jetson AGX Orin™ developer kit, the world’s most powerful, compact and energy-efficient AI supercomputer for advanced robotics, autonomous machines, and […]

NVIDIA Launches AI Computing Platform for Medical Devices and Computational Sensing Systems

Clara Holoscan MGX Medical-Grade Platform With NVIDIA Orin and NVIDIA AI Software Stack Powers Systems Built by Embedded-Computing Leaders. Tuesday, March 22, 2022—GTC—NVIDIA today introduced Clara Holoscan MGX™, a platform for the medical device industry to develop and deploy real-time AI applications at the edge, specifically designed to meet required regulatory standards. Clara Holoscan MGX […]

NVIDIA Enters Production With DRIVE Orin, Announces BYD and Lucid Group as New EV Customers, Unveils Next-Gen DRIVE Hyperion AV Platform

Momentum Accelerates as Trailblazing OEMs Adopt NVIDIA’s Programmable AI Compute Platform. Tuesday, March 22, 2022—GTC—NVIDIA today announced the start of production of its NVIDIA DRIVE Orin™ autonomous vehicle computer, showcased new automakers adopting the NVIDIA DRIVE™ platform, and unveiled the next generation of its NVIDIA DRIVE Hyperion™ architecture. The company also announced that its automotive […]

NVIDIA Introduces 60+ Updates to CUDA-X Libraries, Opening New Science and Industries to Accelerated Computing

New Capabilities Accelerate Work in Quantum Computing and 6G Research, Logistics Optimization Research, Robotics, Cybersecurity, Genomics and Drug Discovery, Data Analytics and More. Tuesday, March 22, 2022—GTC—NVIDIA today unveiled more than 60 updates to its CUDA-X™ collection of libraries, tools and technologies across a broad range of disciplines, which dramatically improve performance of the CUDA® […]

ADLINK Launches NVIDIA Jetson Xavier NX-based Industrial 4-channel PoE AI Vision System

Next-Gen AI vision system leverages new NVIDIA module for easy maintenance as an optimized development platform that simplifies AI to the edge. Summary: A vision system with the NVIDIA® Jetson Xavier™ NX supporting 4-channel PoE cameras, optimized PoE capability and Digital I/O for industrial AI inference. Smart PoE, PoE loss detection function and WatchDog indicator […]

Sequitur Labs Releases Turnkey Solution to Simplify Protection of Edge AI Models Powered by the NVIDIA Jetson Platform

Latest release of EmSPARK™ Security Suite for the NVIDIA Jetson platform features new trusted applications for AI protection. SEATTLE, March 22, 2022 – Sequitur Labs today announced a new release of its EmSPARK™ Security Suite for the NVIDIA Jetson™ edge AI platform, featuring a generally available development kit and pre-built Trusted Applications that provide robust […]

NVIDIA and SoftBank Group Announce Termination of NVIDIA’s Acquisition of Arm Limited

SoftBank to Explore Arm Public Offering. SANTA CLARA, Calif., and TOKYO – Feb. 7, 2022 – NVIDIA and SoftBank Group Corp. (“SBG” or “SoftBank”) today announced the termination of the previously announced transaction whereby NVIDIA would acquire Arm Limited (“Arm”) from SBG. The parties agreed to terminate the Agreement because of significant regulatory challenges preventing […]

Managing Video Streams in Runtime with the NVIDIA DeepStream SDK

This technical article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Transportation monitoring systems, healthcare, and retail have all benefited greatly from intelligent video analytics (IVA). DeepStream is an IVA SDK. DeepStream enables you to attach and detach video streams at runtime without affecting the entire deployment. This […]

AI Optimization Technology Company Nota Selected as NVIDIA Inception Premier Member

January 17, 2022— Nota (CEO Myungsu Chae), an AI optimization technology company, announced that it has been promoted to Premier status in the NVIDIA Inception program. NVIDIA Inception nurtures cutting-edge startups revolutionizing industries with advancements in AI and data science. The free program has 9,000+ members who are given access to the best technical tools, […]

e-CAM24_CUNX: Color Global Shutter Camera to Capture Fast Moving Objects

This video was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Today, there are several vision applications that require cameras to capture fast-moving objects without any rolling shutter artifacts. They include license plate recognition, advanced driver-assistance systems, robotic vision, drones, factory automation, gesture recognition, traffic monitoring, and […]

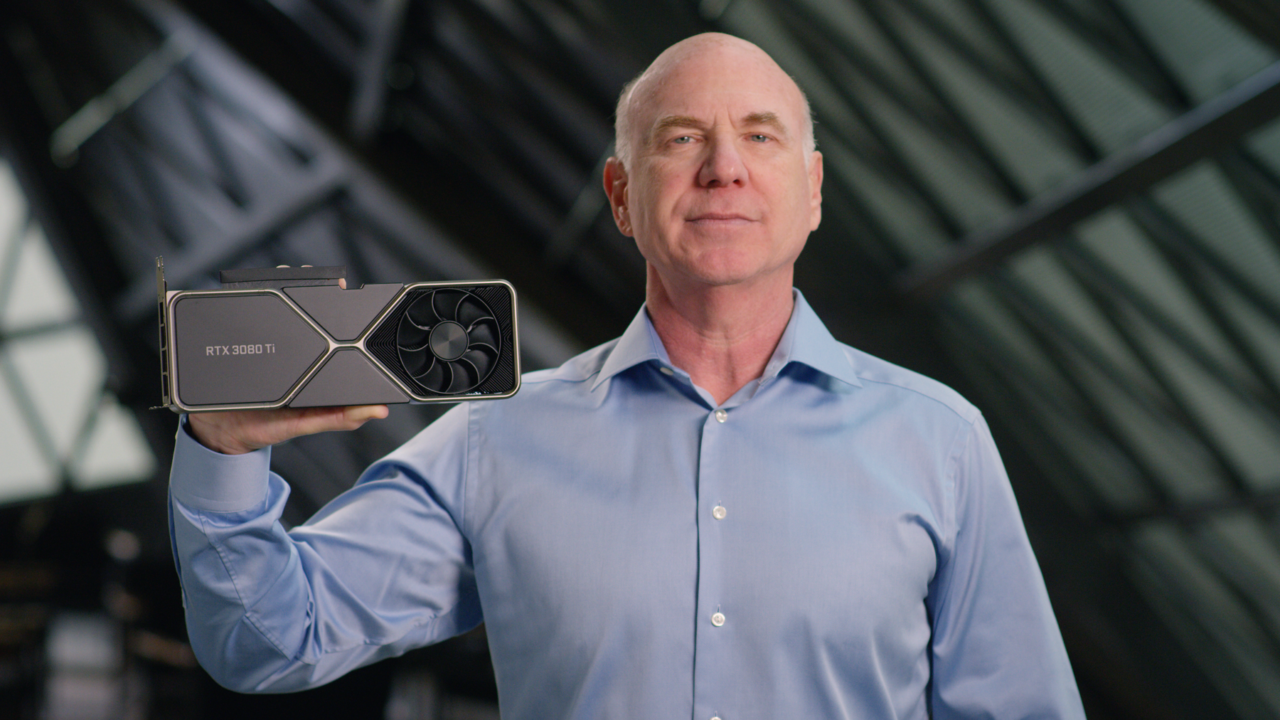

NVIDIA Expands Reach With New GeForce Laptops and Desktops, GeForce NOW Partners, and Omniverse for Creators

160+ Gaming and Studio Laptop Designs, GeForce RTX 3080 Ti for Laptops and RTX 3050 for Desktops; More Electronic Arts Games, Samsung TVs and AT&T for GeForce NOW; Omniverse Extended to Millions of Creators. Tuesday, January 4, 2022—CES—NVIDIA today set out the next direction of the ultimate platform for gamers and creators, unveiling more than 160 gaming and […]

Validating NVIDIA DRIVE Sim Camera Models

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Autonomous vehicles require large-scale development and testing in a wide range of scenarios before they can be deployed. Simulation can address these challenges by delivering scalable, repeatable environments for autonomous vehicles to encounter the rare and dangerous […]

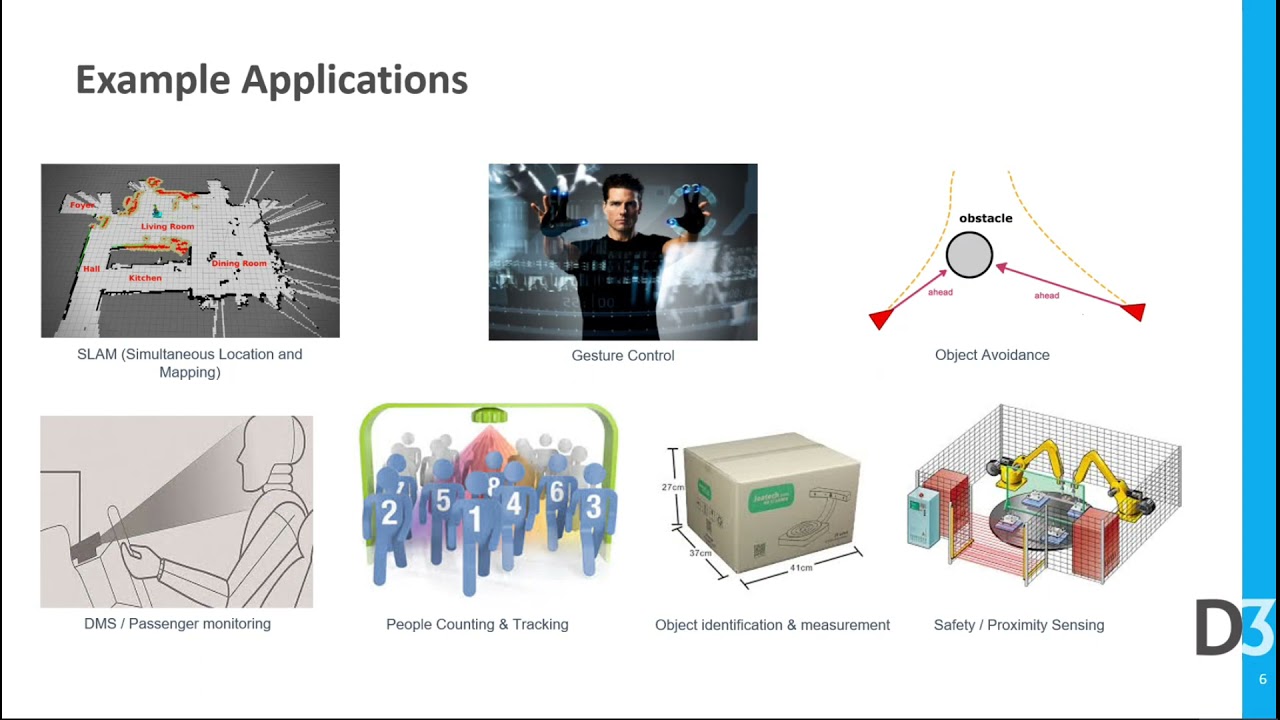

D3 Engineering Demonstration of a Synchronized 2D/3D Time-of-Flight Multi-camera Application on NVIDIA’s Jetson AGX Xavier

Jason Enslin, Product Manager for NVIDIA Jetson and Cameras at NVIDIA partner D3 Engineering, demonstrates the company’s latest edge AI and vision technologies and products at the 2021 Embedded Vision Summit. Specifically, Enslin demonstrates a synchronized 2D and 3D time-of-flight multi-camera application on NVIDIA’s Jetson AGX Xavier. With the growing need for video, distance measurement […]

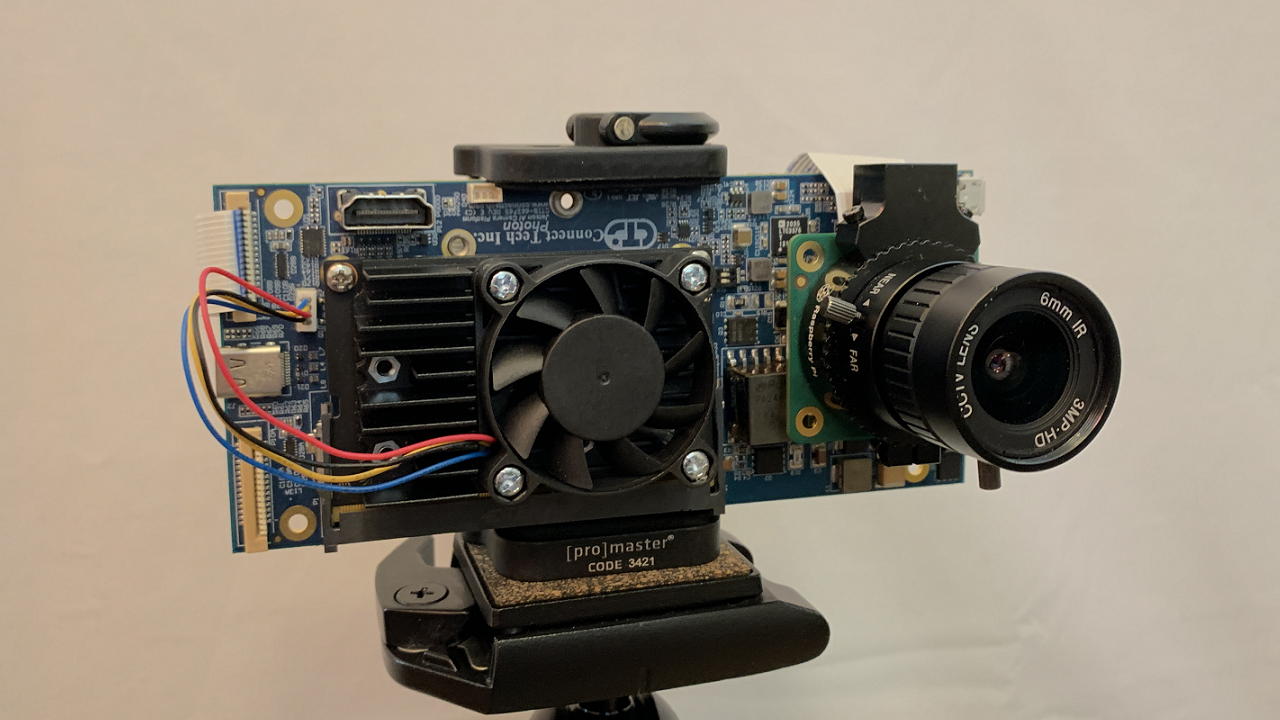

Connect Tech Demonstration of Integrating Vision Sensors with NVIDIA’s Jetson Edge Applications

Patrick Dietrich, Chief Technology Officer of NVIDIA partner Connect Tech, demonstrates the company’s latest edge AI and vision technologies and products at the 2021 Embedded Vision Summit. Specifically, Dietrich demonstrates how to integrate vision sensors with NVIDIA’s Jetson edge applications. Since the inception of NVIDIA’s Jetson platform, the capabilities of edge AI and vision-enabled systems […]

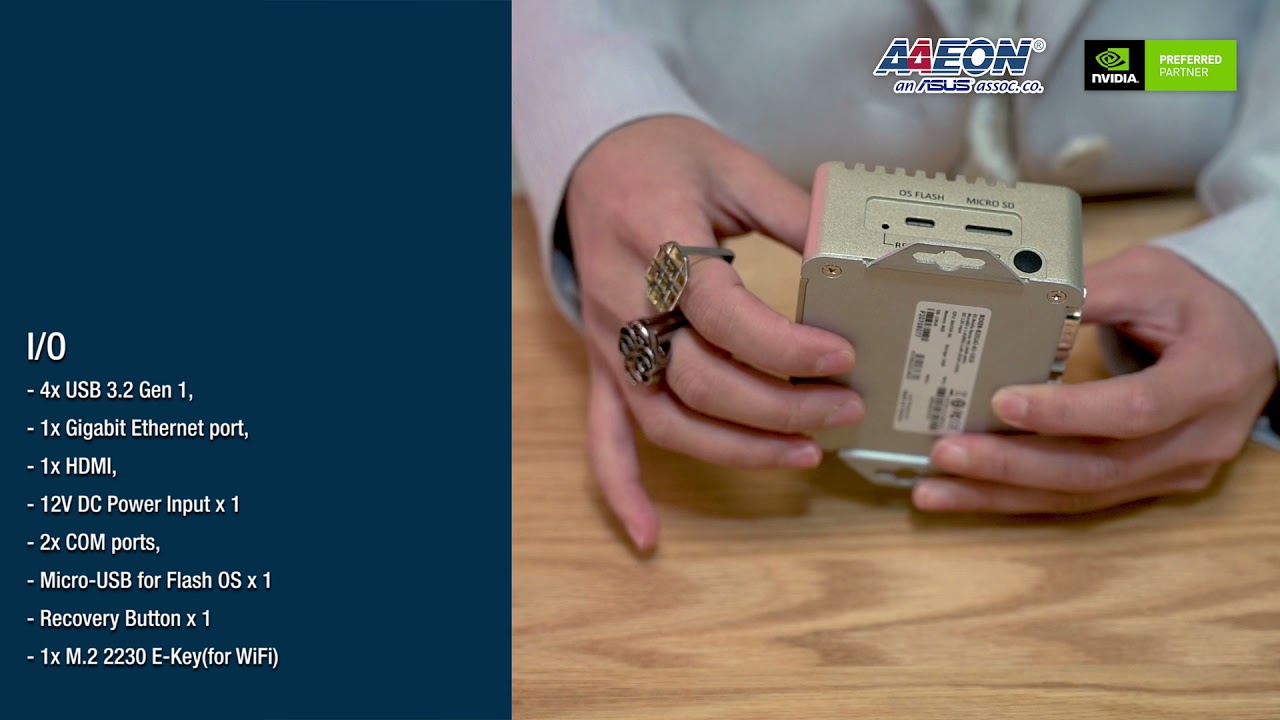

AAEON Demonstration of Its Compact Fanless Embedded BOX PC Based on NVIDIA’s Jetson Xavier NX SoC

Iris Huang, Product Sales Manager, and Owen Wei, AI & IOT Product Solution Manager, both of NVIDIA partner AAEON, demonstrate the company’s latest edge AI and vision technologies and products at the 2021 Embedded Vision Summit. Specifically, Huang and Wei demonstrate AAEON’s BOXER-8251AI AI@Edge compact fanless embedded BOX PC, based on NVIDIA’s Jetson Xavier NX […]

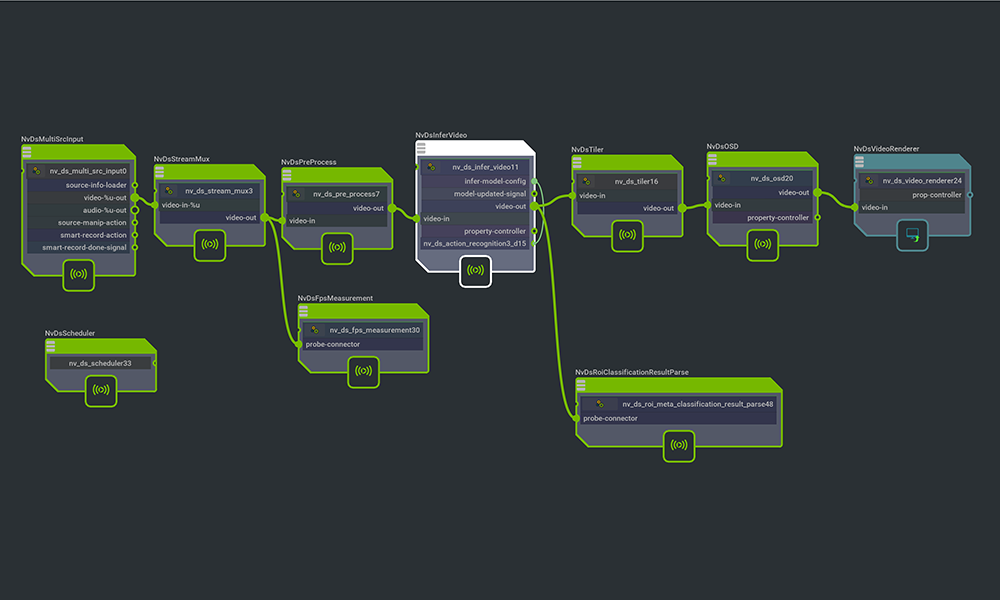

NVIDIA Brings Low-Code Development to Vision AI with DeepStream 6.0

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. DeepStream SDK 6.0 is now available for download. A powerful AI streaming analytics toolkit, DeepStream helps developers build high-performance, low-latency, complex video analytics applications and services. Graph Composer: This release introduces Graph Composer, a new low-code programming […]

NVIDIA Announces Omniverse Replicator Synthetic-Data-Generation Engine for Training AIs

First Omniverse Replicator-Based Applications, DRIVE Sim and Isaac Sim, Accelerate Development of Autonomous Vehicles and Robots. Tuesday, November 9, 2021—GTC—NVIDIA today announced NVIDIA Omniverse Replicator, a powerful synthetic-data-generation engine that produces physically simulated synthetic data for training deep neural networks. In its first implementations of the engine, the company introduced two applications for generating synthetic […]

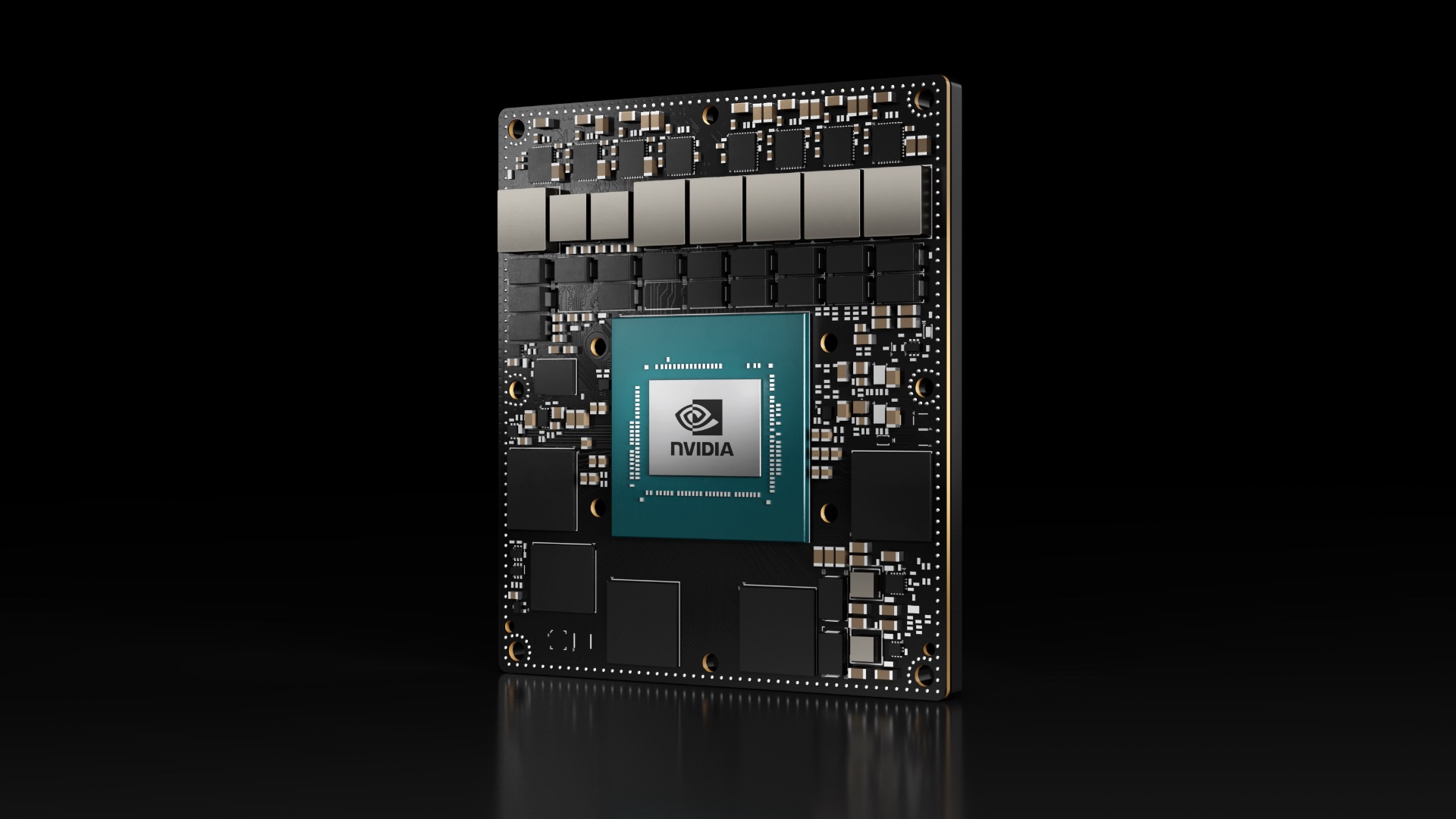

NVIDIA Sets Path for Future of Edge AI and Autonomous Machines With New Jetson AGX Orin Robotics Computer

NVIDIA Ampere Architecture Comes to Jetson, Delivering up to 200 TOPS and 6x Performance Gain. Tuesday, November 9, 2021—GTC—NVIDIA today introduced NVIDIA Jetson AGX Orin™, the world’s smallest, most powerful and energy-efficient AI supercomputer for robotics, autonomous machines, medical devices and other forms of embedded computing at the edge. Built on the NVIDIA Ampere architecture, […]

NVIDIA Announces Major Updates to Triton Inference Server as 25,000+ Companies Worldwide Deploy NVIDIA AI Inference

Capital One, Microsoft, Samsung Medison, Siemens Energy, Snap Among Industry Leaders Worldwide Using Platform. Tuesday, November 9, 2021—GTC—NVIDIA today announced major updates to its AI inference platform, which is now being used by Capital One, Microsoft, Samsung Medison, Siemens Energy and Snap, among its 25,000+ customers. The updates include new capabilities in the open source […]

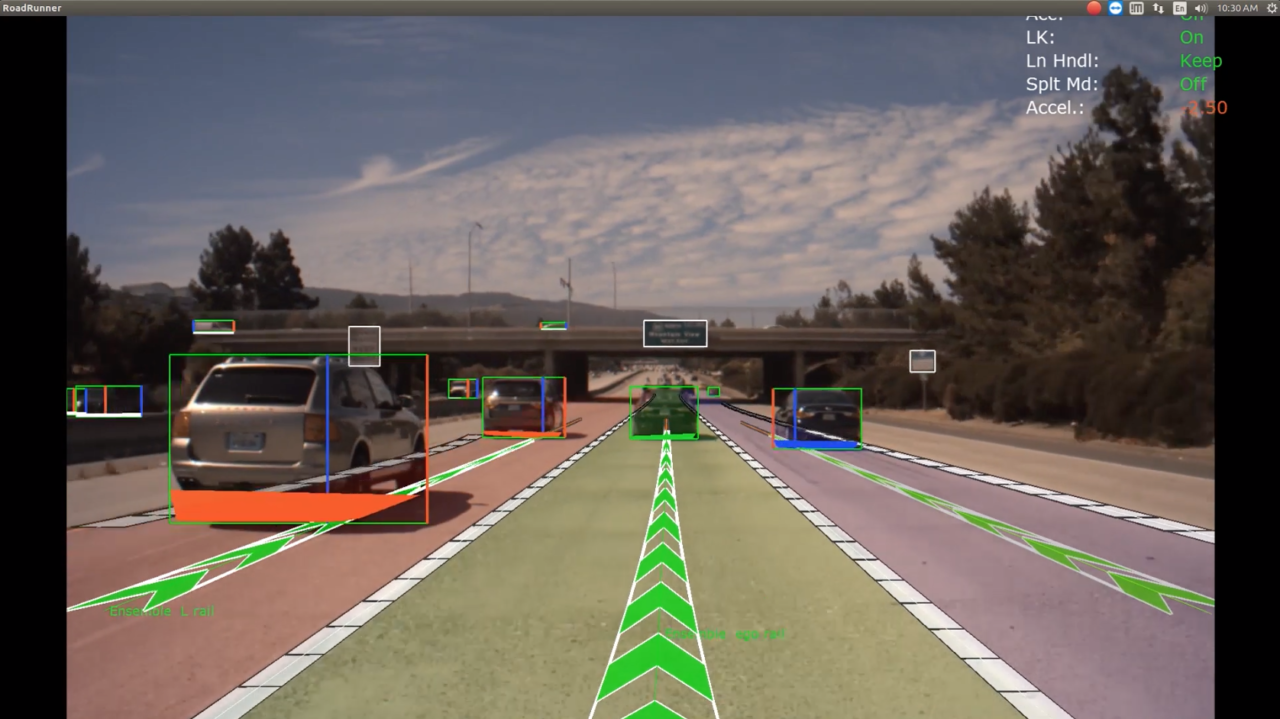

Looks Can Be Perceiving: Startups Build Highly Accurate Perception Software on NVIDIA DRIVE

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. High-performance, energy-efficient AI compute is heightening autonomous vehicles’ detection capabilities. For autonomous vehicles, what’s on the surface matters. While humans are taught to avoid snap judgments, self-driving cars must be able to see, detect and act quickly […]

“Getting Started with Vision AI Model Training,” a Presentation from NVIDIA

Ekaterina Sirazitdinova, Data Scientist at NVIDIA, presents the “Getting Started with Vision AI Model Training” tutorial at the May 2021 Embedded Vision Summit. In many modern vision and graphics applications, deep neural networks (DNNs) enable state-of-the-art performance for tasks like image classification, object detection and segmentation, quality enhancement and even new content generation. In this […]

“Khronos Group Standards: Powering the Future of Embedded Vision,” a Presentation from the Khronos Group

Neil Trevett, Vice President of Developer Ecosystems at NVIDIA and President of the Khronos Group, presents the “Khronos Group Standards: Powering the Future of Embedded Vision” tutorial at the May 2021 Embedded Vision Summit. Open standards play an important role in enabling interoperability for faster, easier deployment of vision-based systems. With advances in machine learning, […]

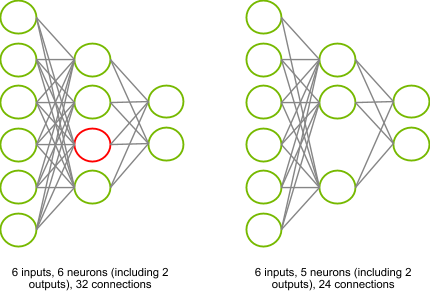

What Is a Machine Learning Model?

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Fueled by data, ML models are the mathematical engines of AI, expressions of algorithms that find patterns and make predictions faster than a human can. When you shop for a car, the first question is what model […]

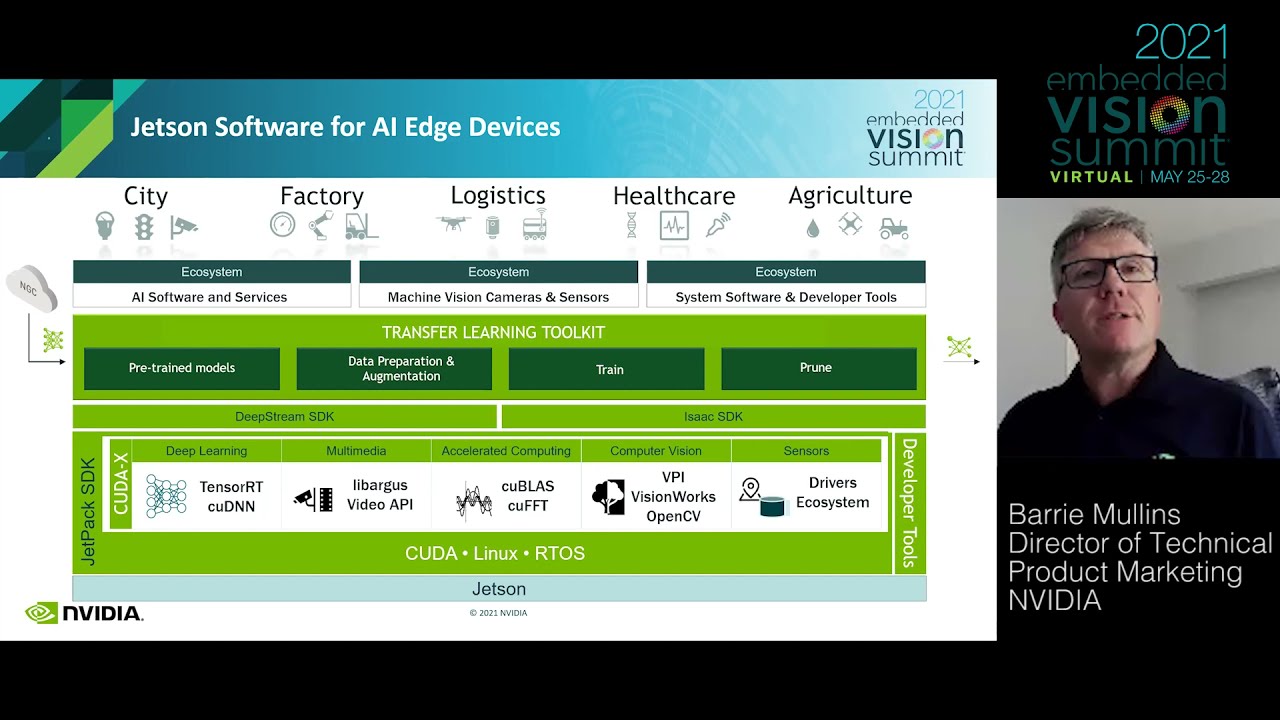

“Streamlining Development of Edge AI Applications,” a Presentation from NVIDIA

Barrie Mullins, Director of Technical Product Marketing at NVIDIA, presents the “Streamlining Development of Edge AI Applications” tutorial at the May 2021 Embedded Vision Summit. Edge AI provides benefits for cost, latency, privacy, and connectivity. Developing and deploying optimized, accurate and effective AI on edge-based systems is a time-consuming, challenging and complex process. In this […]

FRAMOS Sensor Module Ecosystem Supporting GMSL2 for Flexible Cable Routing and Ease of Installation into Small Spaces

7/28/21 – FRAMOS offers camera data transfer over a single coax cable using the GMSL2 protocol on the Jetson AGX Xavier platform. Camera developers utilizing the FRAMOS Sensor Module Ecosystem can integrate the highly flexible GMSL2 technology with image sensors producing up to 8MP resolution at 30fps over a 15 m cable. Developers benefit from […]

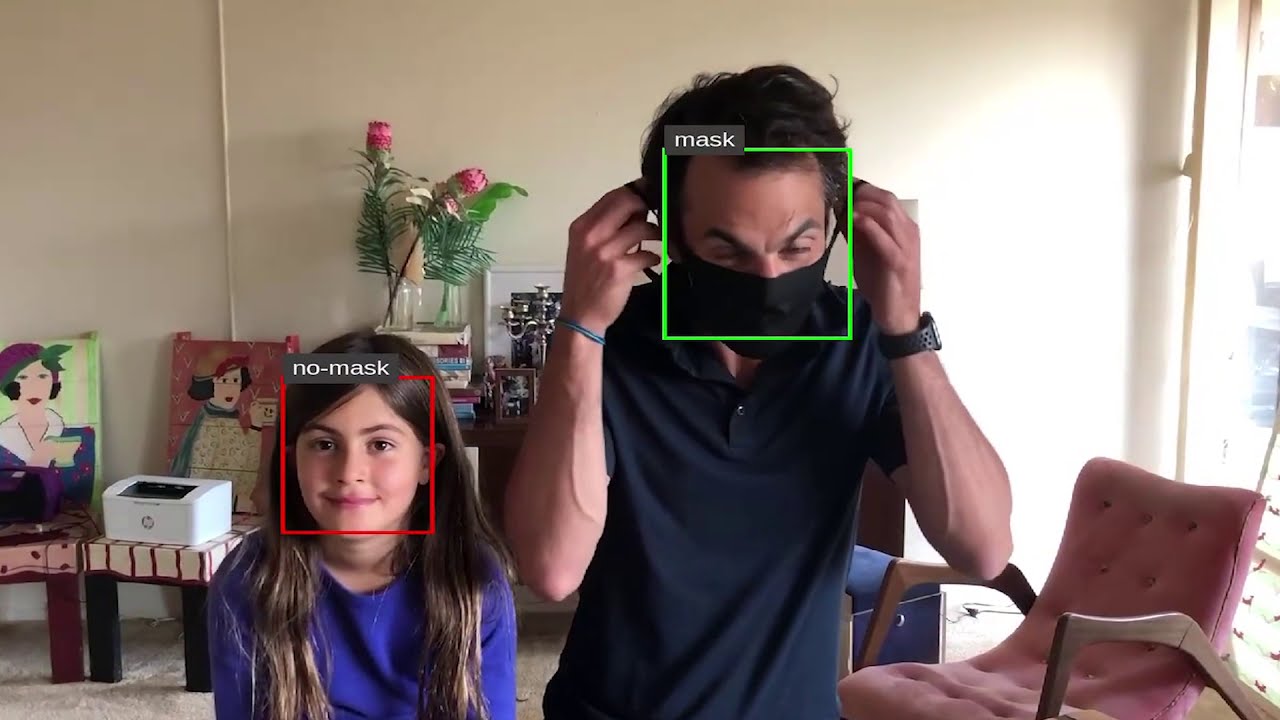

“A Mask Detection Smart Camera Using the NVIDIA Jetson Nano: System Architecture and Developer Experience,” a Presentation from BDTI and Tryolabs

Evan Juras, Computer Vision Engineer at BDTI, and Braulio Ríos, Machine Learning Engineer at Tryolabs, co-present the “A Mask Detection Smart Camera Using the NVIDIA Jetson Nano: System Architecture and Developer Experience” tutorial at the May 2021 Embedded Vision Summit. MaskCam is a prototype reference design for a smart camera that counts the number of […]

NVIDIA Inference Breakthrough Makes Conversational AI Smarter, More Interactive From Cloud to Edge

Tuesday, July 20, 2021 – NVIDIA today launched TensorRT™ 8, the eighth generation of the company’s AI software, which slashes inference time in half for language queries — enabling developers to build the world’s best-performing search engines, ad recommendations and chatbots and offer them from the cloud to the edge. TensorRT 8’s optimizations deliver record-setting […]

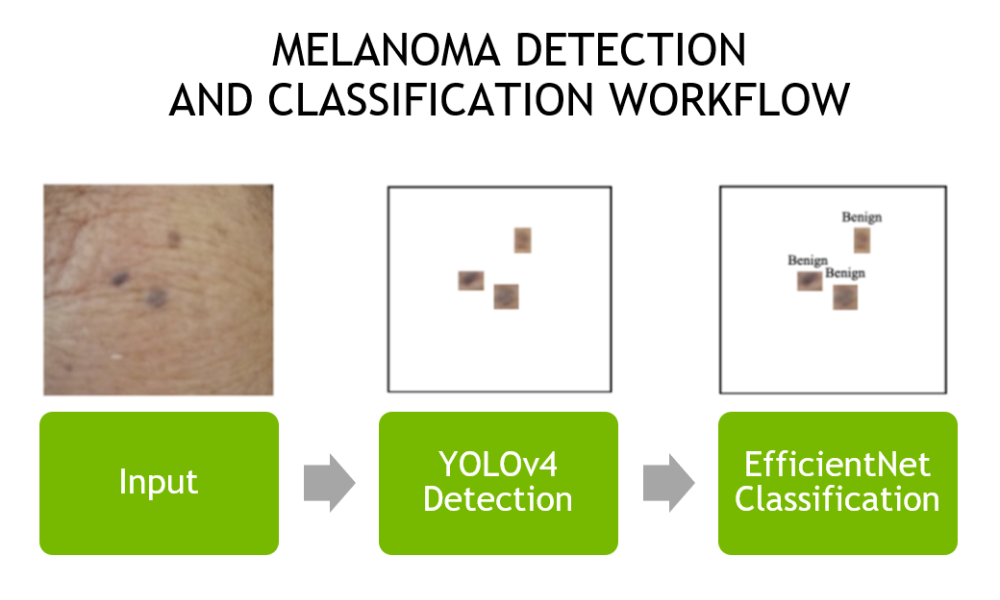

Building Real-time Dermatology Classification with NVIDIA Clara AGX

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. The most commonly diagnosed cancer in the US today is skin cancer. There are three main variants: melanoma, basal cell carcinoma (BCC), and squamous cell carcinoma (SCC). Though melanoma only accounts for roughly 1% of all skin […]

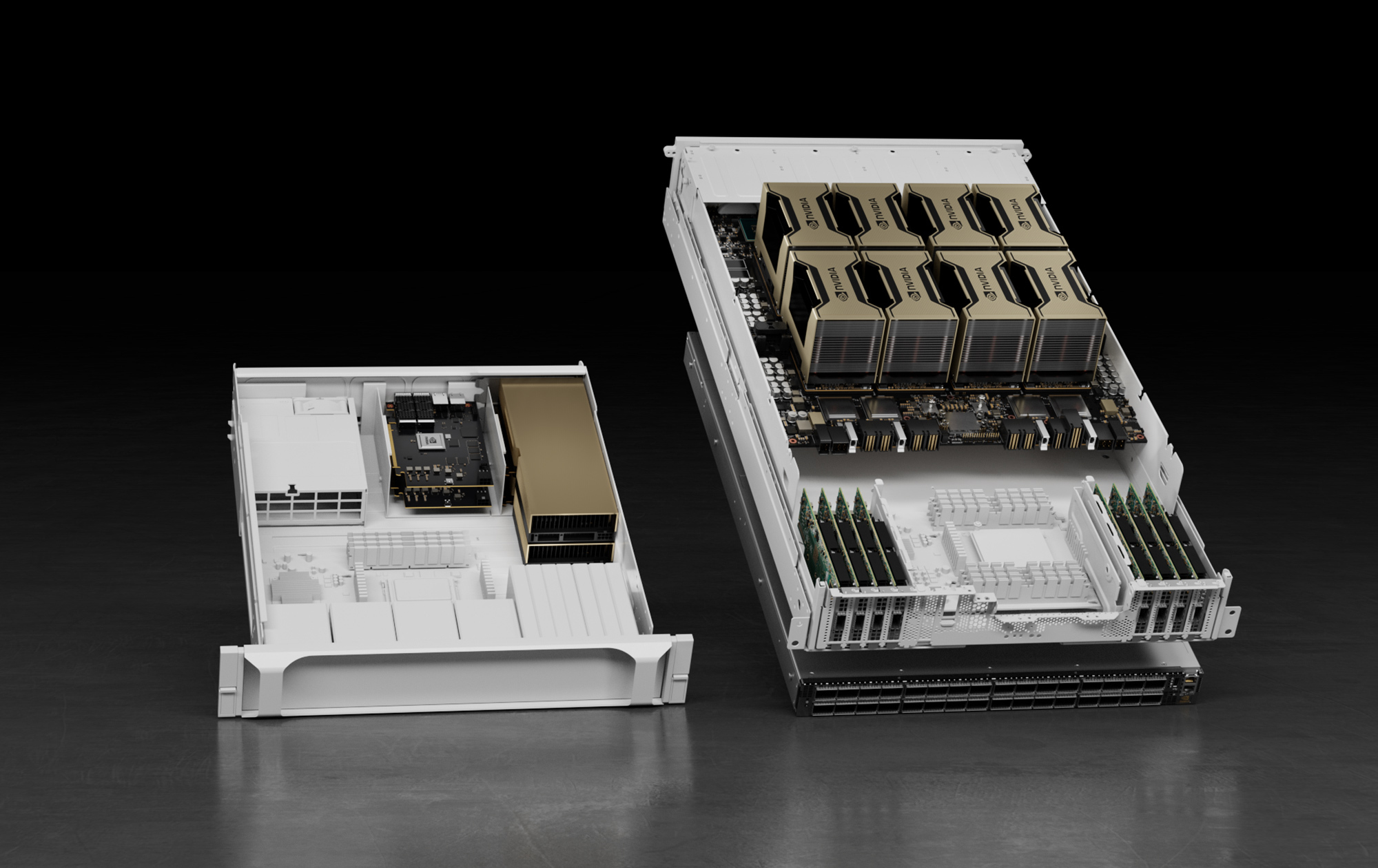

NVIDIA and Global Partners Launch New HGX A100 Systems to Accelerate Industrial AI and HPC

Wide Range of HPC Systems and Cloud Services Powered by HGX Now Supercharged with NVIDIA A100 80G PCIe, NVIDIA NDR 400G InfiniBand, NVIDIA Magnum IO. Monday, June 28, 2021—ISC—NVIDIA today announced it is turbocharging the NVIDIA HGX™ AI supercomputing platform with new technologies that fuse AI with high performance computing, making supercomputing more useful to […]

Tough Customer: NVIDIA Unveils Jetson AGX Xavier Industrial Module

New ruggedized module is engineered to bring AI to harsh, safety-critical environments. From factories and farms to refineries and construction sites, the world is full of places that are hot, dirty, noisy, potentially dangerous — and critical to keeping industry humming. These places all need inspection and maintenance alongside their everyday operations, but, given safety […]

NVIDIA Research: Fast Uncertainty Quantification for Deep Object Pose Estimation

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Researchers from NVIDIA, University of Texas at Austin and Caltech developed a simple, efficient, and plug-and-play uncertainty quantification method for the 6-DoF (degrees of freedom) object pose estimation task, using an ensemble of K pre-trained estimators with […]

NVIDIA Brings Gaming, Enterprise Innovations to COMPUTEX 2021

New gaming flagship, new laptops, RTX momentum and AI for every company featured in a news-packed virtual keynote. May 31, 2021 – Touching on gaming, enterprise AI — and the advances underpinning both — NVIDIA’s Jeff Fisher and Manuvir Das Tuesday delivered a news-packed virtual keynote at COMPUTEX 2021. Fisher, senior vice president of NVIDIA’s […]

FRAMOS Announces Industry’s First Integration of Sony’s SLVS-EC on the NVIDIA Jetson Edge AI Platform

Taufkirchen, May 19, 2021 – FRAMOS, a global custom vision solutions and component supplier, today announced a new integration supporting SLVS-EC – Sony’s next-gen data interface – natively on the NVIDIA Jetson platform. This implementation is based on an existing IMX530 FRAMOS Sensor Module (FSM) with a 4th Generation Sony Pregius Global Shutter sensor and […]

NVIDIA Transforms Mainstream Laptops into Gaming Powerhouses with GeForce RTX 30 Series

Brings RTX Real-Time Ray Tracing and AI-Based DLSS to Tens of Millions More Gamers and Creators with $799 Portable Powerhouses. SANTA CLARA, Calif., May 11, 2021 (GLOBE NEWSWIRE) — NVIDIA today announced a new wave of GeForce RTX™ laptops from the world’s top manufacturers, delivering real-time ray tracing and AI-based DLSS to tens of millions […]

Extending NVIDIA Performance Leadership with MLPerf Inference 1.0 Results

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Inference is where we interact with AI. Chatbots, digital assistants, recommendation engines, fraud protection services, and other applications that you use every day—all are powered by AI. Those deployed applications use inference to get you the […]

Maximize Every Pixel with IQ Tuning from FRAMOS on the NVIDIA Jetson Platform

Taufkirchen (Germany), April 14, 2021 – FRAMOS now provides sensor-specific ISP tuning for the NVIDIA Jetson platform to maximize image quality (IQ), leveraging a new state-of-the-art in-house lab. With this new offering, FRAMOS improves the image quality of all sensors across the entire Sensor Module Ecosystem and provides an even higher level of imaging quality […]

Volvo Cars, Zoox, SAIC and More Join Growing Range of Autonomous Vehicle Makers Using New NVIDIA DRIVE Solutions

NVIDIA’s Automotive Pipeline Now Exceeds $8 Billion for AI-Based Mobility Solutions. Monday, April 12, 2021 — GTC — Volvo Cars, Zoox and SAIC are among the growing ranks of leading transportation companies using the newest NVIDIA DRIVE™ solutions to power their next-generation AI-based autonomous vehicles, NVIDIA announced today. NVIDIA’s design-win pipeline for NVIDIA DRIVE now totals […]

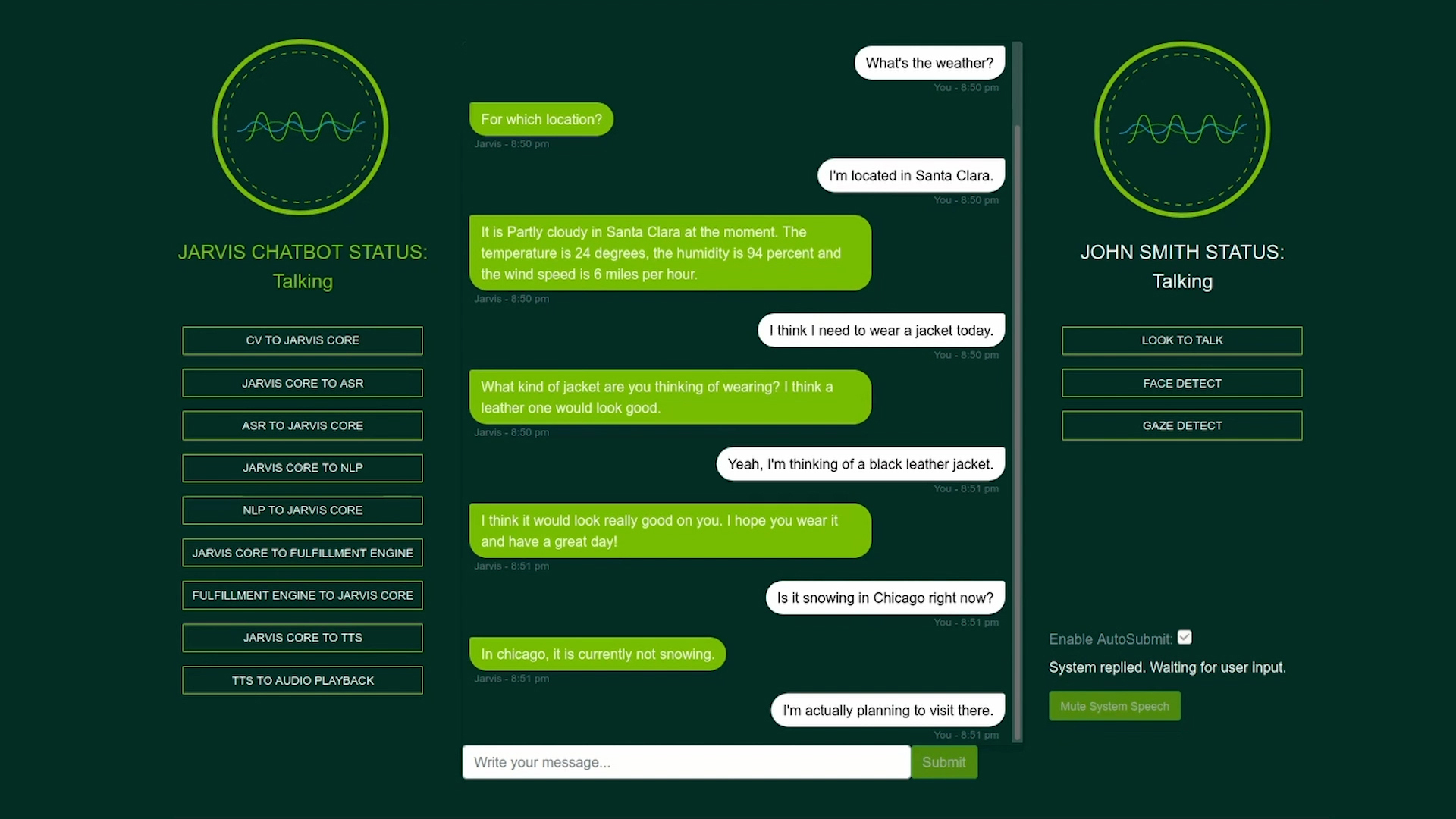

NVIDIA Announces Availability of Jarvis Interactive Conversational AI Framework

Pre-Trained Deep Learning Models and Software Tools Enable Developers to Adapt Jarvis for All Industries; Easily Deployed from Any Cloud to Edge. Monday, April 12, 2021 — GTC — NVIDIA today announced availability of the NVIDIA Jarvis framework, providing developers with state-of-the-art pre-trained deep learning models and software tools to create interactive conversational AI services […]

NVIDIA and Global Computer Makers Launch Industry-Standard Enterprise Server Platforms for AI

NVIDIA-Certified Servers with NVIDIA AI Enterprise Software Running on VMware vSphere Simplify and Accelerate Adoption of AI. Monday, April 12, 2021 — GTC — NVIDIA today introduced a new class of NVIDIA-Certified Systems™, bringing AI within reach for organizations that run their applications on industry-standard enterprise data center infrastructure. These include high-volume enterprise servers from […]

NVIDIA and Partners Collaborate on Arm Computing for Cloud, HPC, Edge, PC

NVIDIA GPU + AWS Graviton2-Based Amazon EC2 Instances, HPC Developer Kit with Ampere Computing CPU and Dual GPUs, More Initiatives Help Expand Opportunities for Arm-Based Solutions. Monday, April 12, 2021 — GTC — NVIDIA today announced a series of collaborations that combine NVIDIA GPUs and software with Arm®-based CPUs — extending the benefits of Arm’s flexible, […]

NVIDIA Announces New DGX SuperPOD, the First Cloud-Native, Multi-Tenant Supercomputer, Opening World of AI to Enterprise

Customers Worldwide Advance Conversational AI, Drug Discovery, Autonomous Vehicles and More with DGX SuperPOD. Monday, April 12, 2021 — GTC — NVIDIA today unveiled the world’s first cloud-native, multi-tenant AI supercomputer — the next-generation NVIDIA DGX SuperPOD™ featuring NVIDIA BlueField®-2 DPUs. Fortifying the DGX SuperPOD with BlueField-2 DPUs — data processing units that offload, accelerate and […]

New NVIDIA RTX GPUs Power Next Generation of Workstations and PCs for Millions of Artists, Designers, Engineers and Virtual Desktop Users

NVIDIA Ampere Architecture GPUs Provide Computing Power for Professionals to Work from Anywhere. Monday, April 12, 2021 — GTC — NVIDIA today announced a range of eight new NVIDIA Ampere architecture GPUs for next-generation laptops, desktops and servers that make it possible for professionals to work from wherever they choose, without sacrificing quality or time. […]

NVIDIA Unveils NVIDIA DRIVE Atlan, an AI Data Center on Wheels for Next-Gen Autonomous Vehicles

Fusing AI and BlueField Technology on a Single Chip, New SoC Delivers More Than 1,000 TOPS and Data-Center-Grade Security to Autonomous Machines. SANTA CLARA, Calif., April 12, 2021 — GTC — NVIDIA today revealed its next-generation AI-enabled processor for autonomous vehicles, NVIDIA DRIVE™ Atlan, which will deliver more than 1,000 trillion operations per second (TOPS) and targets automakers’ […]

NVIDIA Announces CPU for Giant AI and High Performance Computing Workloads

NVIDIA Grace is the company’s first data center CPU, an Arm-based processor that will deliver 10x the performance of today’s fastest servers on the most complex AI and high performance computing workloads. ‘Grace’ CPU delivers 10x performance leap for systems training giant AI models, using energy-efficient Arm cores. Swiss Supercomputing Center and US Department of […]

NVIDIA-Powered Systems Ready to Bask in Ice Lake

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Data-hungry workloads such as machine learning and data analytics have become commonplace. To cope with these compute-intensive tasks, enterprises need accelerated servers that are optimized for high performance. Intel’s 3rd Gen Intel Xeon Scalable processors (code-named “Ice […]

NVIDIA Releases Updates to CUDA-X AI Software

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. NVIDIA CUDA-X AI is a collection of deep learning libraries for researchers and software developers to build high performance GPU-accelerated applications for conversational AI, recommendation systems and computer vision. Learn what’s new in the latest releases of CUDA-X AI libraries. […]

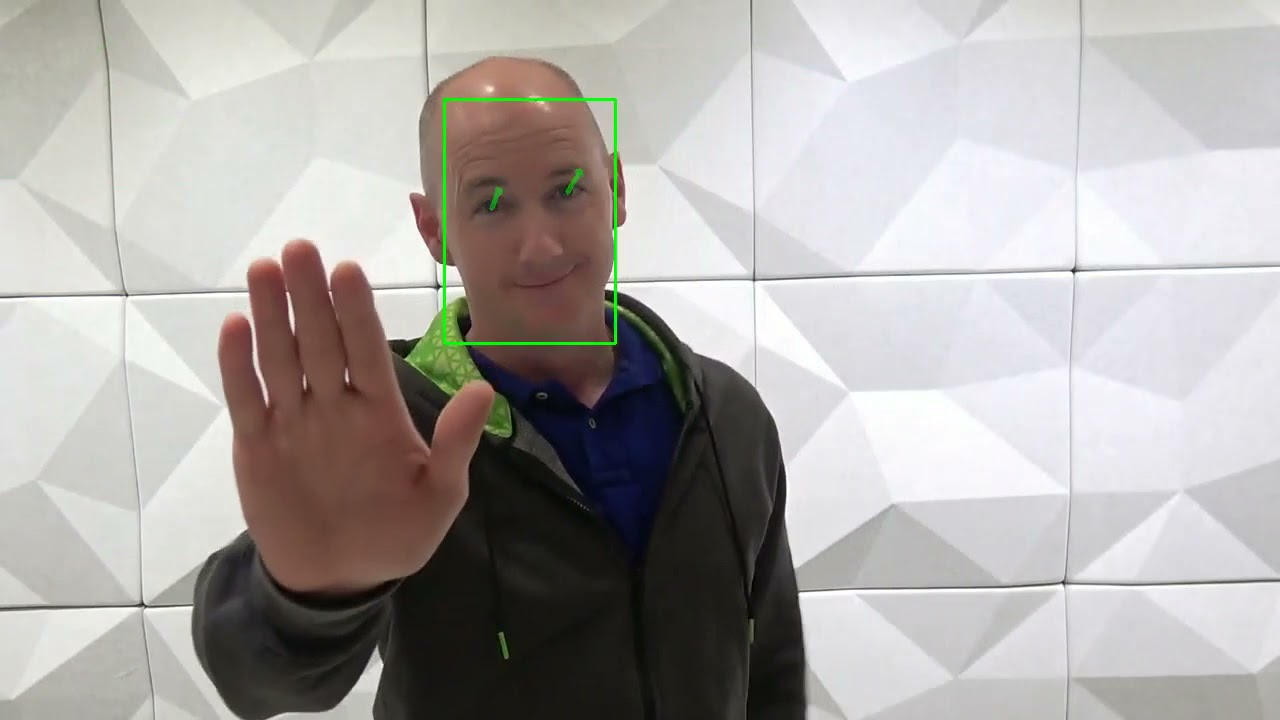

BDTI, Jabil, NVIDIA and Tryolabs Demonstration of AI-based Face Mask Detection and Analytics

BDTI and its partners, Tryolabs S.A. and Jabil Optics, are delighted to announce MaskCam: an open-source smart camera prototype reference design based on the NVIDIA Jetson Nano capable of estimating the number and percentage of people wearing face masks in its field of view. MaskCam was developed as part of an independent, hands-on evaluation of […]

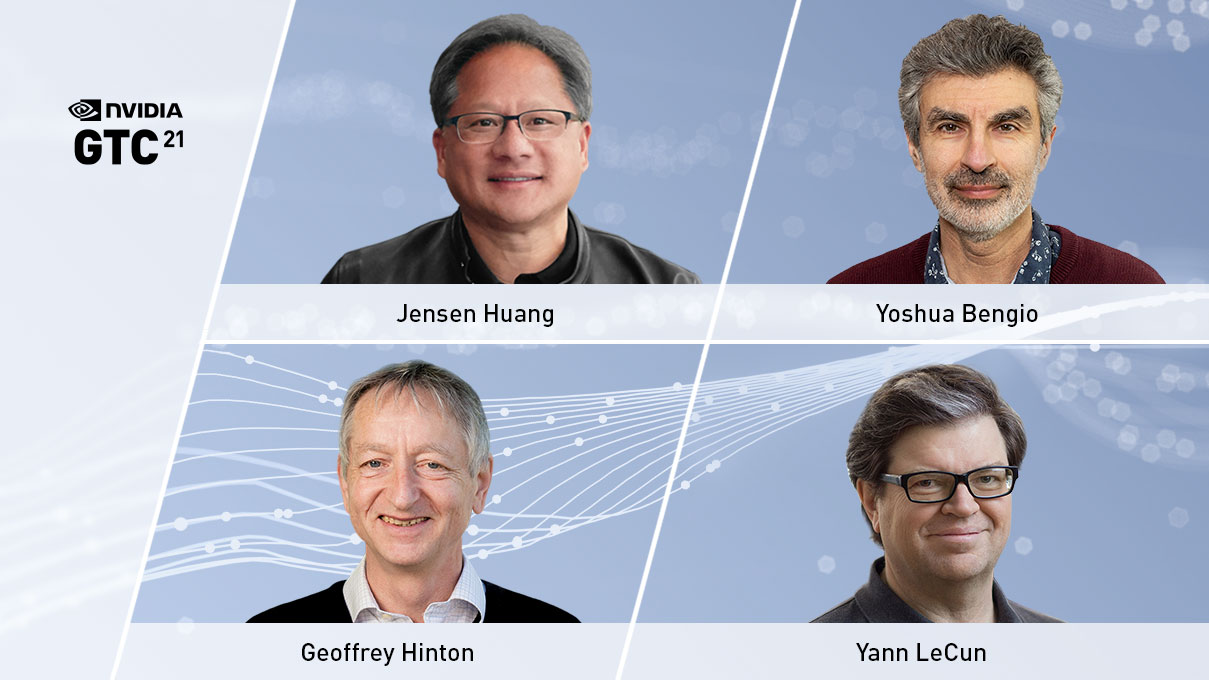

NVIDIA CEO Jensen Huang to Host AI Pioneers Yoshua Bengio, Geoffrey Hinton and Yann LeCun, and Others, at GTC21

Tuesday, March 9, 2021 – NVIDIA today announced that its CEO and founder Jensen Huang will host renowned AI pioneers Yoshua Bengio, Geoffrey Hinton and Yann LeCun at the company’s upcoming technology conference, GTC21, running April 12-16. The event will kick off with a news-filled livestreamed keynote by Huang on April 12 at 8:30 am […]

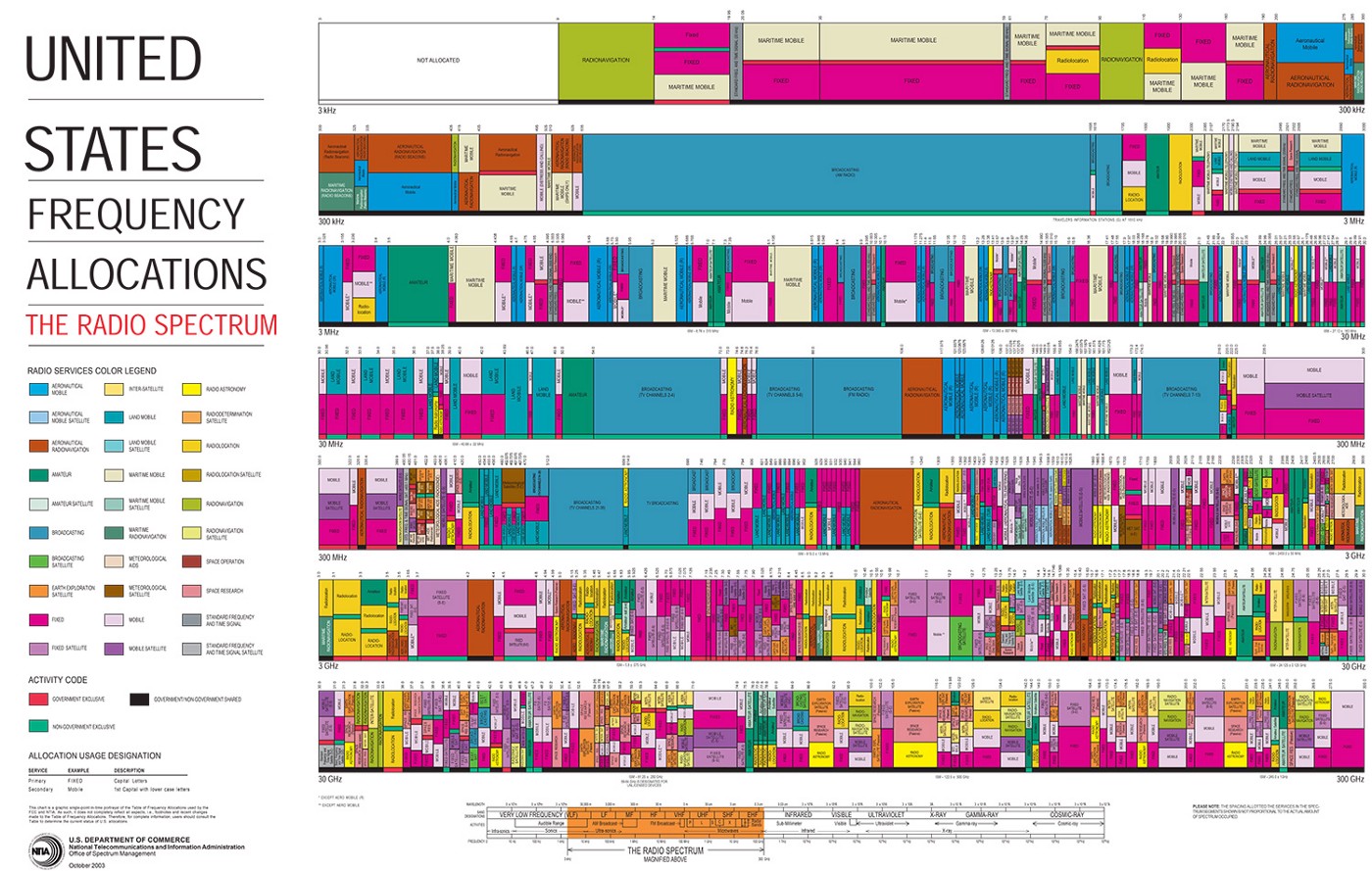

Accelerated Signal Processing with cuSignal

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Why signal processing? Signal processing is all around us. Broadly defined as the manipulation of signals — or mechanisms of transmitting information from one place to another — the field of signal processing exploits embedded information to […]

Rapid Deployment of Vision-Enabled Edge AI Applications Now a Reality with New FRAMOS and Connect Tech Partnership

Ottawa, ON, February 19, 2021 — FRAMOS and Connect Tech today announced their inaugural product resulting from their partnership, the Connect Tech Boson for FRAMOS carrier board. Designed specifically for NVIDIA® Jetson™ Nano and Jetson Xavier NX edge AI applications, Boson for FRAMOS is an all-in-one board-level solution that integrates the FRAMOS Sensor Module Ecosystem […]

Building and Deploying a Face Mask Detection Application Using NGC Collections

This technical article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. AI workflows are complex. Building an AI application is no trivial task, as it takes various stakeholders with domain expertise to develop and deploy the application at scale. Data scientists and developers need easy access to software […]

“Khronos Standard APIs for Accelerating Vision and Inferencing,” a Presentation from the Khronos Group

Neil Trevett, President of the Khronos Group and Vice President of Developer Ecosystems at NVIDIA, presents the “Khronos Standard APIs for Accelerating Vision and Inferencing” tutorial at the September 2020 Embedded Vision Summit. The landscape of processors and tools for accelerating inferencing and vision applications continues to evolve rapidly. Khronos standards, such as OpenCL, OpenVX, […]

NVIDIA Ampere Architecture Powers Record 70+ New GeForce RTX Laptops

GeForce RTX 30 Series Laptop GPUs Offer Up to 2x Efficiency, 3rd Gen Max-Q Technology, Start at $999. Tuesday, January 12, 2021 – A new era of laptops begins today featuring the NVIDIA Ampere architecture, with the launch of 70+ models powered by GeForce® RTX™ 30 Series Laptop GPUs. These next-gen laptops, which start at […]

NVIDIA Introduces GeForce RTX 3060, Next Generation of the World’s Most Popular GPU

Powered by NVIDIA Ampere Architecture, Delivers Up to 10x the Ray-Tracing Performance of GTX 1060; Starting at $329. Tuesday, January 12, 2021 – NVIDIA today announced that it is bringing the NVIDIA Ampere architecture to millions more PC gamers with the new GeForce® RTX™ 3060 GPU. With its efficient, high-performance architecture and the second generation […]

NVIDIA Debuts GeForce RTX 3060 Family for the Holidays

Starting at $399, GeForce RTX 3060 Ti Delivers Amazing Ray-Tracing and DLSS Performance to Season’s Hottest Titles; Available Tomorrow. Tuesday, December 1, 2020 – Bringing its RTX 30 Series lineup of gaming GPUs to the market’s sweet spot, NVIDIA today introduced the GeForce® RTX™ 3060 Ti, the first member of the RTX 3060 family, powered […]

Implementing a Real-time, AI-Based, Face Mask Detector Application for COVID-19

This technical article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Businesses are constantly overhauling their existing infrastructure and processes to be more efficient, safe, and usable for employees, customers, and the community. With the ongoing pandemic, it’s even more important to have advanced analytics apps and services […]

NVIDIA Doubles Down: Announces A100 80GB GPU, Supercharging World’s Most Powerful GPU for AI Supercomputing

Leading Systems Providers Atos, Dell Technologies, Fujitsu, GIGABYTE, Hewlett Packard Enterprise, Inspur, Lenovo, Quanta and Supermicro to Offer NVIDIA A100 Systems to World’s Industries. Monday, November 16, 2020 – SC20 – NVIDIA today unveiled the NVIDIA® A100 80GB GPU, the latest innovation powering the NVIDIA HGX™ AI supercomputing platform, with twice the memory of its predecessor, providing […]

“Optimize, Deploy and Scale Edge AI and Video Analytics Applications,” a Presentation from NVIDIA and Amazon

Edwin Weill, Ph.D., Enterprise Data Scientist at NVIDIA, along with Ryan Vanderwerf, Partner Solutions Architect at Amazon Web Services, presents the “Optimize, Deploy and Scale Edge AI and Video Analytics Applications” tutorial at the September 2020 Embedded Vision Summit. In this Over-the-Shoulder tutorial, you will learn how to use AWS […]

What Is Computer Vision?

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Computer vision is achieved with convolutional neural networks that can use images and video to perform segmentation, classification and detection for many applications. Computer vision has become so good that the days of general managers screaming at […]

Enhancing Robotic Applications with the NVIDIA Isaac SDK 3D Object Pose Estimation Pipeline

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. In robotics applications, 3D object poses provide crucial information to downstream algorithms such as navigation, motion planning, and manipulation. This helps robots make intelligent decisions based on their surroundings. The pose of objects can be used by a […]

NVIDIA Announces Ready-Made NVIDIA DGX SuperPODs, Offered by Global Network of Certified Partners

World’s Most Advanced AI System Now Available in 20-Node Building Block Increments; First Installments Shipping by Yearend to Korea, UK, Sweden and India. Monday, October 5, 2020 – GTC – NVIDIA today announced the NVIDIA DGX SuperPOD™ Solution for Enterprise, the world’s first turnkey AI infrastructure, making it possible for organizations to install incredibly powerful AI supercomputers […]

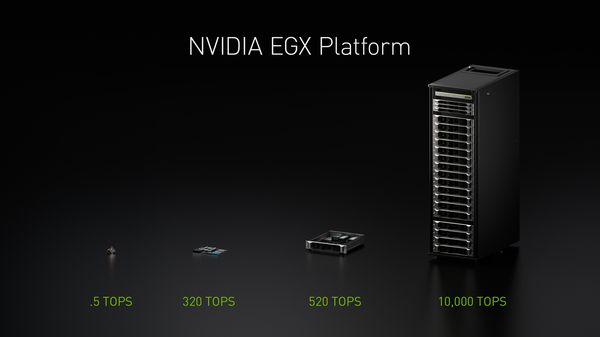

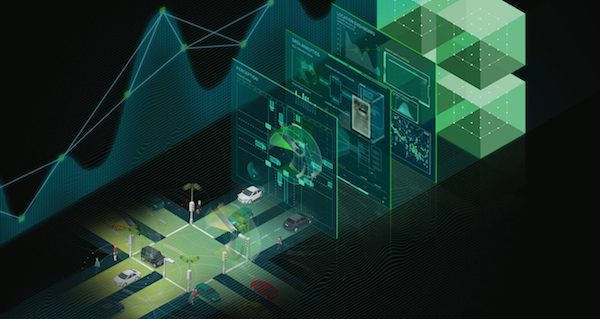

Global Technology Leaders Adopt NVIDIA EGX Edge AI Platform to Infuse Intelligence at the Edge of Every Business

Hundreds of Vision AI, 5G, CloudRAN, Security and Networking Companies Team with Leading Infrastructure Providers to Use NVIDIA EGX to Deliver Edge AI to Every Industry. Monday, October 5, 2020 – GTC – NVIDIA today announced widespread adoption of the NVIDIA EGX™ edge AI platform by the world’s leading tech companies, bringing a new wave […]

NVIDIA Announces Cloud-AI Video-Streaming Platform to Better Connect Millions Working and Studying Remotely

GPU-Accelerated AI Platform, NVIDIA Maxine, Enables Video-Conference Providers to Vastly Improve Streaming Quality and Offer AI-Powered Features Including Super Resolution, Gaze Correction and Live Captions. Monday, October 5, 2020 – GTC – NVIDIA today announced the NVIDIA Maxine platform, which provides developers with a cloud-based suite of GPU-accelerated AI video conferencing software to enhance streaming video […]

NVIDIA Unveils Jetson Nano 2GB: The Ultimate AI and Robotics Starter Kit for Students, Educators, Robotics Hobbyists

At $59, New Developer Kit with Free Online Training and Certification Makes AI Easily Accessible to All. SANTA CLARA, Calif., Oct. 05, 2020 (GLOBE NEWSWIRE) – GTC – NVIDIA today expanded the NVIDIA® Jetson™ AI at the Edge platform with an entry-level developer kit priced at just $59, opening the potential of AI and robotics to […]

Connecting Multiple VC MIPI Cameras to NVIDIA Developer Kits

September 22, 2020 – Vision Components offers software support for Auvidea GmbH adapter boards, which can connect multiple VC MIPI camera modules to NVIDIA Jetson TX2 and Jetson AGX Xavier developer kits. Drivers are provided, enabling developers to start work on their embedded vision projects immediately. Auvidea’s tested and validated J20 adapter board supports all available […]

NVIDIA to Acquire Arm for $40 Billion, Creating World’s Premier Computing Company for the Age of AI

News Highlights: Unites NVIDIA’s leadership in artificial intelligence with Arm’s vast computing ecosystem to drive innovation for all customers; NVIDIA will expand Arm’s R&D presence in Cambridge, UK, by establishing a world-class AI research and education center, and building an Arm/NVIDIA-powered AI supercomputer for groundbreaking research; NVIDIA will continue Arm’s open-licensing model and customer neutrality […]

Deploying a Scalable Object Detection Inference Pipeline

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. This post is the first in a series on Autonomous Driving at Scale, developed with Tata Consultancy Services (TCS). In this post, we provide a general overview of deep learning inference for object detection. Autonomous vehicle […]

NVIDIA Delivers Greatest-Ever Generational Leap with GeForce RTX 30 Series GPUs

Powered by NVIDIA Ampere Architecture, Second-Generation RTX Delivers Up to 2x Performance Over Turing for Real-Time Ray Tracing and AI Gaming. NVIDIA today unveiled its GeForce RTX™ 30 Series GPUs, powered by the NVIDIA Ampere architecture, which delivers the greatest-ever generational leap in GeForce® history. Smashing performance records, the GeForce RTX 3090, 3080 and 3070 […]

Developing Robotics Applications in Python with NVIDIA Isaac SDK

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. The modular and easy-to-use perception stack of NVIDIA Isaac SDK continues to accelerate the development of various mobile robots. Isaac SDK 2020.1 introduces the Python API, making it easier to build robotic applications for those who are […]

CUDA Refresher: The CUDA Programming Model

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. This is the fourth post in the CUDA Refresher series, which has the goal of refreshing key concepts in CUDA, tools, and optimization for beginning or intermediate developers. The CUDA programming model provides an abstraction of GPU […]

MIPI Camera and Driver for NVIDIA Developer Kit

The VC MIPI starter pack, including camera, cable, and driver for the NVIDIA Jetson Nano Developer Kit, makes embedded vision development as easy as can be. August 5, 2020 – Vision Components has added a new starter pack to its MIPI product range for embedded vision projects: users simply connect the camera module and MIPI […]

Accelerating TensorFlow on NVIDIA A100 GPUs

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. The NVIDIA A100, based on the NVIDIA Ampere GPU architecture, offers a suite of exciting new features: third-generation Tensor Cores, Multi-Instance GPU (MIG) and third-generation NVLink. Ampere Tensor Cores introduce a novel math mode dedicated for AI […]

FRAMOS Joins NVIDIA Partner Network to Advance Edge AI-Enabled Vision Applications

Taufkirchen, Germany – July 8, 2020 – FRAMOS today announced it has joined the NVIDIA Partner Network as a Preferred Service Delivery Partner. This collaboration will allow FRAMOS to offer camera solutions to customers using the NVIDIA Jetson Edge AI platform to advance vision application development in robotics, automation, IoT-connected manufacturing, and more. Involvement in […]

CUDA Refresher: The GPU Computing Ecosystem

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. This is the third post in the CUDA Refresher series, which has the goal of refreshing key concepts in CUDA, tools, and optimization for beginning or intermediate developers. Ease of programming and a giant leap in performance […]

Mercedes-Benz and NVIDIA to Build Software-Defined Computing Architecture for Automated Driving Across Future Fleet

Auto and Computer Industry Leaders Intend to Join Forces and Enable Next-Generation Fleet with Software Upgradeability, AI and Autonomous Capabilities. Tuesday, June 23, 2020 – Mercedes-Benz, one of the largest manufacturers of premium passenger cars, and NVIDIA, the global leader in accelerated computing, plan to enter into a cooperation to create a revolutionary in-vehicle computing […]

World’s Top System Makers Unveil NVIDIA A100-Powered Servers to Accelerate AI, Data Science and Scientific Computing

Cisco, Dell Technologies, HPE, Inspur, Lenovo, Supermicro Announce Systems Coming This Summer. Monday, June 22, 2020 – ISC Digital – NVIDIA and the world’s leading server manufacturers today announced NVIDIA A100-powered systems in a variety of designs and configurations to tackle the most complex challenges in AI, data science and scientific computing. More than 50 A100-powered servers from leading […]

Best AI Processor: NVIDIA Jetson Nano Wins 2020 Vision Product of the Year Award

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Compact yet powerful computer for AI at the edge recognized by the Edge AI and Vision Alliance. The small but mighty NVIDIA Jetson Nano has added yet another accolade to the company’s armory of awards. The Edge […]

CUDA Refresher: Getting Started with CUDA

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. This is the second post in the CUDA Refresher series, which has the goal of refreshing key concepts in CUDA, tools, and optimization for beginning or intermediate developers. Advancements in science and business drive an insatiable demand […]

CUDA Refresher: Reviewing the Origins of GPU Computing

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. This is the first post in the CUDA Refresher series, which has the goal of refreshing key concepts in CUDA, tools, and optimization for beginning or intermediate developers. Scientific discovery and business analytics drive an insatiable demand […]

2020 Vision Product of the Year Award Winner Showcase: NVIDIA (AI Processors)

NVIDIA’s Jetson Nano is the 2020 Vision Product of the Year Award Winner in the AI Processors category. NVIDIA’s Jetson Nano delivers the power of modern AI in the smallest supercomputer for embedded and IoT applications. Jetson Nano is a small-form-factor, power-efficient, low-cost and production-ready System on Module (SOM) and Developer Kit that opens […]

NVIDIA’s New Ampere Data Center GPU in Full Production

New NVIDIA A100 GPU Boosts AI Training and Inference up to 20x; NVIDIA’s First Elastic, Multi-Instance GPU Unifies Data Analytics, Training and Inference; Adopted by World’s Top Cloud Providers and Server Makers. SANTA CLARA, Calif., May 14, 2020 (GLOBE NEWSWIRE) – NVIDIA today announced that the first GPU based on the NVIDIA® Ampere architecture, the […]

NVIDIA EGX Edge AI Platform Brings Real-Time AI to Manufacturing, Retail, Telco, Healthcare and Other Industries

Ecosystem Expands with EGX A100 and EGX Jetson Xavier NX Supported by AI-Optimized, Cloud-Native, Secure Software to Power New Wave of 5G and Robotics Applications. SANTA CLARA, Calif., May 14, 2020 (GLOBE NEWSWIRE) – NVIDIA today announced two powerful products for its EGX Edge AI platform: the EGX A100 for larger commercial off-the-shelf servers […]

NVIDIA Releases Jetson Xavier NX Developer Kit with Cloud-Native Support

Cloud-Native Support Comes to Entire Jetson Platform Lineup, Making It Easier to Build, Deploy and Manage AI at the Edge. Thursday, May 14, 2020 – GTC 2020 – NVIDIA today announced availability of the NVIDIA® Jetson Xavier™ NX developer kit with cloud-native support, and the extension of this support to the entire NVIDIA Jetson™ edge computing lineup for […]

Training with Custom Pretrained Models Using the NVIDIA Transfer Learning Toolkit

This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Supervised training of deep neural networks is now a common method of creating AI applications. To achieve accurate AI for your application, you generally need a very large dataset, especially if you create […]

Speeding Up Deep Learning Inference Using TensorRT

This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. This is an updated version of “How to Speed Up Deep Learning Inference Using TensorRT.” This version starts from a PyTorch model instead of the ONNX model, upgrades the sample application to use TensorRT 7, and replaces the […]

NVIDIA VRSS, a Zero-Effort Way to Improve Your VR Image Quality

This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. The Virtual Reality (VR) industry is in the midst of a new hardware cycle, with higher-resolution headsets and better optics as the key focus points for device manufacturers. Similarly, on the software front, there has been […]

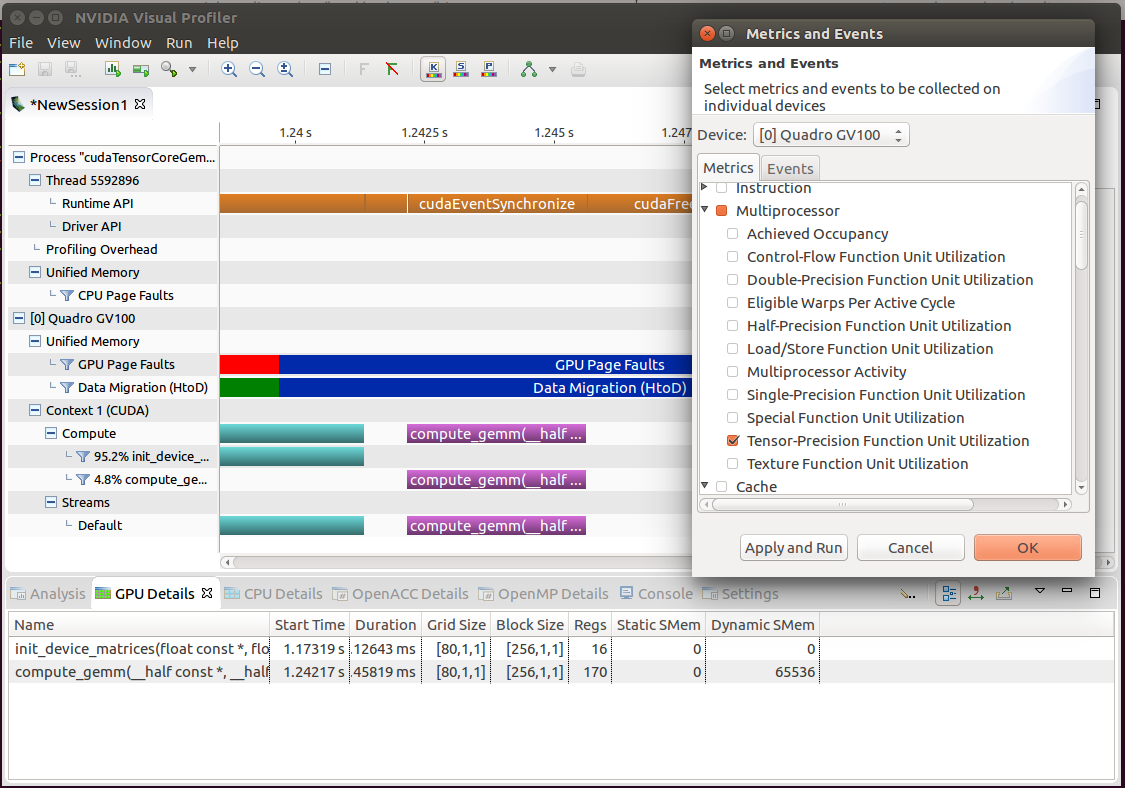

Accelerating WinML and NVIDIA Tensor Cores

This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Every year, clever researchers introduce ever more complex and interesting deep learning models to the world. There is, of course, a big difference between a model that works as a nice demo in isolation and a model that […]

Speeding Up Deep Learning Inference Using TensorFlow, ONNX, and TensorRT

This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Starting with TensorRT 7.0, the Universal Framework Format (UFF) is being deprecated. In this post, you learn how to deploy TensorFlow-trained deep learning models using the new TensorFlow-ONNX-TensorRT workflow. Figure 1 shows the high-level workflow of TensorRT. […]

Learning to Rank with XGBoost and GPU

This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. XGBoost is a widely used machine learning library, which uses gradient boosting techniques to incrementally build a better model during the training phase by combining multiple weak models. Weak models are generated by computing the gradient descent using […]

Laser Focused: How Multi-View LidarNet Presents Rich Perspective for Self-Driving Cars

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. A deep neural network takes a two-stage approach to address lidar processing challenges. Editor’s note: This is the latest post in our NVIDIA DRIVE Labs series, which takes an engineering-focused look at individual autonomous vehicle challenges and how […]

Building a Real-time Redaction App Using NVIDIA DeepStream, Part 2: Deployment